Emergence AI’s new system automatically creates AI agents rapidly in realtime based on the work at hand


Another day, another announcement about AI agents.

Hailed by many market research reports as the big technology trend of 2025, especially in the enterprise, agents are arriving so quickly that it seems we cannot go more than 12 hours or so without the debut of another way to build them, orchestrate them (link them together), or otherwise improve AI tools created for this purpose.

Yet Emergence AI, a startup founded by IBM Research veterans that debuted its own AI agent framework late last year, is out with something novel compared with all the rest: a new AI agent creation platform that lets the human user specify what they are trying to accomplish through text prompts, then hands the job to AI models that create the agents they believe are necessary to accomplish that work.

This new system is literally a no-code, natural-language, AI-powered multi-agent builder, and it works in real time. Emergence AI describes it as a milestone in recursive intelligence and says it aims to simplify and accelerate complex data workflows for enterprise users.

“Our systems allow creativity and intelligence to scale without human bottlenecks, but always within human-defined boundaries,” said Satya Nitta, co-founder and CEO of Emergence AI.

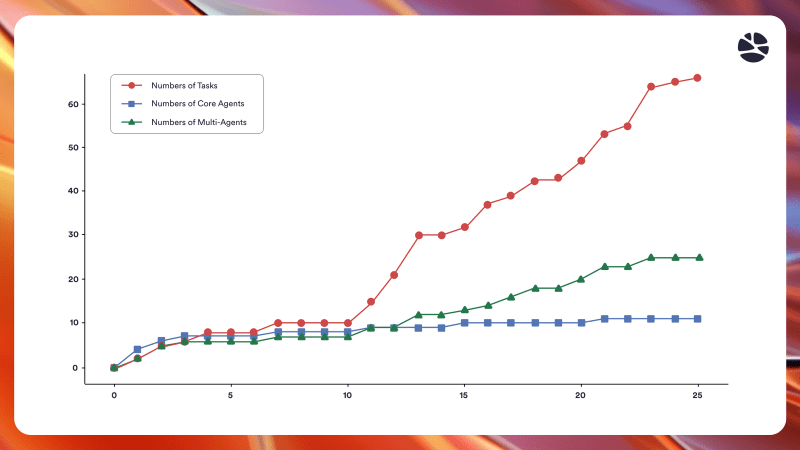

The platform is designed to evaluate incoming tasks, check its existing agent registry, and, if necessary, autonomously create new agents tailored to the needs of a specific enterprise. It can also proactively create agent variants to anticipate related tasks, expanding its problem-solving capabilities over time.

According to Nitta, the orchestrator enables entirely new levels of autonomy in enterprise automation. “Our orchestrator stitches multiple agents together autonomously to create multi-agent systems without human coding. If it does not have an agent for a task, it will auto-generate one and even self-play to learn related tasks by creating new agents itself,” he explained.

A brief demo shown to VentureBeat on a video call last week appeared duly impressive. Nitta showed how a simple text instruction to have the AI classify email set off a wave of newly created agents, displayed on a visual timeline with each agent represented as a colored dot in a column indicating the work it was designed to carry out.

Nitta also said that the user can stop and intervene in this process, supplying additional text instructions, at any time.

Bringing agentic coding to enterprise workflows

Emergence AI focuses on automating data-centric enterprise workflows such as ETL pipeline creation, data migration, transformation, and analysis. The platform’s agents are equipped with agentic loops, long-term memory, and self-improvement capabilities through planning, verification, and self-play. This enables the system not only to complete individual tasks but also to understand and navigate the surrounding task space for adjacent use cases.

“We are in a strange time in the development of technology and our society. We now have AI joining meetings,” Nitta said. “But beyond that, one of the most exciting things that has happened in AI over the past two, three years is that large language models are producing code. They are getting better, but they are probabilistic systems. The code may not always be perfect, and they do not execute, verify, or correct it.”

Emergence AI’s platform seeks to fill this gap by combining the code generation capabilities of large language models with autonomous agent technology. “We are marrying LLMs’ code generation abilities with autonomous agent technology,” Nitta added. “Agentic coding has enormous implications and will be the story of the next year and of several years to come. The disruption is profound.”

Emergence AI highlights the platform’s ability to integrate with leading AI models such as OpenAI’s GPT-4o and GPT-4.5, Anthropic’s Claude 3.7 Sonnet, and Meta’s Llama 3.3, as well as frameworks such as LangChain, Crew AI, and Microsoft AutoGen.

The emphasis is on interoperability, which allows enterprises to bring their own models and third-party agents to the platform.

Expanding multi-agent capabilities

With the current release, the platform expands to include connector agents and data and text intelligence agents, allowing enterprises to build more complex systems without writing code by hand.

The orchestrator’s ability to evaluate its own limitations and take action is central to Emergence AI’s approach.

“A very non-trivial thing that is happening is that when a new task comes in, the orchestrator figures out whether it can solve the task by checking the registry of existing agents,” Nitta said. “If it cannot, it creates a new agent and registers it.”

He added that this process is not merely reactive but generative. “The orchestrator is not just creating agents; it is creating goals for itself. It says, ‘I cannot solve this task, so I will create a goal to make a new agent.’ That is what is truly exciting.”
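The registry behavior Nitta describes can be pictured with a minimal Python sketch. Everything here is hypothetical and for illustration only: the `Orchestrator` class, the `make_agent` factory (a stand-in for model-driven code generation), and the task names are assumptions, not Emergence AI’s actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical type for illustration: an agent maps a task payload to a result.
Agent = Callable[[str], str]


@dataclass
class Orchestrator:
    # Assumed stand-in for the step where a model generates a new agent.
    make_agent: Callable[[str], Agent]
    # Registry of existing agents, keyed by task type.
    registry: Dict[str, Agent] = field(default_factory=dict)
    # Goals the orchestrator has set for itself along the way.
    goals: List[str] = field(default_factory=list)

    def handle(self, task_type: str, payload: str) -> str:
        # Check the registry first and reuse an agent if one exists.
        agent = self.registry.get(task_type)
        if agent is None:
            # No suitable agent: record a goal, create the agent, register it.
            self.goals.append(f"create agent for {task_type}")
            agent = self.make_agent(task_type)
            self.registry[task_type] = agent
        return agent(payload)


def toy_factory(task_type: str) -> Agent:
    # Toy agent that only labels its input; a real agent would do actual work.
    def agent(payload: str) -> str:
        return f"[{task_type}] handled: {payload}"
    return agent


orchestrator = Orchestrator(make_agent=toy_factory)
first = orchestrator.handle("classify_email", "Quarterly invoice attached")
second = orchestrator.handle("classify_email", "Lunch on Friday?")
```

In this sketch the second email reuses the agent created for the first, so the registry holds a single entry and only one goal was ever recorded.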

Lest you worry that the orchestrator will spiral out of control and create too many unnecessary custom agents for every new task, Emergence AI’s research on its platform shows that it has designed, and successfully implemented, an additional requirement: the number of agents created should fall as the system gets closer to completing a task. The orchestrator adds more generally applicable agents to its internal registry for your enterprise and checks that registry before creating anything new.

Prioritizing safety, verification and human oversight

To maintain oversight and ensure responsible use, Emergence AI includes several safety and compliance features. These include guardrails, access controls, verification rubrics to evaluate agent performance, and human-in-the-loop oversight to validate key decisions.

Nitta emphasized that human oversight remains a key component of the platform. “A human in the loop is still important,” he said. “You need to verify that the multi-agent system or the new agents created are doing the task you want and went in the right direction.” The company has structured the platform with clear checkpoints and verification layers to ensure that enterprises retain control and visibility over automated processes.

Although pricing information has not been disclosed, Emergence AI invites enterprises to contact it directly for pricing details. In addition, the company plans another update in May 2025, which will extend the platform’s capabilities to support deployment in any cloud environment and allow expanded agent creation through self-play.

Looking ahead: expanding enterprise automation

Emergence AI is headquartered in New York, with offices in California, Spain, and India. The company’s leadership and engineering team includes alumni of IBM Research, Google Brain, the Allen Institute for AI, Amazon, and Meta.

Emergence AI describes its work as still in the early stages, but it believes the orchestrator’s recursive intelligence could open new possibilities for enterprise automation and, eventually, for broader AI-driven systems.

“We believe that agents will always be necessary,” Nitta said. “Even as models grow more powerful, generalizing in the action space is very hard. There is plenty of room for people like us to advance this over the next decade.”

2025-04-01 16:12:00