Mahmoud Khalil publishes op-ed blasting Columbia University

The anti -Israel organizer, Mahmoud Khalil, represented the Colombia University administration in an opening article published in the school newspaper on Friday.

The joints, entitled “A Message to Colombia”, accuse the Foundation of the Foundation of Pelicious. He continues to compare President Donald Trump’s campaign against the anti -Israel demonstrators to the indifference in Colombia towards the Palestinians, while including other students who were “extracted by the state.”

Khalil wrote, “The situation reminds us strangely when it escaped from the brutality of the regime of Bashar al -Assad in Syria and resorted to Lebanon,” Khalil wrote. “The logic that the federal government uses to target psychological and peer

He continued accusing Colombia managers of making “public hysteria about anti -Semitism without mentioning tens of thousands of Palestinians who were killed under bombs made of your dollars.”

ICE deportation colleges have diluted discounts and financial aid to illegal immigrants: Republican representative

Mahmoud Khalil started at Columbia University in an article published on Friday. (AP Photo/Ted Shafrey, left; Barry Williams/New York Daily News/Tribune News Service via Getty Images, to the right.)

Some students also targeted students in Colombia, who says it helped create a false sense that anti -Semitism was spreading across the campus. He also pointed to the efforts made by some students to reveal the anti -Israel demonstrators, although he did not call any individuals.

The Trump administration orders the Man Maryland to return by accidentally to El Salvador Prison

“Especially in light of the dual grades program with the University of Tel Aviv, I can only think that if I am in Palestine, some of these students will be the ones who stop me at the checkpoints, the invasion of my university, or the experiment of drones wipe in my community, or kill in the military Camp in the military Camp. The victim claims in the semester.”

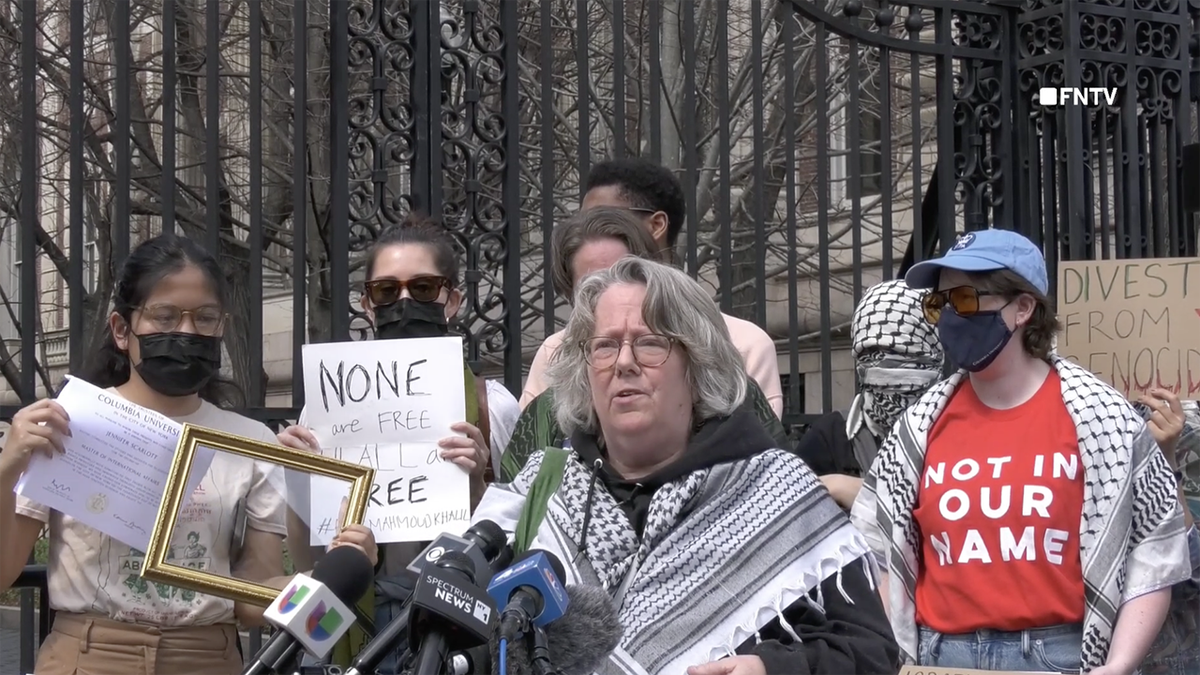

A graduate of Colombia University is speaking before tearing her degree during a protest on Saturday, March 29. (Freedom News TV)

“Columbia faculty members who receive themselves on their back for their progressive tendencies, but they are satisfied with limiting their participation in the performance statements: What requires you to resist the destruction of your university? Do your positions deserve more than the lives of your students and the safety of your work?” He added.

The message comes weeks after Ice Khalil’s agents were held in New York City in early March. The Ministry of Internal Security claimed that it “led the alignment activities to Hamas, a dedicated terrorist organization.”

The demonstrators display a big banner reading “Mahmoud Khalil Al -Hurra, and the name of the trustees” from a balcony, where students make themselves to the doors of Colombia University, demanding accountability from the university secretaries after the arrest of Mahmoud Khalil, in New York, on April 2, 2025. (Selcuk Acar/Anadolu via Getty Images)

Last week, several Columbia University The students chained to a gate outside the Church of St. Paul at the school in protest against the arrest of Khalil.

Click here to get the Fox News app

Students demanded that the Foundation be issued the names of the trustees “who gave Mahmoud Khalil’s name to the ice.” The Solidarity Committee, Colombia Palestine, wrote to X that “we will not leave until our request is met.”

The school denies that any of its principals requested the presence of ICE on the campus.

2025-04-06 17:17:00