MIT Researchers Develop Methods to Control Transformer Sensitivity with Provable Lipschitz Bounds and Muon

Training large-scale transformers stably has long been a challenge in deep learning, especially as models grow in size and expressivity. MIT researchers tackle a persistent problem at its root: the unstable growth of activations and the loss spikes caused by unconstrained weight and activation norms. Their solution is to enforce provable Lipschitz bounds on the transformer by *spectrally regulating the weights*, with no use of activation normalization, QK norm, or logit softcapping tricks.

What is a Lipschitz bound, and why enforce it?

A Lipschitz bound on a neural network quantifies the maximum amount by which the output can change in response to input (or weight) perturbations. Mathematically, a function $f$ is $K$-Lipschitz if:

$$\|f(x_1) - f(x_2)\| \le K\,\|x_1 - x_2\| \quad \forall\, x_1, x_2$$

- Lower Lipschitz bound ⇒ greater robustness and predictability.

- It is crucial for stability, adversarial robustness, privacy, and generalization: a lower bound means the network is less sensitive to input changes or adversarial noise.
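Take the simplest case of the definition, a single linear layer $f(x) = Wx$. The tightest $K$ in the L2 norm is the spectral norm of $W$, which is its largest singular value. The NumPy sketch below uses an arbitrary random matrix, chosen only for illustration. It checks that random input pairs never exceed that ratio, and that the top right-singular vector attains it exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((64, 32))  # illustrative weight matrix, not from the paper

# Lipschitz constant of x -> W x in the L2 norm = largest singular value of W
K = np.linalg.norm(W, ord=2)

def empirical_ratio(W, x1, x2):
    """||f(x1) - f(x2)|| / ||x1 - x2|| for the linear map f(x) = W x."""
    return np.linalg.norm(W @ x1 - W @ x2) / np.linalg.norm(x1 - x2)

# Random input pairs: every observed ratio stays at or below K
ratios = [empirical_ratio(W, rng.standard_normal(32), rng.standard_normal(32))
          for _ in range(1000)]
print(f"K = {K:.3f}, worst observed ratio = {max(ratios):.3f}")

# The bound is attained along the top right-singular vector of W
_, S, Vt = np.linalg.svd(W)
v = Vt[0]
print(f"ratio along top singular direction = {empirical_ratio(W, v, np.zeros(32)):.3f}")
```

Random pairs rarely come close to $K$, which is one reason sampled sensitivity is a poor substitute for a provable bound.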

Motivation and problem statement

Traditionally, training transformers stably at scale has relied on a variety of "band-aid" stabilization tricks:

- Layer normalization

- QK normalization

- Logit tanh softcapping

But these do not directly address the underlying growth of the spectral norm (largest singular value) of the weights. That growth is a root cause of exploding activations and training instability, especially in large models.
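Spectral-norm growth matters because of the bound $\|h_L\| \le \prod_\ell \sigma_{\max}(W_\ell)\,\|x\|$, which holds for a stack of linear layers separated by 1-Lipschitz nonlinearities such as ReLU. The sketch below is a toy model, not the paper's architecture. It compares layers whose spectral norms have drifted to 3 (an arbitrary illustrative value) with the same layers rescaled to spectral norm 1. In the first case the guaranteed ceiling on activation size grows geometrically with depth. In the second it stays at $\|x\|$.

```python
import numpy as np

rng = np.random.default_rng(1)
depth, d = 12, 64
x = rng.standard_normal(d)

def spectral_norm(W):
    """Largest singular value of W."""
    return np.linalg.norm(W, ord=2)

def forward(weights, x):
    """Linear layers with ReLU (1-Lipschitz) in between; no normalization layers."""
    h = x
    for W in weights:
        h = np.maximum(W @ h, 0.0)
    return h

raw = [rng.standard_normal((d, d)) for _ in range(depth)]
grown = [3.0 * W / spectral_norm(W) for W in raw]   # spectral norm drifted to 3 (illustrative)
capped = [W / spectral_norm(W) for W in raw]        # spectral norm held at 1

results = {}
for name, ws in [("grown", grown), ("capped", capped)]:
    # Product of per-layer spectral norms times ||x|| bounds the output norm
    bound = np.prod([spectral_norm(W) for W in ws]) * np.linalg.norm(x)
    out = np.linalg.norm(forward(ws, x))
    results[name] = (out, bound)
    print(f"{name:6s} ||h_L|| = {out:.3e}  guaranteed ceiling = {bound:.3e}")
```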

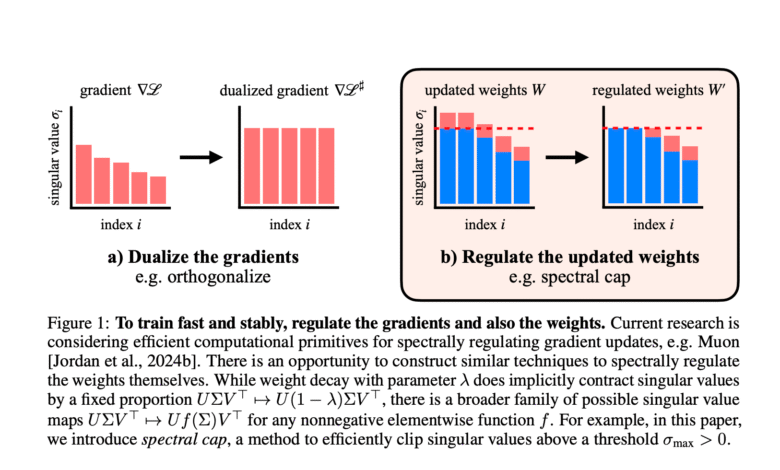

The central hypothesis: if we spectrally regulate the weights themselves, rather than only the optimizer updates or the activations, we can maintain tight control over Lipschitzness and potentially fix instability at its source.

Main innovations

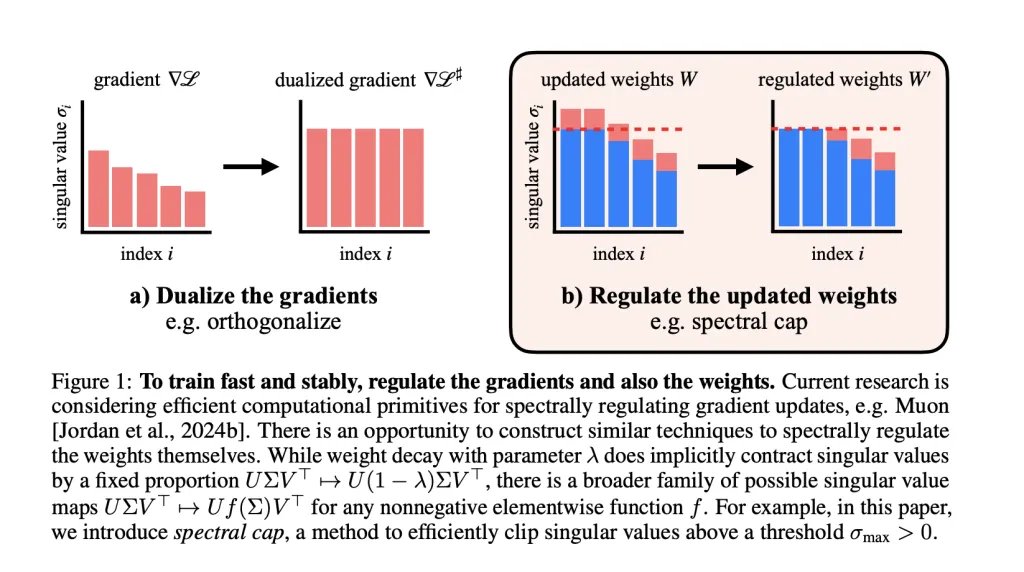

Spectral weight regulation and the Muon optimizer

- The Muon optimizer spectrally regularizes gradient updates, ensuring each step does not increase the spectral norm beyond a set limit.

- The researchers extend this regulation to the weights themselves: after each step, they apply operations that cap the singular values of every weight matrix. As a result, activation norms stay remarkably small, rarely exceeding values compatible with FP8 precision in their GPT-2-scale transformers.
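The two ingredients can be sketched in NumPy. Muon replaces a gradient-like matrix with an approximation of its orthogonal polar factor $UV^\top$, whose singular values are all about 1. By the triangle inequality, a step of size `lr` can then raise the weight's spectral norm by at most about `lr`. This is a simplified sketch under several assumptions:

- The real Muon uses momentum and a tuned quintic Newton-Schulz iteration with only a few steps. Here the simpler cubic iteration stands in for it.
- Random matrices are fed in as stand-in gradients.
- The post-step weight cap is a hypothetical exact-SVD clip, not the paper's own projection.

```python
import numpy as np

def orthogonalize(G, steps=30):
    """Approximate the polar factor U V^T of G with a cubic Newton-Schulz iteration.

    Muon itself uses a tuned quintic iteration with few steps; this cubic form is a
    simpler stand-in that converges to U V^T for full-rank G.
    """
    X = G / np.linalg.norm(G)          # Frobenius scaling puts all singular values in (0, 1]
    for _ in range(steps):
        X = 1.5 * X - 0.5 * X @ X.T @ X
    return X

def cap_singular_values(W, sigma_max):
    """Hypothetical post-step projection: clip every singular value at sigma_max."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    return (U * np.minimum(S, sigma_max)) @ Vt

rng = np.random.default_rng(0)
W = rng.standard_normal((64, 32)) * 0.05
lr, sigma_max = 0.02, 1.0

for step in range(200):
    G = rng.standard_normal(W.shape)        # stand-in for a real gradient
    update = orthogonalize(G)               # all singular values ~1
    before = np.linalg.norm(W, 2)
    W = W - lr * update
    # Triangle inequality: ||W - lr*O||_2 <= ||W||_2 + lr * ||O||_2
    assert np.linalg.norm(W, 2) <= before + lr * np.linalg.norm(update, 2) + 1e-9
    W = cap_singular_values(W, sigma_max)   # weight regulation after every step

print(f"final spectral norm: {np.linalg.norm(W, 2):.4f}")
```

The two steps divide the work. The orthogonalized update limits how fast the spectral norm can move, and the cap enforces a hard ceiling regardless of how many steps are taken.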

Removing stability tricks

In all experiments, no layer normalization, no QK norm, and no logit tanh softcapping were used. Yet:

- The maximum activation entries in their GPT-2-scale transformer never exceeded ~100, while the unconstrained baseline surpassed 148,000.

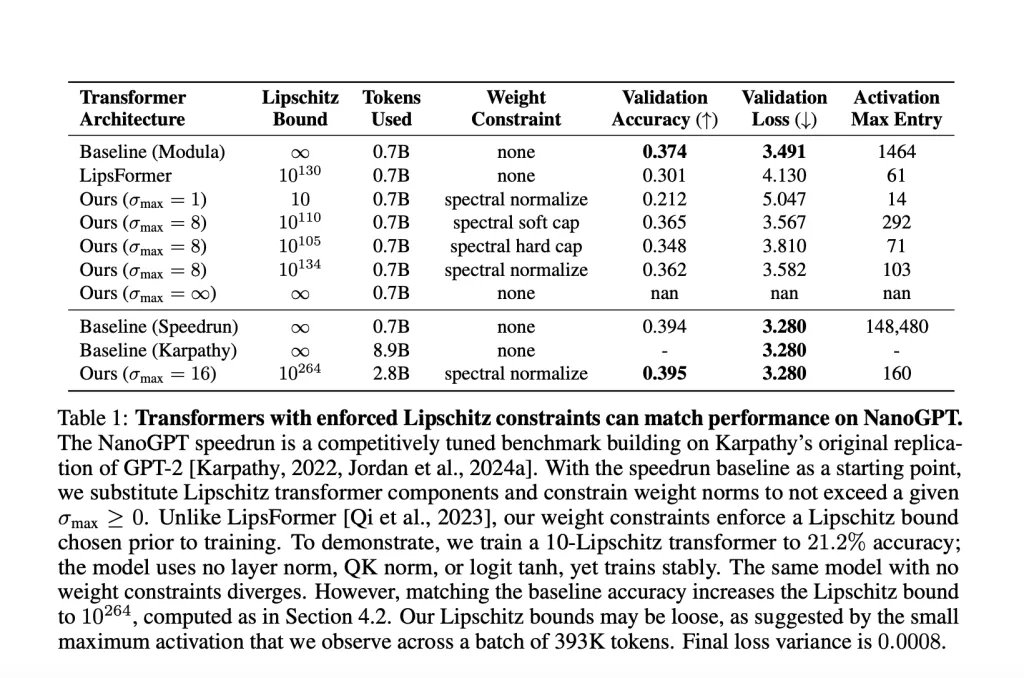

Sample table (NanoGPT experiment)

| Model | Max activation | Layer stability tricks | Validation accuracy | Lipschitz bound |
|---|---|---|---|---|
| Speedrun baseline | 148,480 | Yes | 39.4% | ∞ |
| Lipschitz transformer | 160 | None | 39.5% | 10²⁶⁴ |

Methods for enforcing Lipschitz constraints

A variety of weight-norm constraint methods were explored and compared on their ability to:

- Maintain high performance,

- Guarantee a Lipschitz bound, and

- Optimize the performance-Lipschitz tradeoff.

Techniques

- Weight decay: the standard method, but not always strict on the spectral norm.

- Spectral normalization: ensures the top singular value is capped, but may affect all singular values globally.

- Spectral soft cap: a new method that smoothly and efficiently applies $\sigma \to \min(\sigma_{\max}, \sigma)$ to all singular values in parallel, using odd polynomial approximations. It is co-designed with Muon's high-stable-rank updates to give tight bounds.

- Spectral hammer: sets only the largest singular value to $\sigma_{\max}$; best suited to the AdamW optimizer.
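The four constraint methods can be compared on a small random matrix. This NumPy sketch is illustrative only. It uses exact SVDs for clarity, whereas the paper's soft cap uses odd polynomial approximations precisely to avoid them. The function names and the default `sigma_max = 1.0` are hypothetical.

The last helper shows why odd polynomials work. Since $WW^\top W = U\Sigma^3V^\top$, the map $p(W) = aW + bWW^\top W$ sends each singular value $s$ to $as + bs^3$ without ever computing the SVD.

```python
import numpy as np

def singular_values(W):
    return np.linalg.svd(W, compute_uv=False)

def weight_decay(W, lam=0.01):
    """Shrinks every singular value by (1 - lam); gives no hard ceiling on its own."""
    return (1.0 - lam) * W

def spectral_normalize(W, sigma_max=1.0):
    """If the top singular value exceeds sigma_max, rescale the whole matrix down to it.
    All singular values shrink by the same factor."""
    top = np.linalg.norm(W, 2)
    return W * min(1.0, sigma_max / top)

def spectral_hammer(W, sigma_max=1.0):
    """Set only the largest singular value to sigma_max, leaving the rest untouched.
    Bounds the spectral norm only if the second-largest value is already <= sigma_max."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    S[0] = sigma_max
    return (U * S) @ Vt

def spectral_cap_exact(W, sigma_max=1.0):
    """The soft cap's target, computed exactly: sigma -> min(sigma_max, sigma) for every singular value."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    return (U * np.minimum(S, sigma_max)) @ Vt

def odd_poly(W, a, b):
    """p(W) = a*W + b*W W^T W maps each singular value s to a*s + b*s**3 (no SVD needed)."""
    return a * W + b * (W @ W.T @ W)

rng = np.random.default_rng(0)
W = rng.standard_normal((8, 6))
print(f"{'original':20s}", np.round(singular_values(W), 3))
for fn in (weight_decay, spectral_normalize, spectral_hammer, spectral_cap_exact):
    print(f"{fn.__name__:20s}", np.round(singular_values(fn(W)), 3))
```

The printout makes the tradeoffs in the list concrete. Normalization scales every singular value, the hammer touches only the top one, and the exact cap clips just the values above `sigma_max`.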

Experimental results and insights

Model evaluation at various scales

- Shakespeare (small transformer, <2-Lipschitz):

  - Achieves 60% validation accuracy with a provable Lipschitz bound below 2.

  - Outperforms the unconstrained baseline in validation loss.

- NanoGPT (145M parameters):

  - With a Lipschitz bound <10, validation accuracy is 21.2%.

  - Matching the strong unconstrained baseline (39.4% accuracy) required a large upper bound of $10^{264}$. This highlights how, for now, strict Lipschitz constraints often trade off against expressivity at large scales.

Weight constraint method efficiency

- Muon + spectral cap: leads the tradeoff frontier, achieving lower Lipschitz constants for matched or better validation loss compared with AdamW + weight decay.

- Spectral soft cap and spectral normalization (under Muon) give the best frontier on the loss-Lipschitz tradeoff.

Stability and robustness

- Adversarial robustness increases sharply at lower Lipschitz bounds.

- In experiments, models with a constrained Lipschitz constant suffered a much milder accuracy drop under adversarial attack than unconstrained baselines.
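Here is one standard way a Lipschitz bound turns into robustness. It is a general result, not a method from the paper. If the map from inputs to logits is $K$-Lipschitz in the L2 norm, each logit difference $z_i - z_j$ is at most $\sqrt{2}K$-Lipschitz. So no perturbation with $\|\delta\| < \text{margin} / (\sqrt{2}K)$ can change the predicted class. A minimal Python sketch with hypothetical logits shows how this certified radius shrinks as $K$ grows.

```python
import math

def certified_radius(logits, K):
    """L2 radius within which the argmax provably cannot change,
    assuming the input-to-logits map is K-Lipschitz in the L2 norm."""
    top1, top2 = sorted(logits, reverse=True)[:2]
    margin = top1 - top2
    # Each difference z_i - z_j = (e_i - e_j)^T z is sqrt(2)*K-Lipschitz
    return margin / (math.sqrt(2) * K)

logits = [4.0, 1.0, 0.5]   # hypothetical model output
for K in (2.0, 10.0, 1e3):
    print(f"K = {K:g}: certified radius = {certified_radius(logits, K):.4f}")
```

With the same margin, the radius is inversely proportional to $K$. An astronomically large bound such as $10^{264}$ therefore certifies essentially nothing, even if the model is empirically robust.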

Activation magnitudes

- With spectral weight regulation, maximum activations remain tiny (near FP8-compatible) compared with the unbounded baselines, even at scale.

- This opens avenues for low-precision training and inference on hardware, where smaller activations reduce compute, memory, and power costs.
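As a rough check of the FP8 claim, the peak activations from the sample NanoGPT table can be compared with the largest finite values of the two common FP8 formats: 448 for E4M3 and 57,344 for E5M2. This is a simplification. Practical FP8 pipelines also apply per-tensor scaling factors and lose precision well before overflow, so the sketch only tests whether the unscaled peak value fits.

```python
# Largest finite magnitudes of the two common FP8 formats (OCP FP8 specification)
FP8_MAX = {"E4M3": 448.0, "E5M2": 57344.0}

def fits_fp8(peak_abs_activation, fmt):
    """True if the peak activation magnitude is representable in `fmt` without scaling."""
    return abs(peak_abs_activation) <= FP8_MAX[fmt]

# Peak activations from the sample NanoGPT table
peaks = {"Speedrun baseline": 148_480, "Lipschitz transformer": 160}
for model, peak in peaks.items():
    print(model, {fmt: fits_fp8(peak, fmt) for fmt in FP8_MAX})
```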

Limitations and open questions

- Selecting the "tightest" tradeoff for weight norms, logit scaling, and attention scaling still relies on sweeps rather than principle.

- Current upper bounds are loose: calculated global bounds can be astronomically large (e.g., $10^{264}$), while real activation norms remain small.

- It is unclear whether unconstrained baseline performance can be matched with strictly small Lipschitz bounds as scale increases; more research is needed.
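One generic reason such global bounds can be loose is that a bound built by multiplying per-layer Lipschitz constants ignores how the layers interact. Take this toy two-layer example, with hypothetical diagonal weights that are not from the paper. Each layer has spectral norm 100, so the layerwise bound is $10^4$. Yet the composed map is the identity, and its true Lipschitz constant is 1.

```python
import numpy as np

# Two hypothetical layers: each stretches one direction and squashes the other
W1 = np.diag([100.0, 0.01])
W2 = np.diag([0.01, 100.0])

# Composed bound: product of per-layer spectral norms
layerwise_bound = np.linalg.norm(W2, 2) * np.linalg.norm(W1, 2)
# Exact Lipschitz constant of x -> W2 @ W1 @ x
true_constant = np.linalg.norm(W2 @ W1, 2)
print(f"layerwise bound = {layerwise_bound:.1f}, true constant = {true_constant:.1f}")
```

Over many layers these per-layer gaps multiply, because the logarithm of the composed bound is the sum of the per-layer logarithms.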

Conclusion

Spectral weight regulation, especially when paired with the Muon optimizer, can stably train large transformers with enforced Lipschitz bounds, without activation normalization or other band-aid tricks. This addresses instability at a deeper level and keeps activations in a compact, predictable range. It also greatly improves adversarial robustness and, potentially, efficiency.

This line of work points to new, efficient computational primitives for neural network regulation, with broad applications in privacy, safety, and low-precision AI deployment.


Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to real-world challenges. With a keen interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.


2025-08-02 20:54:00