Google AI Ships TimesFM-2.5: Smaller, Longer-Context Foundation Model That Now Leads GIFT-Eval (Zero-Shot Forecasting)

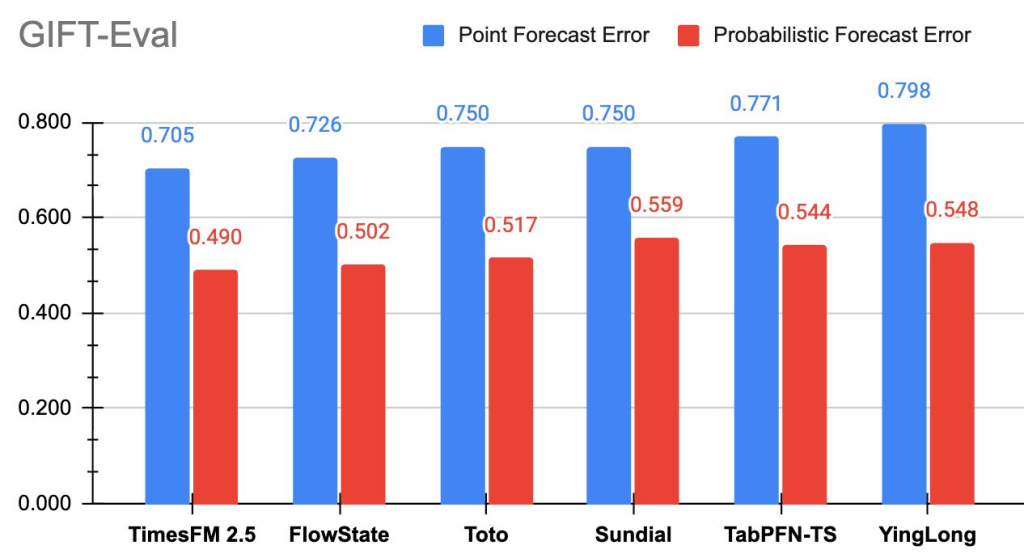

Google Research has released TimesFM-2.5, a 200M-parameter, decoder-only time-series foundation model with a 16K context length and native probabilistic forecasting support. The new checkpoint is live on Hugging Face. On GIFT-Eval, TimesFM-2.5 now tops the leaderboard across accuracy metrics (MASE, CRPS) among zero-shot foundation models.

What is time-series forecasting?

Time-series forecasting is the practice of analyzing sequential data points collected over time to identify patterns and predict future values. It underpins important applications across industries, including forecasting product demand in retail, monitoring weather and precipitation trends, and optimizing large-scale systems such as supply chains and power grids. By capturing temporal dependencies and seasonal variations, time-series forecasting enables data-driven decisions in dynamic environments.

What changed in TimesFM-2.5 vs v2.0?

- Parameters: 200M (down from 500M in 2.0).

- Max context: 16,384 points (up from 2,048).

- Quantiles: Optional 30M-parameter quantile head for continuous quantile forecasts up to a 1K horizon.

- Inputs: No "frequency" indicator required; new inference flags (flip invariance, positivity inference, quantile-crossing fix).

- Roadmap: Upcoming Flax implementation for faster inference; covariates support slated to return; documentation to be expanded.
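The article only names the three inference flags. As rough intuition for what options of this kind typically do, here is a minimal pure-Python sketch. It is not TimesFM code: the function names are hypothetical, and the specific choices (sorting to repair crossed quantiles, clipping at zero when the history is non-negative, averaging a forecast with its negated mirror) are assumptions made for illustration only.

```python
from typing import Callable, List, Sequence

# Hypothetical type: any function mapping a history to a list of forecasts.
Forecaster = Callable[[Sequence[float]], List[float]]


def fix_quantile_crossing(quantiles_per_step: List[List[float]]) -> List[List[float]]:
    """Each inner list holds forecasts for increasing quantile levels at one
    horizon step. Sorting makes them non-decreasing, so a lower quantile
    never exceeds a higher one (assumed repair strategy)."""
    return [sorted(step) for step in quantiles_per_step]


def clip_if_history_nonnegative(history: Sequence[float],
                                forecast: Sequence[float]) -> List[float]:
    """If every observed value is >= 0, assume the series is non-negative
    and clip negative forecasts to zero; otherwise leave them unchanged."""
    if min(history) >= 0:
        return [max(0.0, v) for v in forecast]
    return list(forecast)


def flip_invariant(forecaster: Forecaster) -> Forecaster:
    """Wrap a forecaster so that negating the input negates the output,
    by combining the direct forecast with the forecast of the mirrored
    series: g(x) = (f(x) - f(-x)) / 2."""
    def wrapped(history: Sequence[float]) -> List[float]:
        direct = forecaster(history)
        mirrored = forecaster([-v for v in history])
        return [(d - m) / 2.0 for d, m in zip(direct, mirrored)]
    return wrapped


def toy_forecaster(history: Sequence[float]) -> List[float]:
    """Stand-in model: last value plus a constant bias, two steps ahead.
    The bias makes it deliberately NOT flip-invariant."""
    return [history[-1] + 1.0] * 2
```

Wrapping `toy_forecaster` with `flip_invariant` removes the constant bias, so a series and its negation receive forecasts that are exact mirrors of each other. Whether the released model implements its flags this way is not stated in the article.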

Why does a longer context matter?

16K historical points allow a single forward pass to capture multi-seasonal structure, regime breaks, and low-frequency components without tiling or hierarchical stitching. In practice, this reduces pre-processing heuristics and improves stability in domains where context >> horizon (for example, energy load or retail demand). The longer context is a core design change explicitly noted for 2.5.
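To make the context sizes concrete, the following small sketch converts a context length into calendar coverage and keeps only the most recent window of a longer series. The two context lengths come from the article; the helper names and the sampling rates are illustrative assumptions, and the truncation shown is generic preprocessing rather than anything taken from the TimesFM library.

```python
from typing import List, Sequence

MAX_CONTEXT_V25 = 16_384  # context length reported for TimesFM-2.5
MAX_CONTEXT_V20 = 2_048   # context length reported for v2.0


def days_covered(points: int, points_per_day: float) -> float:
    """How many days of history fit into `points` observations."""
    return points / points_per_day


def latest_window(series: Sequence[float],
                  max_context: int = MAX_CONTEXT_V25) -> List[float]:
    """Keep only the most recent `max_context` observations.
    Shorter series are returned unchanged."""
    if max_context <= 0:
        raise ValueError("max_context must be positive")
    return list(series[-max_context:])
```

At hourly resolution (24 points per day), `days_covered(16_384, 24)` is roughly 683 days, against roughly 85 days for 2,048 points. The larger window therefore holds many weekly cycles and more than one full year, which is what lets a single pass see several seasonalities at once.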

What is the research context?

TimesFM's core thesis, a single decoder-only foundation model for forecasting, was introduced in the ICML 2024 paper and on the Google Research blog. GIFT-Eval (Salesforce) emerged to standardize evaluation across domains, frequencies, horizon lengths, and univariate/multivariate regimes, with a publicly hosted leaderboard.

Key takeaways

- Smaller, faster model: TimesFM-2.5 runs with 200M parameters (less than half the size of 2.0) while improving accuracy.

- Longer context: Supports a 16K input length, enabling forecasts with deeper historical coverage.

- Benchmark leader: Now ranks #1 among zero-shot foundation models on GIFT-Eval for both MASE (point accuracy) and CRPS (probabilistic accuracy).

- Production-ready: Efficient design and quantile forecasting support make it suitable for real-world deployment across industries.

- Broad availability: The model is live on Hugging Face.
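For readers unfamiliar with the two metrics named in the takeaways, here is a minimal sketch of how they are commonly computed. MASE divides the forecast's mean absolute error by the in-sample error of a (seasonal) naive forecast. CRPS is often approximated by averaging the pinball (quantile) loss over a set of quantile levels. The function names are hypothetical, and the article does not say whether the leaderboard uses exactly these formulas or normalizations.

```python
from typing import Dict, Sequence


def mase(actual: Sequence[float], forecast: Sequence[float],
         history: Sequence[float], season: int = 1) -> float:
    """Mean absolute scaled error: forecast MAE divided by the in-sample
    MAE of a naive forecast that repeats the value from `season` steps ago."""
    naive_errors = [abs(history[i] - history[i - season])
                    for i in range(season, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    if scale == 0:
        raise ValueError("history is constant at this lag; MASE is undefined")
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / scale


def pinball_loss(actual: Sequence[float], quantile_forecast: Sequence[float],
                 level: float) -> float:
    """Average pinball loss of a forecast for quantile `level` in (0, 1).
    Under-prediction is weighted by `level`, over-prediction by 1 - level."""
    total = 0.0
    for a, q in zip(actual, quantile_forecast):
        diff = a - q
        total += level * diff if diff >= 0 else (level - 1.0) * diff
    return total / len(actual)


def mean_pinball_loss(actual: Sequence[float],
                      forecasts_by_level: Dict[float, Sequence[float]]) -> float:
    """Average pinball loss across quantile levels, a common discrete
    stand-in for CRPS (up to a constant factor)."""
    losses = [pinball_loss(actual, f, level)
              for level, f in forecasts_by_level.items()]
    return sum(losses) / len(losses)
```

A MASE below 1 means the forecast beats the naive baseline on average. For both metrics, lower is better.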

Summary

TimesFM-2.5 shows that foundation models for forecasting are moving past proof of concept into practical, production-ready tools. By cutting the parameter count by more than half while extending context length and leading GIFT-Eval on both point and probabilistic accuracy, it marks a step change in efficiency and capability. With Hugging Face access already live and BigQuery/Model Garden integration on the way, the model is positioned to accelerate adoption of zero-shot time-series forecasting in real-world pipelines.

Check out the model card (HF), repo, benchmark, and paper.

Michal Sutter is a data science professional with a master's degree in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.


2025-09-16 16:29:00