Google Cloud reveals how AI is reshaping cybersecurity defense

In Google's sleek Singapore office at Block 80, Level 3, Mark Johnston stood before a room of technology journalists at 1:30 pm with a startling admission: after five decades of cybersecurity development, defenders are still losing the war. “In 69% of incidents in Japan and Asia Pacific, organizations were notified of their own breaches by external entities,” the Director of Google Cloud's Office of the CISO for Asia Pacific revealed, as his presentation slide showed a damning statistic: most companies cannot detect when they have been breached.

What emerged during the “Cybersecurity in the AI Era” session was an honest assessment of how Google Cloud's AI technologies are attempting to reverse decades of defensive failures, even as the same artificial intelligence tools give attackers unprecedented capabilities.

Historical context: 50 years of defensive failure

The crisis is not new. Johnston traced the problem back to cybersecurity pioneer James B. Anderson's 1972 observation that “the systems we really use do not protect themselves,” a challenge that has persisted despite decades of technological progress. “What James B Anderson said in 1972 still applies today,” Johnston said, highlighting how basic security problems remain unsolved even as technology evolves.

The persistence of basic vulnerabilities compounds this challenge. “More than 76% of breaches start with the basics”: the configuration errors and compromised credentials that have plagued organizations for decades. Johnston cited a recent example: “Last month, a very common product that most organizations have used at some point, Microsoft SharePoint, also had what we call a zero-day vulnerability … and during that time, it was attacked continuously and abused.”

The AI arms race: Defenders vs. attackers

Kevin Curran, a senior member of IEEE and professor of cybersecurity at Ulster University, describes the current landscape as a “high-stakes arms race” in which both cybersecurity teams and threat actors use AI tools to outmaneuver each other. “For defenders, AI is a valuable asset,” Curran explains in a media note. “Organizations have implemented generative AI and other automation tools to analyze vast amounts of data in real time and identify anomalies.”

However, the same technologies benefit attackers. “For threat actors, AI can streamline phishing attacks, automate the creation of malware and help scan networks for vulnerabilities,” Curran warns. The dual-use nature of AI creates what Johnston calls the “Defender's Dilemma.”

Google Cloud's AI initiatives aim to tilt these scales in favor of defenders. Johnston argued that “AI provides the best opportunity to upend the Defender's Dilemma, and tilt the scales of cyberspace to give defenders a decisive advantage over attackers.” The company's approach centers on what it describes as “countless use cases for AI in defense,” spanning vulnerability discovery, threat intelligence, secure code generation, and incident response.

Project Zero's Big Sleep: AI finding what humans miss

One of Google's most compelling examples of AI-powered defense is Project Zero's “Big Sleep” initiative, which uses large language models to identify vulnerabilities in real-world code. Johnston shared impressive metrics: “Big Sleep found a vulnerability in an open source library using generative AI tools, the first time we believe that a vulnerability was found by an AI service.”

The program's evolution demonstrates AI's growing capabilities. “Last month, we announced that we had found more than 20 vulnerabilities in different packages,” Johnston noted. “But today, when I looked at the Big Sleep dashboard, I found 47 vulnerabilities in August that have been found by this solution.”

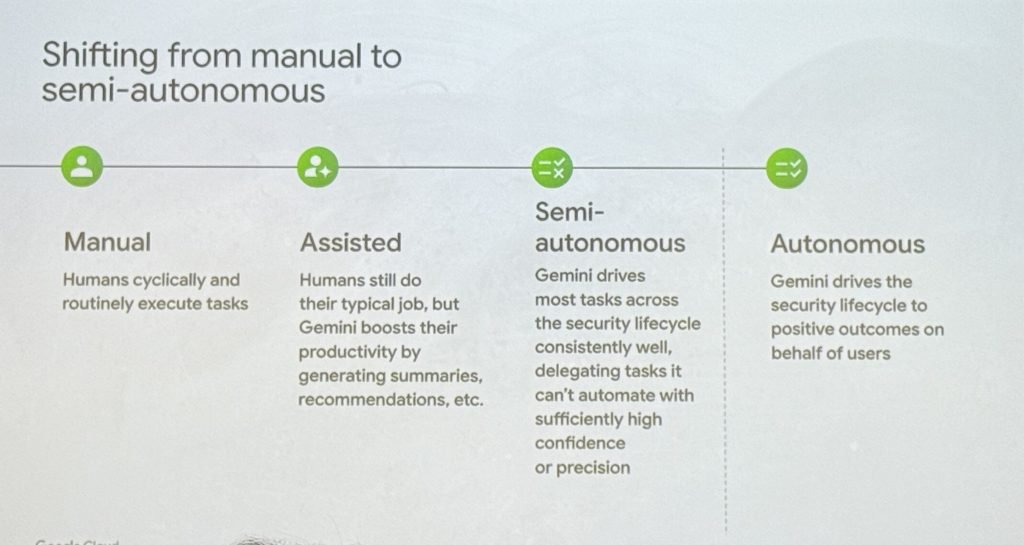

The progression from manual human analysis to AI-assisted discovery is what Johnston describes as a shift “from manual to semi-autonomous” security operations, in which “Gemini” handles most tasks in the security lifecycle well and delegates the tasks it cannot automate with a sufficiently high degree of confidence or accuracy.

Automation paradox: promise and risks

Google Cloud's roadmap envisions progress through four stages: manual, assisted, semi-autonomous, and autonomous security operations. In the semi-autonomous phase, AI systems would handle routine tasks while escalating complex decisions to human operators. The final, autonomous phase would see AI “drive the security lifecycle to positive outcomes on behalf of users.”
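The semi-autonomous stage described above amounts to a routing rule: automate what is routine and high-confidence, escalate the rest. The following is only a minimal sketch of that idea, not Google Cloud's implementation. The `Task` fields, the `route_task` function, and the 0.9 threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTOMATE = "automate"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class Task:
    name: str
    confidence: float  # hypothetical model confidence, from 0.0 to 1.0
    high_impact: bool  # hypothetical flag, e.g. isolating a production host


def route_task(task: Task, threshold: float = 0.9) -> Route:
    """Automate only routine, high-confidence tasks; escalate everything else."""
    if task.high_impact or task.confidence < threshold:
        return Route.ESCALATE
    return Route.AUTOMATE


# Illustrative tasks only; the names and scores are made up for this sketch.
tasks = [
    Task("close duplicate alert", 0.97, False),
    Task("classify phishing email", 0.72, False),
    Task("isolate production server", 0.95, True),
]
routes = {task.name: route_task(task) for task in tasks}
```

In this sketch a high-impact action is always escalated regardless of confidence, which is one way to keep a human in the loop for complex decisions.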

However, this automation introduces new vulnerabilities. When asked about the risks of over-reliance on AI systems, Johnston acknowledged the challenge: “There is the potential for this service to be attacked and manipulated. At the moment, when you see the tools these agents are connected to, there isn't a really good framework for verifying that this is the actual tool and that it hasn't been tampered with.”

Curran echoes this concern: “The risk to companies is that their security teams will become over-reliant on AI, potentially sidelining human judgment and leaving systems vulnerable to attacks. A human ‘copilot’ is still needed, and roles must be clearly defined.”

Real-world implementation: Controlling AI's unpredictable nature

Google Cloud's approach includes practical safeguards to address one of AI's most problematic characteristics: its tendency to generate irrelevant or inappropriate responses. Johnston illustrated this challenge with a concrete example of contextual mismatch that can create business risks.

“If you've got a retail store, you shouldn't be giving medical advice instead,” Johnston explained, describing how AI systems can drift unexpectedly into unrelated domains. “Sometimes these tools can do that.” This unpredictability is a significant liability for companies deploying customer-facing AI systems, where off-topic responses could confuse customers, damage brand reputation, or even create legal exposure.

Google's Model Armor technology addresses this by acting as an intelligent filter layer. “Having filters and using our capabilities to put health checks on those responses allows an organization to gain confidence,” Johnston noted. The system screens AI outputs for personally identifiable information, filters out content inappropriate to the business context, and blocks responses that could be “off-brand” for the organization's intended use case.
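The general idea of a filter layer between a model and the customer can be sketched in a few lines. This is a simplified illustration only and does not use or represent the Model Armor API. The regular expressions, the `BLOCKED_TOPICS` list, and the `screen_response` function are assumptions made for this example, and real PII detection is considerably more involved.

```python
import re

# Deliberately simple patterns, assumed for illustration; not production-grade PII detection.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical off-brand terms for a retail assistant.
BLOCKED_TOPICS = {"diagnosis", "dosage", "prescription"}

FALLBACK = "Sorry, I can only help with questions about our store."


def screen_response(text: str) -> tuple[bool, str]:
    """Return (allowed, text); blocked responses are replaced by a fallback message."""
    lowered = text.lower()
    if any(term in lowered for term in BLOCKED_TOPICS):
        return False, FALLBACK
    redacted = EMAIL.sub("[REDACTED EMAIL]", text)
    redacted = PHONE.sub("[REDACTED PHONE]", redacted)
    return True, redacted


allowed, safe_text = screen_response(
    "Your order ships today. Contact jane.doe@example.com or +65 6123 4567."
)
blocked, fallback_text = screen_response("The recommended dosage is two tablets.")
```

A keyword list is a crude stand-in for topic classification; it is used here only to show where such a check would sit in the response path.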

The company is also addressing growing concern about shadow AI deployment. Organizations are discovering hundreds of unauthorized AI tools on their networks, creating significant security gaps. Google's sensitive data protection technologies attempt to address this by scanning across multiple cloud services and on-premises systems.

The scale challenge: Budget constraints vs. growing threats

Johnston identified budget constraints as the main challenge facing CISOs in Asia Pacific, arriving precisely when organizations face escalating cyber threats. The paradox is stark: as attack volumes increase, organizations lack the resources to respond adequately.

“We look at the statistics and say, objectively, we're seeing more noise. It may not be super sophisticated, but more noise means more overhead, and that costs more to deal with,” Johnston noted. The increase in attack frequency, even when individual attacks are not necessarily more advanced, creates a resource drain that many organizations cannot sustain.

The financial pressure compounds an already complex security landscape. “They are looking for partners who can help accelerate this without having to hire 10 more staff or get larger budgets,” Johnston explained, describing how security leaders face mounting pressure to do more with existing resources as threats multiply.

Critical questions remain

Despite the promise of Google Cloud's AI capabilities, several important questions remain. When pressed on whether defenders are actually winning this arms race, Johnston acknowledged: “We haven't seen novel attacks using AI yet,” but noted that attackers are using AI to scale existing attack methods and create “a wide range of opportunities in some aspects of the attack.”

The effectiveness claims also require scrutiny. While Johnston cited a 50% improvement in the speed of writing incident reports, he admitted that accuracy remains a challenge: “There are certainly mistakes. But humans make mistakes as well.” The acknowledgment highlights the ongoing limitations of current AI security implementations.

Looking forward: Post-quantum preparations

Beyond current AI implementations, Google Cloud is already preparing for the next paradigm shift. Johnston revealed that the company “has already deployed post-quantum cryptography between our data centers at scale,” positioning itself against future quantum computing threats that could render current encryption obsolete.

The verdict: Cautious optimism required

The integration of AI into cybersecurity represents both an unprecedented opportunity and a significant risk. While Google Cloud's AI technologies show real potential in vulnerability detection, threat analysis, and automated response, the same technologies empower attackers with improved capabilities for reconnaissance, social engineering, and evasion.

Curran's assessment offers a balanced perspective: “Given how quickly the technology has evolved, organizations will have to adopt a more comprehensive and proactive cybersecurity policy if they want to stay ahead.”

The success of AI-powered cybersecurity ultimately depends not on the technology itself, but on how thoughtfully organizations implement these tools while maintaining human oversight and addressing basic security hygiene. As Johnston concluded, “We should adopt these in low-risk approaches,” stressing the need for measured implementation rather than wholesale automation.

The AI revolution in cybersecurity is underway, but victory will belong to those who can balance innovation with prudent risk management, not to those who simply deploy the most advanced algorithms.

See also: Google Cloud unveils AI ally for security teams

AI News is supported by TechForge Media.

2025-08-28 11:02:00