High-level visual representations in the human brain are aligned with large language models

Kanwisher, N. Functional specificity in the human brain: a window into the functional architecture of the mind. Proc. Natl Acad. Sci. USA 107, 11163–11170 (2010).

Konkle, T. & Oliva, A. A real-world size organization of object responses in occipitotemporal cortex. Neuron 74, 1114–1124 (2012).

Bao, P., She, L., McGill, M. & Tsao, D. Y. A map of object space in primate inferotemporal cortex. Nature 583, 103–108 (2020).

Kriegeskorte, N. et al. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron 60, 1126–1141 (2008).

Cichy, R. M., Kriegeskorte, N., Jozwik, K. M., van den Bosch, J. J. F. & Charest, I. The spatiotemporal neural dynamics underlying perceived similarity for real-world objects. NeuroImage 194, 12–24 (2019).

Kriegeskorte, N. Deep neural networks: a new framework for modeling biological vision and brain information processing. Annu. Rev. Vis. Sci. 1, 417–446 (2015).

Kriegeskorte, N. & Douglas, P. K. Cognitive computational neuroscience. Nat. Neurosci. 21, 1148–1160 (2018).

DiCarlo, J. J., Zoccolan, D. & Rust, N. C. How does the brain solve visual object recognition? Neuron 73, 415–434 (2012).

Bracci, S. & Op de Beeck, H. P. Understanding human object vision: a picture is worth a thousand representations. Annu. Rev. Psychol. 74, 113–135 (2023).

Doerig, A. et al. The neuroconnectionist research programme. Nat. Rev. Neurosci. 24, 431–450 (2023).

Richards, B. A. et al. A deep learning framework for neuroscience. Nat. Neurosci. 22, 1761–1770 (2019).

Yamins, D. L. K. et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc. Natl Acad. Sci. USA 111, 8619–8624 (2014).

Khaligh-Razavi, S.-M. & Kriegeskorte, N. Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Comput. Biol. 10, e1003915 (2014).

Güçlü, U. & van Gerven, M. A. J. Deep neural networks reveal a gradient in the complexity of neural representations across the ventral stream. J. Neurosci. 35, 10005–10014 (2015).

Brandman, T. & Peelen, M. V. Interaction between scene and object processing revealed by human fMRI and MEG decoding. J. Neurosci. 37, 7700–7710 (2017).

Sadeghi, Z., McClelland, J. L. & Hoffman, P. You shall know an object by the company it keeps: an investigation of semantic representations derived from object co-occurrence in visual scenes. Neuropsychologia 76, 52–61 (2015).

Bonner, M. F. & Epstein, R. A. Object representations in the human brain reflect the co-occurrence statistics of vision and language. Nat. Commun. 12, 4081 (2021).

Ackerman, C. M. & Courtney, S. M. Spatial relations and spatial locations are dissociated within prefrontal and parietal cortex. J. Neurophysiol. 108, 2419–2429 (2012).

Chafee, M. V., Averbeck, B. B. & Crowe, D. A. Representing spatial relationships in posterior parietal cortex: single neurons code object-referenced position. Cereb. Cortex 17, 2914–2932 (2007).

Graumann, M., Ciuffi, C., Dwivedi, K., Roig, G. & Cichy, R. M. The spatiotemporal neural dynamics of object location representations in the human brain. Nat. Hum. Behav. 6, 796–811 (2022).

Zhang, B. & Naya, Y. Medial prefrontal cortex represents the object-based cognitive map when remembering an egocentric target location. Cereb. Cortex 30, 5356–5371 (2020).

Bar, M. Visual objects in context. Nat. Rev. Neurosci. 5, 617–629 (2004).

Russell, B., Torralba, A., Liu, C., Fergus, R. & Freeman, W. Object recognition by scene alignment. Adv. Neural Inf. Process. Syst. 20 (2007).

Võ, M. L.-H., Boettcher, S. E. & Draschkow, D. Reading scenes: how scene grammar guides attention and aids perception in real-world environments. Curr. Opin. Psychol. 29, 205–210 (2019).

Kaiser, D., Quek, G. L., Cichy, R. M. & Peelen, M. V. Object vision in a structured world. Trends Cogn. Sci. 23, 672–685 (2019).

Võ, M. L.-H. The meaning and structure of scenes. Vis. Res. 181, 10–20 (2021).

Epstein, R. A. & Baker, C. I. Scene perception in the human brain. Annu. Rev. Vis. Sci. 5, 373–397 (2019).

Bartnik, C. G. & Groen, I. I. A. Visual perception in the human brain: how the brain perceives and understands real-world scenes. In Oxford Research Encyclopedia of Neuroscience (2023).

Epstein, R. A. & Kanwisher, N. A cortical representation of the local visual environment. Nature 392, 598–601 (1998).

Epstein, R., Harris, A., Stanley, D. & Kanwisher, N. The parahippocampal place area: recognition, navigation, or encoding? Neuron 23, 115–125 (1999).

Epstein, R. A. Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn. Sci. 12, 388–396 (2008).

Groen, I. I. A., Ghebreab, S., Prins, H., Lamme, V. A. F. & Scholte, H. S. From image statistics to scene gist: evoked neural activity reveals transition from low-level natural image structure to scene category. J. Neurosci. 33, 18814–18824 (2013).

Stansbury, D. E., Naselaris, T. & Gallant, J. L. Natural scene statistics account for the representation of scene categories in human visual cortex. Neuron 79, 1025–1034 (2013).

Groen, I. I. A. et al. Distinct contributions of functional and deep neural network features to representational similarity of scenes in human brain and behavior. eLife 7, e32962 (2018).

Brown, T. B. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

Cer, D. et al. Universal sentence encoder for English. In Proc. 2018 Conference on Empirical Methods in Natural Language Processing: System Demonstrations 169–174 (Association for Computational Linguistics, 2018).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. Preprint at https://doi.org/10.48550/arXiv.1810.04805 (2018).

Arora, S., Liang, Y. & Ma, T. A simple but tough-to-beat baseline for sentence embeddings. In International Conference on Learning Representations (2017).

Song, K., Tan, X., Qin, T., Lu, J. & Liu, T.-Y. MPNet: masked and permuted pre-training for language understanding. Adv. Neural Inf. Process. Syst. 33, 16857–16867 (2020).

Lu, J., Batra, D., Parikh, D. & Lee, S. ViLBERT: pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. Adv. Neural Inf. Process. Syst. 32 (2019).

Tan, H. & Bansal, M. LXMERT: learning cross-modality encoder representations from transformers. Preprint at https://doi.org/10.48550/arXiv.1908.07490 (2019).

Pramanick, S. et al. VoLTA: vision-language transformer with weakly-supervised local-feature alignment. Preprint at https://doi.org/10.48550/arXiv.2210.04135 (2022).

Radford, A. et al. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning 8748–8763 (PMLR, 2021).

Du, Y., Liu, Z., Li, J. & Zhao, W. X. A survey of vision-language pre-trained models. Preprint at https://doi.org/10.48550/arXiv.2202.10936 (2022).

Chen, F.-L. et al. VLP: a survey on vision-language pre-training. Mach. Intell. Res. 20, 38–56 (2023).

Allen, E. J. et al. A massive 7T fMRI dataset to bridge cognitive neuroscience and artificial intelligence. Nat. Neurosci. 25, 116–126 (2022).

Lin, T.-Y. et al. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014: 13th European Conference 740–755 (Springer, 2014).

Chen, X. et al. Microsoft COCO captions: data collection and evaluation server. Preprint at https://doi.org/10.48550/arXiv.1504.00325 (2015).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

Cer, D., Diab, M., Agirre, E., Lopez-Gazpio, I. & Specia, L. SemEval-2017 Task 1: semantic textual similarity multilingual and crosslingual focused evaluation. In Proc. 11th International Workshop on Semantic Evaluation (SemEval-2017) 1–14 (Association for Computational Linguistics, 2017).

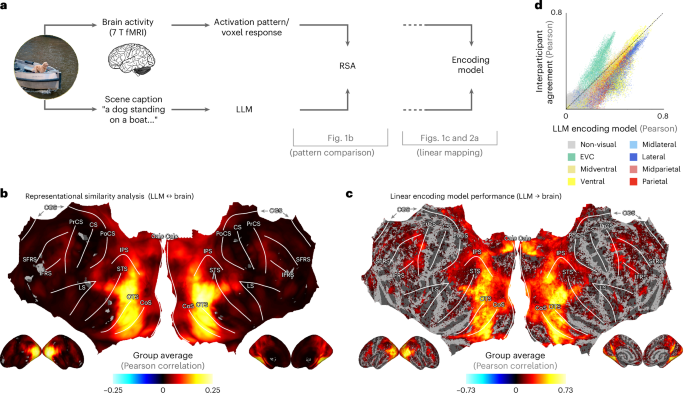

Kriegeskorte, N., Mur, M. & Bandettini, P. Representational similarity analysis—connecting the branches of systems neuroscience. Front. Syst. Neurosci. 2, 4 (2008).

Kriegeskorte, N. & Kievit, R. A. Representational geometry: integrating cognition, computation, and the brain. Trends Cogn. Sci. 17, 401–412 (2013).

Nili, H. et al. A toolbox for representational similarity analysis. PLoS Comput. Biol. 10, e1003553 (2014).

Rokem, A. & Kay, K. Fractional ridge regression: a fast, interpretable reparameterization of ridge regression. Gigascience 9, giaa133 (2020).

Pennock, I. M. L. et al. Color-biased regions in the ventral visual pathway are food selective. Curr. Biol. 33, 134–146.e4 (2023).

Kay, K. N., Naselaris, T., Prenger, R. J. & Gallant, J. L. Identifying natural images from human brain activity. Nature 452, 352–355 (2008).

Sharma, P., Ding, N., Goodman, S. & Soricut, R. Conceptual captions: a cleaned, hypernymed, image alt-text dataset for automatic image captioning. In Proc. 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (eds Gurevych, I. & Miyao, Y.) 2556–2565 (Association for Computational Linguistics, 2018).

Bojanowski, P., Grave, E., Joulin, A. & Mikolov, T. Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 5, 135–146 (2017).

Joulin, A., Grave, E., Bojanowski, P. & Mikolov, T. Bag of tricks for efficient text classification. Preprint at https://doi.org/10.48550/arXiv.1607.01759 (2016).

Pennington, J., Socher, R. & Manning, C. GloVe: global vectors for word representation. In Proc. 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (eds Moschitti, A., Pang, B. & Daelemans, W.) 1532–1543 (Association for Computational Linguistics, 2014).

Kaplan, J. et al. Scaling laws for neural language models. Preprint at https://doi.org/10.48550/arXiv.2001.08361 (2020).

Hernandez, D., Kaplan, J., Henighan, T. & McCandlish, S. Scaling laws for transfer. Preprint at https://doi.org/10.48550/arXiv.2102.01293 (2021).

Mehrer, J., Spoerer, C. J., Jones, E. C., Kriegeskorte, N. & Kietzmann, T. C. An ecologically motivated image dataset for deep learning yields better models of human vision. Proc. Natl Acad. Sci. USA 118, e2011417118 (2021).

Kietzmann, T. C., McClure, P. & Kriegeskorte, N. Deep neural networks in computational neuroscience. In Oxford Research Encyclopedia of Neuroscience (2019).

Konkle, T. & Alvarez, G. A. A self-supervised domain-general learning framework for human ventral stream representation. Nat. Commun. 13, 491 (2022).

Zhuang, C. et al. Unsupervised neural network models of the ventral visual stream. Proc. Natl Acad. Sci. USA 118, e2014196118 (2021).

Spoerer, C. J., Kietzmann, T. C., Mehrer, J., Charest, I. & Kriegeskorte, N. Recurrent neural networks can explain flexible trading of speed and accuracy in biological vision. PLoS Comput. Biol. 16, e1008215 (2020).

Mehrer, J., Spoerer, C. J., Kriegeskorte, N. & Kietzmann, T. C. Individual differences among deep neural network models. Nat. Commun. 11, 5725 (2020).

Hong, H., Yamins, D. L. K., Majaj, N. J. & DiCarlo, J. J. Explicit information for category-orthogonal object properties increases along the ventral stream. Nat. Neurosci. 19, 613–622 (2016).

Conwell, C., Prince, J. S., Kay, K. N., Alvarez, G. A. & Konkle, T. A large-scale examination of inductive biases shaping high-level visual representation in brains and machines. Nat. Commun. 15, 9383 (2024).

Han, Y., Poggio, T. & Cheung, B. System identification of neural systems: if we got it right, would we know? In International Conference on Machine Learning 12430–12444 (PMLR, 2023).

Storrs, K. R., Kietzmann, T. C., Walther, A., Mehrer, J. & Kriegeskorte, N. Diverse deep neural networks all predict human inferior temporal cortex well, after training and fitting. J. Cogn. Neurosci. 33, 2044–2064 (2021).

Bo, Y., Soni, A., Srivastava, S. & Khosla, M. Evaluating representational similarity measures from the lens of functional correspondence. Preprint at https://doi.org/10.48550/arXiv.2411.14633 (2024).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Ungerleider, L. G. & Mishkin, M. in Analysis of Visual Behavior (eds Goodale, M. et al.) Ch. 18, 549 (MIT Press, 1982).

Goodale, M. A. & Milner, A. D. Separate visual pathways for perception and action. Trends Neurosci. 15, 20–25 (1992).

Tanaka, K. Inferotemporal cortex and object vision. Annu. Rev. Neurosci. 19, 109–139 (1996).

Ishai, A., Ungerleider, L. G., Martin, A., Schouten, J. L. & Haxby, J. V. Distributed representation of objects in the human ventral visual pathway. Proc. Natl Acad. Sci. USA 96, 9379–9384 (1999).

Conwell, C., Prince, J. S., Kay, K. N., Alvarez, G. A. & Konkle, T. What can 1.8 billion regressions tell us about the pressures shaping high-level visual representation in brains and machines? Nat. Commun. 15, 9383 (2024).

Schrimpf, M. et al. Brain-Score: which artificial neural network for object recognition is most brain-like? Preprint at bioRxiv https://doi.org/10.1101/407007 (2018).

Russakovsky, O. et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115, 211–252 (2015).

Zhou, B., Lapedriza, A., Khosla, A., Oliva, A. & Torralba, A. Places: a 10 million image database for scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 40, 1452–1464 (2018).

Zamir, A. et al. Taskonomy: disentangling task transfer learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 3712–3722 (2018).

Mahajan, D. et al. Exploring the limits of weakly supervised pretraining. In Proc. European Conference on Computer Vision 181–196 (2018).

Chen, T., Kornblith, S., Norouzi, M. & Hinton, G. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning 1597–1607 (PMLR, 2020).

Ratan Murty, N. A., Bashivan, P., Abate, A., DiCarlo, J. J. & Kanwisher, N. Computational models of category-selective brain regions enable high-throughput tests of selectivity. Nat. Commun. 12, 5540 (2021).

Güçlü, U. & van Gerven, M. A. J. Semantic vector space models predict neural responses to complex visual stimuli. In International Conference on Machine Learning 1597–1607 (PMLR, 2015).

Frisby, S. L., Halai, A. D., Cox, C. R., Lambon Ralph, M. A. & Rogers, T. T. Decoding semantic representations in mind and brain. Trends Cogn. Sci. 27, 258–281 (2023).

Greene, M. R., Baldassano, C., Esteva, A., Beck, D. M. & Fei-Fei, L. Visual scenes are categorized by function. J. Exp. Psychol. Gen. 145, 82–94 (2016).

Greene, M. R. Statistics of high-level scene context. Front. Psychol. 4, 777 (2013).

Henderson, J. M. & Ferreira, F. in The Interface of Language, Vision, and Action: Eye Movements and the Visual World (ed. Henderson, J. M.) Vol. 399, 1–58 (Psychology Press, 2004).

Greene, M. R. & Oliva, A. The briefest of glances: the time course of natural scene understanding. Psychol. Sci. 20, 464–472 (2009).

Malcolm, G. L. & Shomstein, S. Object-based attention in real-world scenes. J. Exp. Psychol. Gen. 144, 257–263 (2015).

Biederman, I. Perceiving real-world scenes. Science 177, 77–80 (1972).

Greene, M. R. Scene perception and understanding. In Oxford Research Encyclopedia of Psychology (2023).

Potter, M. C. Meaning in visual search. Science 187, 965–966 (1975).

Carlson, T. A., Simmons, R. A., Kriegeskorte, N. & Slevc, L. R. The emergence of semantic meaning in the ventral temporal pathway. J. Cogn. Neurosci. 26, 120–131 (2014).

Contier, O., Baker, C. I. & Hebart, M. N. Distributed representations of behaviorally-relevant object dimensions in the human visual system. Nat. Hum. Behav. 8, 2179–2193 (2024).

Marblestone, A. H., Wayne, G. & Kording, K. P. Toward an integration of deep learning and neuroscience. Front. Comput. Neurosci. 10, 94 (2016).

Golan, T. et al. Deep neural networks are not a single hypothesis but a language for expressing computational hypotheses. Behav. Brain Sci. 46, e392 (2023).

Conwell, C. et al. Monkey see, model knew: large language models accurately predict human AND macaque visual brain activity. In UniReps: 2nd Edition of the Workshop on Unifying Representations in Neural Models (2024).

Popham, S. F. et al. Visual and linguistic semantic representations are aligned at the border of human visual cortex. Nat. Neurosci. 24, 1628–1636 (2021).

Wang, A. Y., Kay, K., Naselaris, T., Tarr, M. J. & Wehbe, L. Better models of human high-level visual cortex emerge from natural language supervision with a large and diverse dataset. Nat. Mach. Intell. 5, 1415–1426 (2023).

Tang, J., Du, M., Vo, V. A., Lal, V. & Huth, A. G. Brain encoding models based on multimodal transformers can transfer across language and vision. Adv. Neural Inf. Process. Syst. 36, 29654–29666 (2023).

Kay, K., Bonnen, K., Denison, R. N., Arcaro, M. J. & Barack, D. L. Tasks and their role in visual neuroscience. Neuron 111, 1697–1713 (2023).

Çukur, T., Nishimoto, S., Huth, A. G. & Gallant, J. L. Attention during natural vision warps semantic representation across the human brain. Nat. Neurosci. 16, 763–770 (2013).

Goldstein, A. et al. Alignment of brain embeddings and artificial contextual embeddings in natural language points to common geometric patterns. Nat. Commun. 15, 2768 (2024).

Schrimpf, M. et al. The neural architecture of language: integrative modeling converges on predictive processing. Proc. Natl Acad. Sci. USA 118, e2105646118 (2021).

Zada, Z. et al. A shared linguistic space for transmitting our thoughts from brain to brain in natural conversations. Neuron 112, 3211–3222 (2024).

Cadieu, C. F. et al. Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Comput. Biol. 10, e1003963 (2014).

Bird, S., Klein, E. & Loper, E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit (O'Reilly Media, 2009).

Kriegeskorte, N., Goebel, R. & Bandettini, P. A. Information-based functional brain mapping. Proc. Natl Acad. Sci. USA 103, 3863–3868 (2006).

Haynes, J. D. & Rees, G. Predicting the stream of consciousness from activity in human visual cortex. Curr. Biol. 15, 1301–1307 (2005).

Benjamini, Y. & Hochberg, Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B 57, 289–300 (1995).

Kietzmann, T. C. et al. Recurrence is required to capture the representational dynamics of the human visual system. Proc. Natl Acad. Sci. USA 116, 21854–21863 (2019).

Kubilius, J. et al. CORnet: modeling the neural mechanisms of core object recognition. Preprint at bioRxiv https://doi.org/10.1101/408385 (2018).

Muttenthaler, L. & Hebart, M. N. THINGSvision: a Python toolbox for streamlining the extraction of activations from deep neural networks. Front. Neuroinform. 15, 679838 (2021).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems (eds Pereira, F. et al.) Vol. 25 (Curran Associates, Inc., 2012).

timmdocs: documentation for Ross Wightman’s timm image model library. GitHub https://github.com/fastai/timmdocs (2025).

Doerig, A. Visuo_llm (v1.0). Zenodo https://doi.org/10.5281/ZENODO.15282176 (2025).

2025-08-07 00:00:00