How AlphaChip transformed computer chip design

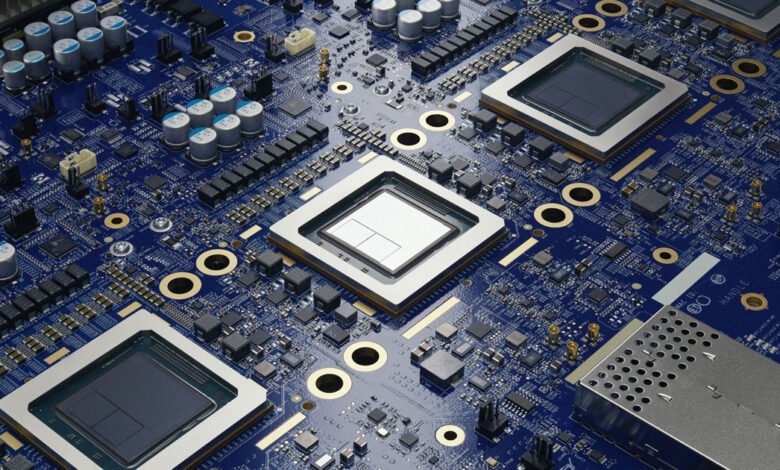

Our AI method has accelerated and optimized chip design, and its superhuman chip layouts are used in hardware around the world

In 2020, we released a preprint introducing our novel reinforcement learning method for designing chip layouts, which we later published in Nature and open sourced.

Today, we are publishing a Nature addendum that describes more about our method and its impact on the field of chip design. We are also releasing a pre-trained checkpoint, sharing the model weights and announcing its name: AlphaChip.

Computer chips have fueled remarkable progress in artificial intelligence (AI), and AlphaChip returns the favor by using AI to accelerate and optimize chip design. The method has been used to design superhuman chip layouts in the last three generations of Google's custom AI accelerator, the Tensor Processing Unit (TPU).

AlphaChip was one of the first reinforcement learning approaches used to solve a real-world engineering problem. It generates superhuman or comparable chip layouts in hours, rather than taking weeks or months of human effort, and its layouts are used in chips all over the world, from data centers to mobile phones.

“AlphaChip's groundbreaking AI approach revolutionizes a key phase of chip design.”

SR Tsai, Senior Vice President of MediaTek

How AlphaChip works

Designing a chip layout is not a simple task. Computer chips consist of many interconnected blocks, with layers of circuit components, all connected by incredibly thin wires. There are also many complex and intertwined design constraints that all have to be met at the same time. Because of this sheer complexity, chip designers have struggled to automate the chip floorplanning process for more than sixty years.

Similar to AlphaGo and AlphaZero, which learned to master the games of Go, chess and shogi, we built AlphaChip to approach chip floorplanning as a kind of game.

Starting from a blank grid, AlphaChip places one circuit component at a time until all the components are placed. It is then rewarded based on the quality of the final layout. A novel "edge-based" graph neural network allows AlphaChip to learn the relationships between interconnected chip components and to generalize across chips, letting AlphaChip improve with each layout it designs.
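To make the "game" framing concrete, here is a minimal sketch of one placement episode: components are placed one at a time on an empty grid, and a single reward arrives only once the layout is complete. Everything in it is an illustrative assumption rather than AlphaChip's actual code. The reward here is just the negative half-perimeter wirelength (HPWL), a common proxy for wire length, whereas the text above says only that the reward reflects layout quality. The names `hpwl`, `run_episode` and `random_policy` are hypothetical, and a uniform random policy stands in for the learned graph neural network.

```python
import random
from typing import Callable, Dict, List, Tuple

Cell = Tuple[int, int]
Placement = Dict[str, Cell]
# A policy picks a free cell for the next component (hypothetical interface).
Policy = Callable[[str, Placement, List[Cell], random.Random], Cell]


def hpwl(placement: Placement, nets: List[List[str]]) -> int:
    """Half-perimeter wirelength: for each net, bounding-box width plus height."""
    total = 0
    for net in nets:
        xs = [placement[c][0] for c in net]
        ys = [placement[c][1] for c in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def random_policy(comp: str, placement: Placement,
                  free: List[Cell], rng: random.Random) -> Cell:
    """Stand-in for a learned policy: choose any free cell uniformly at random."""
    return rng.choice(free)


def run_episode(components: List[str], nets: List[List[str]], grid_size: int,
                policy: Policy, rng: random.Random) -> Tuple[Placement, int]:
    """Place components one at a time; return the layout and its final reward."""
    if len(components) > grid_size * grid_size:
        raise ValueError("grid is too small to hold every component")
    free = [(x, y) for x in range(grid_size) for y in range(grid_size)]
    placement: Placement = {}
    for comp in components:
        cell = policy(comp, placement, free, rng)
        free.remove(cell)          # each cell holds at most one component
        placement[comp] = cell
    # The reward is computed only after the last component is placed.
    reward = -hpwl(placement, nets)
    return placement, reward


if __name__ == "__main__":
    comps = ["cpu", "cache", "dma", "io"]
    nets = [["cpu", "cache"], ["cache", "dma", "io"]]
    layout, reward = run_episode(comps, nets, 4, random_policy, random.Random(0))
    print(layout, reward)
```

In a reinforcement learning setup, many such episodes would be run and the policy updated to make high-reward placements more likely; the random policy above only shows the shape of the loop.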

Left: Animation showing AlphaChip placing the open-source Ariane RISC-V CPU, with no prior experience. Right: Animation showing AlphaChip placing the same block after having practiced on 20 TPU-related designs.

Using AI to design Google's AI accelerator chips

AlphaChip has generated superhuman chip layouts used in every generation of Google's TPU since its publication in 2020. These chips make it possible to massively scale up AI models based on Google's Transformer architecture.

TPUs lie at the heart of our powerful generative AI systems, from large language models, like Gemini, to image and video generators, Imagen and Veo. These AI accelerators also lie at the heart of Google's AI services and are available to external users via Google Cloud.

A row of TPU v5p AI accelerators in a Google data center.

To design TPU layouts, AlphaChip first practices on a diverse range of chip blocks from previous generations, such as on-chip and inter-chip network blocks, memory controllers, and data transport buffers. This process is called pre-training. Then we run AlphaChip on current TPU blocks to generate high-quality layouts. Unlike prior approaches, AlphaChip becomes better and faster as it solves more instances of the chip placement task, similar to how human experts do.

With each new generation of TPU, including our latest Trillium (6th generation), AlphaChip has designed better chip layouts and provided more of the overall floorplan, accelerating the design cycle and yielding higher-performance chips.

Bar graph showing the number of AlphaChip-designed chip blocks across three generations of Google's Tensor Processing Units (TPUs), including v5e, v5p and Trillium.

Bar graph showing AlphaChip's average wirelength reduction across three generations of Google's Tensor Processing Units (TPUs), compared to placements generated by the TPU physical design team.

AlphaChip's broader impact

AlphaChip's impact can be seen through its applications across Alphabet, the research community and the chip design industry. Beyond designing specialized AI accelerators like TPUs, AlphaChip has generated layouts for other chips across Alphabet, such as Google Axion Processors, our first Arm-based general-purpose data center CPUs.

External organizations are also adopting and building on AlphaChip. For example, MediaTek, one of the top chip design companies in the world, extended AlphaChip to accelerate development of its most advanced chips while improving power, performance and chip area.

AlphaChip has triggered an explosion of work on AI for chip design, and has been extended to other critical stages of chip design, such as logic synthesis and macro selection.

“AlphaChip has inspired an entirely new line of research on reinforcement learning for chip design, cutting across the design flow from logic synthesis to floorplanning, timing optimization and beyond.”

Professor Siddharth Garg, NYU Tandon School of Engineering

Creating the chips of the future

We believe AlphaChip has the potential to optimize every stage of the chip design cycle, from computer architecture to manufacturing, and to transform chip design for custom hardware found in everyday devices such as smartphones, medical equipment, agricultural sensors and more.

Future versions of AlphaChip are now in development, and we look forward to working with the community to continue revolutionizing this area and bring about a future in which chips are even faster, cheaper and more power-efficient.

Acknowledgements

We are very grateful to our amazing coauthors: Mustafa Yazgan, Joe Wenjie Jiang, Ebrahim Songhori, Shen Wang, Young-Joon Lee, Eric Johnson, Omkar Pathak, Azade Nazi, Jiwoo Pak, Andy Tong, Kavya Srinivasa and Jeff Dean.

We especially appreciate Joe Wenjie Jiang, Ebrahim Songhori, Young-Joon Lee, Roger Carpenter, and Sergio Guadarrama's continued efforts to land this production impact, Quoc V. Le for his research advice and mentorship, and our senior author Jeff Dean for his support and deep technical discussions.

We also want to thank Ed Chi, Zoubin Ghahramani, Koray Kavukcuoglu, Dave Patterson, and Chris Manning for all of their advice and support.

2024-09-26 14:08:00