How Does Claude Think? Anthropic’s Quest to Unlock AI’s Black Box

Large language models (LLMs) such as Claude have changed the way we use technology. They power tools like chatbots, help write essays, and even create poetry. But despite their amazing abilities, these models remain a mystery in many ways. People often call them a “black box” because we can see what they say, but not how they arrive at it. This lack of understanding creates problems, especially in important areas such as medicine or law, where errors or hidden biases can cause real harm.

Understanding how LLMs work is essential to building trust. If we cannot explain why a model gave a specific answer, it is hard to trust its outputs, especially in sensitive areas. Interpretability also helps in identifying and fixing biases or errors, and in ensuring that the models are safe and ethical. For example, if a model consistently favors certain viewpoints, knowing why can help developers correct it. This need for clarity is what drives research into making these models more transparent.

Anthropic, the company behind Claude, has been working to open this black box. They have made exciting progress in figuring out how LLMs think, and this article explores their breakthroughs in making Claude easier to understand.

Mapping Claude’s thoughts

In mid-2024, Anthropic’s team achieved an exciting breakthrough. They created a basic “map” of how Claude processes information. Using a technique called dictionary learning, they found millions of patterns in Claude’s “brain”, its neural network. Each pattern, or “feature”, connects to a specific idea. For example, some features help Claude spot cities, famous people, or coding errors. Others are linked to trickier topics, such as gender bias or secrecy.

The researchers discovered that these ideas are not isolated within individual neurons. Instead, they are spread across many neurons in Claude’s network, with each neuron contributing to several different ideas. This overlap is what made the ideas so hard to find in the first place. But by spotting these recurring patterns, Anthropic’s researchers began to decode how Claude organizes its thoughts.
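The overlap described above can be pictured with a very small toy. The sketch below is not Anthropic's method and involves no learning: it assumes a hand-written "dictionary" of three feature directions shared by just two neurons (the feature names, angles, and function names are all made up for illustration), and shows that an idea can still be read back out even though no single neuron is dedicated to it.

```python
import math

# Hypothetical toy: 2 neurons, 3 features. All names and values are illustrative.
FEATURES = ["city", "famous person", "coding error"]

# Each feature is a direction across BOTH neurons (unit vectors 120 degrees apart),
# so every neuron takes part in every feature.
DIRECTIONS = [
    (math.cos(math.radians(angle)), math.sin(math.radians(angle)))
    for angle in (15, 135, 255)
]

def encode(feature_index, strength=1.0):
    """Return the two neuron activations when a single feature is active."""
    dx, dy = DIRECTIONS[feature_index]
    return (strength * dx, strength * dy)

def decode(activation):
    """Score the activation against every feature direction and pick the best match."""
    scores = [activation[0] * dx + activation[1] * dy for dx, dy in DIRECTIONS]
    best = max(range(len(scores)), key=scores.__getitem__)
    return FEATURES[best], scores

activation = encode(FEATURES.index("coding error"), strength=2.0)
label, scores = decode(activation)
print(activation)  # both neurons are active for this one idea
print(label)       # the idea is recovered from the shared neurons
```

In real dictionary learning the directions are not written by hand; they are learned from large numbers of recorded activations, and there are millions of them rather than three.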

Tracing Claude’s reasoning

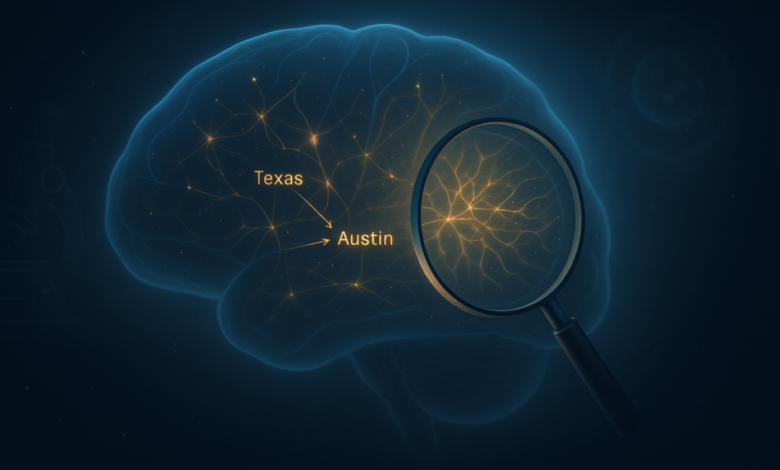

Next, Anthropic wanted to see how Claude uses these ideas to make decisions. They recently built a tool called attribution graphs, which works like a step-by-step guide to Claude’s thinking. Each point on the graph is an idea that lights up in Claude’s mind, and the arrows show how one idea flows into the next. This graph lets researchers track how Claude turns a question into an answer.

To better understand how attribution graphs work, consider this example: when asked, “What is the capital of the state with Dallas?” Claude must realize that Dallas is in Texas, then recall that the capital of Texas is Austin. The attribution graph showed this exact process: one part of Claude flagged “Texas”, which led another part to pick “Austin”. The team even tested it by modifying the “Texas” part, and sure enough, the answer changed. This indicates that Claude is not just guessing. It works through the problem, and now we can watch it happen.
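The two-step chain and the intervention test can be mimicked in a few lines of ordinary code. This is only an analogy under stated assumptions, not how attribution graphs are computed: the lookup tables, the function name, and the `patch_state` parameter are hypothetical, and California is used purely as an illustrative replacement for the intermediate step.

```python
# Hypothetical lookup tables standing in for knowledge stored inside the model.
CITY_TO_STATE = {"Dallas": "Texas", "Oakland": "California"}
STATE_TO_CAPITAL = {"Texas": "Austin", "California": "Sacramento"}

def capital_of_state_containing(city, patch_state=None):
    """Answer in two hops and return the intermediate steps alongside the answer.

    If patch_state is given, it overwrites the intermediate "state" step,
    mimicking an intervention on that part of the chain.
    """
    state = CITY_TO_STATE[city]        # hop 1: city -> state
    if patch_state is not None:
        state = patch_state            # intervention on the intermediate idea
    capital = STATE_TO_CAPITAL[state]  # hop 2: state -> capital
    trace = [("city", city), ("state", state), ("capital", capital)]
    return capital, trace

answer, trace = capital_of_state_containing("Dallas")
patched_answer, patched_trace = capital_of_state_containing(
    "Dallas", patch_state="California"
)
print(answer)          # Austin
print(patched_answer)  # Sacramento
```

The point of the comparison is the same as in the experiment: if changing the middle step changes the final answer, the middle step was actually being used.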

Why this matters: an analogy from the biological sciences

To see why this matters, it helps to think about some major developments in the biological sciences. Just as the invention of the microscope allowed scientists to discover cells, the hidden building blocks of life, these interpretability tools allow AI researchers to discover the building blocks of thought inside models. And just as mapping neural circuits in the brain or sequencing the genome paved the way for breakthroughs in medicine, mapping Claude’s inner workings could pave the way for more reliable and controllable machine intelligence. These interpretability tools could play a vital role in helping us look into the thinking process of AI models.

Challenges

Even with all this progress, we are still far from fully understanding LLMs like Claude. Currently, attribution graphs can explain only about one in four of Claude’s decisions. Although the map of its features is impressive, it covers only part of what is going on inside Claude’s brain. With billions of parameters, Claude and other LLMs perform countless calculations for every task. Tracing each one to see how an answer forms is like trying to follow every neuron that fires in a human brain during a single thought.

There is also the challenge of “hallucination”. Sometimes AI models generate responses that sound plausible but are actually wrong, such as stating an incorrect fact with full confidence. This happens because the models rely on patterns in their training data rather than a real understanding of the world. Understanding why they drift into fabrication remains a difficult problem, and it highlights the gaps in our understanding of their inner workings.

Bias is another significant obstacle. AI models learn from vast datasets drawn from the internet, which inherently carry human biases: stereotypes, prejudices, and other societal flaws. If Claude picks up these biases from its training, it may reflect them in its answers. Unpacking where these biases come from and how they affect the model’s reasoning is a complex challenge that requires both technical solutions and careful consideration of data and ethics.

The bottom line

Anthropic’s work on making large language models (LLMs) like Claude more understandable is an important step forward in AI transparency. By revealing how Claude processes information and makes decisions, they are addressing key concerns about AI accountability. This progress opens the door to the safe integration of LLMs in critical sectors such as healthcare and law, where trust and ethics are vital.

As methods for improving interpretability develop, industries that have been cautious about adopting AI can now reconsider. Transparent models such as Claude offer a clear path for the future of AI: machines that not only replicate human intelligence but also explain their reasoning.

2025-04-03 05:00:00