How to Build Portable, In-Database Feature Engineering Pipelines with Ibis Using Lazy Python APIs and DuckDB Execution

In this tutorial, we explain how to use Ibis to build a portable, in-database feature engineering pipeline that looks and feels like Pandas but executes entirely inside the database. We show how to connect to DuckDB, register data safely inside the backend, and define complex transformations using window functions and aggregations without pulling the raw data into local memory. By keeping all transformations lazy and backend-agnostic, we show how to write analytics code once in Python and rely on Ibis to translate it into efficient SQL.

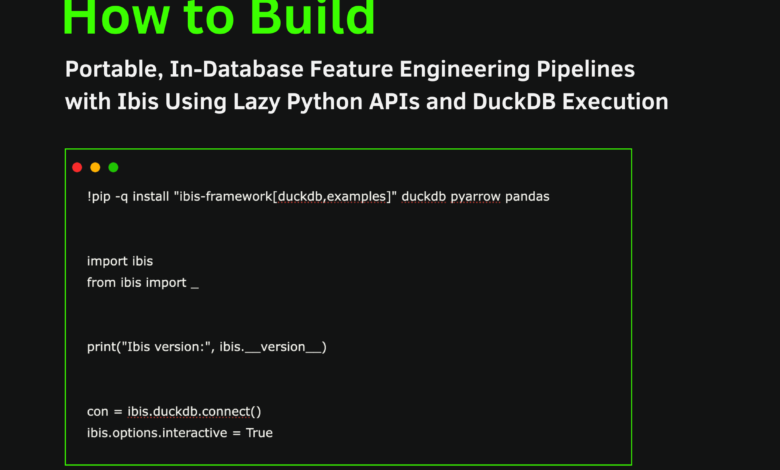

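    # Install Ibis with the DuckDB backend and example datasets (notebook shell command)
    !pip -q install "ibis-framework[duckdb,examples]" duckdb pyarrow pandas

    import ibis
    from ibis import _  # deferred placeholder for column references

    print("Ibis version:", ibis.__version__)

    # Open an in-memory DuckDB database
    con = ibis.duckdb.connect()

    # Show a preview of the result whenever an expression is displayed in the notebook
    ibis.options.interactive = True

We install the required libraries and configure the Ibis environment. We create a DuckDB connection and enable interactive mode: expressions are still defined lazily and executed by the backend, and a preview of the result is shown whenever one is displayed in the notebook.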

    # Load the example dataset through the DuckDB connection when this Ibis version supports it
    try:
        base_expr = ibis.examples.penguins.fetch(backend=con)
    except TypeError:
        base_expr = ibis.examples.penguins.fetch()

    # Register the data as a named table unless it already exists in the catalog
    if "penguins" not in con.list_tables():
        try:
            con.create_table("penguins", base_expr, overwrite=True)
        except Exception:
            # Fall back to materializing locally and uploading the result
            con.create_table("penguins", base_expr.execute(), overwrite=True)

    t = con.table("penguins")
    print(t.schema())

We load the Penguins dataset and explicitly register it in the DuckDB catalog so that it is available for SQL execution. We then inspect the table schema and confirm that the data now lives inside the database rather than in local memory.

    def penguin_feature_pipeline(penguins):
        # Row-level derived features
        base = penguins.mutate(
            bill_ratio=_.bill_length_mm / _.bill_depth_mm,
            is_male=(_.sex == "male").ifelse(1, 0),
        )

        # Drop rows with missing measurements or grouping keys
        cleaned = base.filter(
            _.bill_length_mm.notnull()
            & _.bill_depth_mm.notnull()
            & _.body_mass_g.notnull()
            & _.flipper_length_mm.notnull()
            & _.species.notnull()
            & _.island.notnull()
            & _.year.notnull()
        )

        # All rows of a species
        w_species = ibis.window(group_by=[cleaned.species])

        # Current row plus the two preceding rows per island, ordered by year
        w_island_year = ibis.window(
            group_by=[cleaned.island],
            order_by=[cleaned.year],
            preceding=2,
            following=0,
        )

        # Window features computed in the database
        feat = cleaned.mutate(
            species_avg_mass=cleaned.body_mass_g.mean().over(w_species),
            species_std_mass=cleaned.body_mass_g.std().over(w_species),
            mass_z=(
                cleaned.body_mass_g
                - cleaned.body_mass_g.mean().over(w_species)
            ) / cleaned.body_mass_g.std().over(w_species),
            island_mass_rank=cleaned.body_mass_g.rank().over(
                ibis.window(group_by=[cleaned.island])
            ),
            rolling_3yr_island_avg_mass=cleaned.body_mass_g.mean().over(
                w_island_year
            ),
        )

        # Aggregate to one row per species, island, and year
        return feat.group_by(["species", "island", "year"]).agg(
            n=feat.count(),
            avg_mass=feat.body_mass_g.mean(),
            avg_flipper=feat.flipper_length_mm.mean(),
            avg_bill_ratio=feat.bill_ratio.mean(),
            avg_mass_z=feat.mass_z.mean(),
            avg_rolling_3yr_mass=feat.rolling_3yr_island_avg_mass.mean(),
            pct_male=feat.is_male.mean(),
        ).order_by(["species", "island", "year"])

We define a reusable feature engineering pipeline using pure Ibis expressions. We compute derived features, apply data cleaning, and use window functions and grouped aggregations to create advanced, database-native features while keeping the entire pipeline lazy.
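To clarify what the two window definitions compute, the following plain-Python sketch reproduces their arithmetic on toy values. The helper names `trailing_mean` and `group_z_scores` are hypothetical and not part of Ibis; the sketch assumes the rolling frame counts rows (current row plus two preceding) and that the standard deviation is the sample standard deviation, which is the Ibis default for `std()`.

```python
from statistics import mean, stdev


def trailing_mean(values, preceding=2):
    """Mean over the current row and up to `preceding` earlier rows."""
    return [
        mean(values[max(0, i - preceding): i + 1])
        for i in range(len(values))
    ]


def group_z_scores(values):
    """(x - group mean) / group sample standard deviation."""
    mu, sigma = mean(values), stdev(values)
    return [(v - mu) / sigma for v in values]


# Hypothetical body masses for one island, already sorted by year.
masses = [10.0, 20.0, 30.0, 40.0]
rolling = trailing_mean(masses)       # [10.0, 15.0, 20.0, 30.0]
z = group_z_scores([1.0, 2.0, 3.0])   # [-1.0, 0.0, 1.0]
print(rolling, z)
```

Because the frame counts rows rather than calendar years, a feature such as `rolling_3yr_island_avg_mass` equals a three-year average only when each island contributes one row per year; with several penguins per island and year it averages three adjacent rows instead.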

    # Build the lazy feature expression from the registered table
    features = penguin_feature_pipeline(t)

    # Inspect the SQL that Ibis generates for DuckDB
    print(con.compile(features))

    # Execute in DuckDB and fetch only the aggregated result
    try:
        df = features.to_pandas()
    except Exception:
        df = features.execute()

    display(df.head())

We call the feature pipeline on the registered table and compile it to DuckDB SQL to verify that all transformations are pushed down to the database. We then execute the pipeline and bring back only the final aggregated result for inspection.

    # Materialize the features as a table inside DuckDB
    con.create_table("penguin_features", features, overwrite=True)
    feat_tbl = con.table("penguin_features")

    try:
        preview = feat_tbl.limit(10).to_pandas()
    except Exception:
        preview = feat_tbl.limit(10).execute()

    display(preview)

    # Export the table to Parquet (the /content path assumes a Colab-style environment)
    out_path = "/content/penguin_features.parquet"
    con.raw_sql(f"COPY penguin_features TO '{out_path}' (FORMAT PARQUET);")
    print(out_path)

We materialize the engineered features as a table directly inside DuckDB and query it lazily to verify the result. We also export the results to a Parquet file, showing how features computed in the database can be handed off to downstream analytics or machine learning workflows.

In conclusion, we built, compiled, and executed an advanced feature engineering workflow entirely inside DuckDB using Ibis. We showed how to inspect the generated SQL, materialize the results directly in the database, and export them for downstream use while maintaining portability across analytical backends. This approach reinforces the core idea behind Ibis: we keep computation close to the data, reduce unnecessary data movement, and maintain a single, reusable Python codebase that scales from local experiments to production databases.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of AI for social good. His most recent endeavor is the launch of the AI media platform, Marktechpost, which features in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand by a broad audience. The platform has more than 2 million views per month, which shows its popularity among the masses.

2026-01-09 14:50:00