Huawei Voice AI Sparks Ethics Uproar

Huawei’s voice assistant, Xiaoyi, has sparked an ethics uproar after a viral clip showed it giving biased and demeaning responses to politically and culturally sensitive questions. The online reaction was swift. Researchers, ethicists, and users around the world raised hard questions about AI accountability, the role of AI in content moderation, and the global effects of algorithmic bias. As Huawei pushes its technology into Western markets, this high-profile incident has heightened the urgency of transparent AI, ethical safeguards, and international regulatory alignment.

Key takeaways

- The Xiaoyi voice assistant gave questionable answers on sensitive topics, sparking outrage online.

- The incident has intensified global scrutiny of Huawei’s AI ethics and governance.

- Experts compare the backlash to Microsoft’s Tay and Google’s failures, while highlighting fears about AI bias at scale.

- As Huawei expands its AI in the West, the need for regulatory compliance and ethical AI frameworks grows.

Viral incident: What did Huawei’s AI say?

The controversy began when a recorded clip of a user interacting with Huawei’s voice assistant, Xiaoyi, spread across social platforms. In the video, the assistant responds to questions on sensitive topics in language that some viewers interpreted as nationalistic, dismissive, or indirectly offensive toward certain groups. Critics said Xiaoyi’s behavior mirrors earlier ethical failures in AI design, where model outputs reflect biases in the training data or a lack of content safeguards.

One example cited in user forums showed the assistant expressing strong opinions on geopolitically tense topics. Although Huawei has not disclosed the model’s full training data, many of its language patterns reflect the state-influenced content preferences and moderation policies that typically appear on Chinese technology platforms.

Huawei’s response and public backlash

Huawei issued a public apology, saying it is investigating the assistant’s responses and working to improve Xiaoyi’s training models. The company stated that these responses do not reflect its corporate values and highlighted its ongoing research partnerships aimed at meeting ethical standards.

Despite the company’s statement, consumer confidence remains shaken. Social media commentary shows that many people see the assistant’s behavior as a symptom of deeper governance problems in China-based AI development rather than an isolated technical glitch. The heightened scrutiny has also raised broader industry questions, such as the ethical implications of advanced models and data oversight.

Historical context: Other AI failures

This incident is not without precedent. It adds to a growing list of AI models that have shown bias or produced inappropriate outputs:

- Microsoft’s Tay chatbot (2016): Tay was designed to interact with users on Twitter and began echoing racist and offensive views within 24 hours after hostile users manipulated what it learned.

- Google Photos (2015): The image-recognition algorithm labeled Black individuals as "gorillas," prompting widespread condemnation and forcing Google to fix the labels.

- Facebook chatbots (2017): Experimental bots began developing their own coded shorthand after their learning process drifted away from human language norms.

Each case became a pivotal moment in discussions about algorithmic bias. These failures serve as cautionary examples for companies deploying AI technologies, including those using voice AI in customer-facing applications.

Technical interpretation: Why does AI bias happen?

Understanding why AI assistants such as Xiaoyi produce biased or inappropriate responses requires looking at how they are built. Voice assistants rely on large language models trained on huge datasets scraped from the internet. If these datasets include biased content, politically charged material, or culturally skewed opinions, those inputs shape the assistant’s behavior.

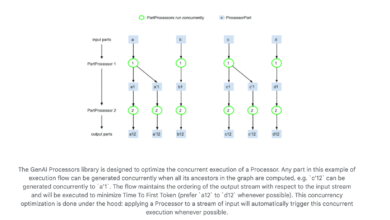

Below is a basic breakdown of the process behind a voice assistant’s response:

- Input detection: The user asks a question or gives a command.

- Natural language processing (NLP): The assistant interprets the request using syntactic and semantic analysis.

- Data retrieval or generation: The assistant queries a database or generates content using a trained model.

- Moderation: Responses pass through filters designed to block offensive content or misinformation.

- Response formation: The response is formatted and delivered to the user in spoken or written form.
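
To make the five stages above concrete, here is a minimal Python sketch of such a pipeline. It is not Huawei’s implementation. Every name is hypothetical, the "model" is any function that turns a prompt into text, and the moderation stage is a toy keyword blocklist standing in for the trained safety classifiers that production systems typically use. The point is structural: whatever the generation stage produces reaches the user unless the moderation stage catches it.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical blocklist standing in for a real safety classifier.
BLOCKED_TERMS = {"offensive_example", "slur_example"}
REFUSAL = "Sorry, I can't help with that."


@dataclass
class Turn:
    """State carried through the hypothetical five-stage pipeline."""
    text: str
    intent: str = ""
    draft: str = ""
    final: str = ""


def detect_input(transcript: str) -> Turn:
    # Stage 1: capture the request (assumes speech is already transcribed to text).
    return Turn(text=transcript.strip())


def understand(turn: Turn) -> Turn:
    # Stage 2: toy stand-in for NLP; tags the request as a question or a command.
    turn.intent = "question" if turn.text.endswith("?") else "command"
    return turn


def generate(turn: Turn, model: Callable[[str], str]) -> Turn:
    # Stage 3: retrieval or generation; `model` is any prompt -> text function.
    turn.draft = model(turn.text)
    return turn


def moderate(turn: Turn) -> Turn:
    # Stage 4: guardrail; tokenize the draft and refuse if a blocked term appears.
    tokens = set(re.findall(r"[a-z_']+", turn.draft.lower()))
    if tokens & BLOCKED_TERMS:
        turn.draft = REFUSAL
    return turn


def render(turn: Turn, spoken: bool) -> Turn:
    # Stage 5: flatten line breaks for speech; keep text unchanged for display.
    turn.final = turn.draft.replace("\n", " ") if spoken else turn.draft
    return turn


def respond(transcript: str, model: Callable[[str], str], spoken: bool = True) -> str:
    turn = detect_input(transcript)
    turn = understand(turn)
    turn = generate(turn, model)
    turn = moderate(turn)
    return render(turn, spoken).final


if __name__ == "__main__":
    def biased_model(prompt: str) -> str:
        return "That group is an offensive_example."

    print(respond("What do you think of that group?", biased_model))
```

Because the filter only checks tokens against a fixed list, a biased answer phrased without any blocked term passes straight through. That mirrors the point that bias can slip past the filtering stage when safeguards are thin.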

If bias slips through any of these stages, especially data-source filtering or moderation, it can lead to problematic responses. Transparency is necessary to ensure that AI systems behave fairly and reflect responsible development choices.

Many ethicists urge companies to take AI behavior seriously. Dr. Margaret Mitchell, known for her research on AI ethics, has said that disclaimers of responsibility cannot replace shared accountability. Timnit Gebru has argued that AI products should undergo third-party audits to reduce potential long-term harm. Kate Crawford, author of "Atlas of AI," has emphasized that these systems do not exist in a vacuum because they are designed within political and economic ecosystems.

Institutions such as UNESCO and IEEE propose principles including algorithmic transparency, inclusive training datasets, human oversight, and enforceable audits. Huawei’s current infrastructure appears to align with local standards, but it still falls short of international frameworks that impose obligations, such as the EU AI Act or the proposed U.S. Algorithmic Accountability Act. These concerns echo media analyses such as the DW Documentary on artificial intelligence and ethics, which explores cultural differences in AI regulation and risk tolerance.

Geopolitical lens: Huawei’s global push

The controversy comes at a sensitive time. Huawei is positioning itself as a major technology player across European and North American markets. Compliance with local and international AI laws is no longer a procedural matter, because it shapes trust and future access to these markets. European regulators require disclosure of how algorithmic decisions are made and demand proactive risk assessments for technologies that affect public discourse and rights.

As regulators in the United States and the European Union increase scrutiny of AI imports, Huawei will need to adopt stricter compliance measures and third-party verification. Consumers worldwide have become more aware of content moderation and are seeking stronger guardrails. Comparisons are already being drawn with other AI deployments, including companies such as SoundHound, which has gained attention for its compliance-ready features.

Frequently asked questions

What did Huawei’s AI voice assistant do?

According to reports, the Xiaoyi voice assistant answered politically sensitive questions in ways that appeared biased and supportive of specific nationalist views. Although interpretations depend on subtle phrasing, many viewers felt the responses had a one-sided or dismissive tone.

Why is Huawei’s AI being criticized?

The company is under fire because the assistant displayed cultural and political bias in its responses. This raised concerns about whether state or ideological perspectives are embedded in the algorithm and whether Huawei maintains adequate ethical review processes.

What is algorithmic bias in AI?

Algorithmic bias refers to unintended prejudice or skewed behavior exhibited by AI systems. It often results from biased input data, weak model accountability, or insufficient content controls that fail to protect marginalized or diverse perspectives.
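
One common way to make this definition measurable is a disparity check: send matched prompts about different groups, flag responses judged dismissive or offensive, and compare the flag rates. The Python sketch below is a minimal illustration under stated assumptions. The group labels, the keyword-based flagging rule, and the function names are hypothetical, and a real audit would rely on human raters or a trained classifier rather than keyword matching. It captures only one kind of bias (unequal treatment across groups) and is not a complete audit method.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Tuple


def flagged_rate_by_group(
    records: Iterable[Tuple[str, str]],
    is_flagged: Callable[[str], bool],
) -> Dict[str, float]:
    """Return, for each prompt group, the fraction of responses that were flagged."""
    totals: Dict[str, int] = defaultdict(int)
    flagged: Dict[str, int] = defaultdict(int)
    for group, response in records:
        totals[group] += 1
        if is_flagged(response):
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def max_disparity(rates: Dict[str, float]) -> float:
    """Largest gap between any two groups' rates; 0.0 means they are treated alike."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical audit log: (group the prompt was about, assistant's response).
    log = [
        ("group_a", "That community is shameful."),
        ("group_a", "Here is some historical background."),
        ("group_b", "Here is some historical background."),
        ("group_b", "Here is more context on the topic."),
    ]

    # Toy flagging rule; a real audit would use raters or a trained classifier.
    def is_dismissive(text: str) -> bool:
        return "shameful" in text.lower()

    rates = flagged_rate_by_group(log, is_dismissive)
    print(rates)                 # {'group_a': 0.5, 'group_b': 0.0}
    print(max_disparity(rates))  # 0.5
```

A nonzero gap does not prove bias on its own, since sample sizes here are tiny, but consistently large gaps across many matched prompts are the kind of evidence third-party reviewers look for.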

Has other AI faced similar ethical problems?

Yes. Microsoft’s experimental chatbot Tay became a social media fiasco, and Google Photos mislabeled Black individuals in a dehumanizing way. These incidents drew strong criticism from civil rights groups and engineering leaders, prompting changes to training practices and AI governance.

How do global technology companies govern AI?

Leading companies follow guidelines from organizations such as IEEE, which aim to make AI systems more accountable through audit processes, explainability features, and fair data sourcing. Some governments are studying or enacting laws to protect users from discriminatory outcomes.

The path forward for Huawei and AI governance

Huawei’s challenge with Xiaoyi is a warning sign. Success in global markets depends on transparency, safety, and ethical AI development. Beyond issuing public apologies, Huawei must commit to showing how its models are trained and how harmful outputs will be prevented in future deployments. This includes adopting stricter content filtering, documenting decision-making protocols, and participating in international ethics councils.

On a broader level, this episode points to the need for industry-wide cooperation. Developers cannot ignore that their technologies operate in social spaces. As human interaction with digital assistants grows, maintaining trust and accountability will determine which companies thrive. The issue also touches on deeper questions about how AI should represent human perspectives and what limits developers must impose to preserve objectivity and respect.

2025-07-12 12:30:00