Implementing the Self-Refine Technique Using Large Language Models (LLMs)

This tutorial shows how to implement the Self-Refine technique using large language models (LLMs) with Mirascope, a framework for building structured prompt workflows. Self-Refine is a prompt engineering strategy in which the model evaluates its own output, generates feedback, and iteratively improves its response based on that feedback. This refinement loop can be repeated several times to improve the quality and accuracy of the final answer.

The Self-Refine approach is especially effective for tasks that involve reasoning, code generation, and content creation, where incremental improvements lead to significantly better results.
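Before bringing in any library, the loop itself can be sketched in plain Python. This is a minimal illustration, assuming the three stages (generate, critique, revise) are passed in as ordinary callables; `refine_loop` and the `toy_*` functions are hypothetical stand-ins so the sketch runs without an API key, and they are not part of Mirascope.

```python
from typing import Callable


def refine_loop(
    query: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], str],
    revise: Callable[[str, str, str], str],
    depth: int,
) -> str:
    """Run `depth` rounds of critique-then-revise on an initial answer."""
    response = generate(query)
    for _ in range(depth):
        feedback = critique(query, response)
        response = revise(query, response, feedback)
    return response


# Toy stand-ins (hypothetical): a real setup would call an LLM in each stage.
def toy_generate(query: str) -> str:
    return "draft"


def toy_critique(query: str, response: str) -> str:
    return f"feedback on '{response}'"


def toy_revise(query: str, response: str, feedback: str) -> str:
    return response + "+"


print(refine_loop("What is 2 + 2?", toy_generate, toy_critique, toy_revise, depth=2))
```

With `depth=0` the function simply returns the initial answer, which makes the cost of each extra round easy to reason about.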

Installing the dependencies

    !pip install "mirascope[openai]"

OpenAI API Key

To get an OpenAI API key, visit https://platform.openai.com/settings/organization/api-keys and create a new key. If you are a new user, you may need to add billing details and make a minimum payment of $5 to activate API access.

    import os
    from getpass import getpass

    os.environ["OPENAI_API_KEY"] = getpass('Enter OpenAI API Key: ')

Basic Self-Refine Implementation

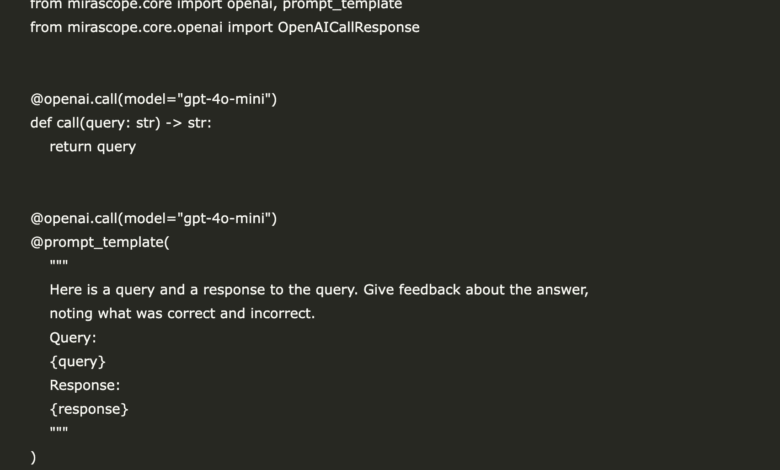

We start by implementing the Self-Refine technique using Mirascope's @openai.call and @prompt_template decorators. The process begins by generating an initial response to the user's query. This response is then evaluated by the same model, which provides constructive feedback. Finally, the model uses this feedback to generate an improved response. The self_refine function lets us repeat this refinement process for a specified number of iterations, improving the quality of the output with each cycle.

    from mirascope.core import openai, prompt_template
    from mirascope.core.openai import OpenAICallResponse


    # Initial call: the query itself is used as the prompt.
    @openai.call(model="gpt-4o-mini")
    def call(query: str) -> str:
        return query


    # Feedback call: the same model critiques a given response.
    @openai.call(model="gpt-4o-mini")
    @prompt_template(
        """
        Here is a query and a response to the query. Give feedback about the answer,
        noting what was correct and incorrect.
        Query:
        {query}
        Response:
        {response}
        """
    )
    def evaluate_response(query: str, response: OpenAICallResponse): ...


    # Revision call: the feedback is computed first and injected into the prompt.
    @openai.call(model="gpt-4o-mini")
    @prompt_template(
        """
        For this query:
        {query}
        The following response was given:
        {response}
        Here is some feedback about the response:
        {feedback}

        Consider the feedback to generate a new response to the query.
        """
    )
    def generate_new_response(
        query: str, response: OpenAICallResponse
    ) -> openai.OpenAIDynamicConfig:
        feedback = evaluate_response(query, response)
        return {"computed_fields": {"feedback": feedback}}


    def self_refine(query: str, depth: int) -> str:
        response = call(query)
        # Each iteration evaluates the current response and generates a revised one.
        for _ in range(depth):
            response = generate_new_response(query, response)
        return response.content


    query = "A train travels 120 km at a certain speed. If the speed had been 20 km/h faster, it would have taken 30 minutes less to cover the same distance. What was the original speed of the train?"

    print(self_refine(query, 1))

Enhanced Self-Refine with a Response Model

In this enhanced version, we define a structured response model, MathSolution, using Pydantic to capture both the solution steps and the final numerical answer. The enhanced_generate_new_response function refines the output by incorporating the model-generated feedback and formatting the improved response into a well-defined schema. This approach ensures clarity, consistency, and better downstream usability of the refined answer, especially for tasks such as solving mathematical problems.
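To see what a schema of this shape provides independently of any model call, the sketch below validates hand-written JSON against a Pydantic model with the same two fields. It assumes Pydantic v2 (for `model_validate_json`); `ExampleSolution` and both JSON payloads are hypothetical illustrations, not output from the tutorial's run.

```python
from pydantic import BaseModel, Field, ValidationError


class ExampleSolution(BaseModel):
    """Hypothetical stand-in with the two fields described above."""

    steps: list[str] = Field(..., description="The steps taken to solve the problem")
    final_answer: float = Field(..., description="The final numerical answer")


# A well-formed payload parses into typed attributes.
good = ExampleSolution.model_validate_json(
    '{"steps": ["Let v be the original speed", "Solve for v"], "final_answer": 60}'
)
print(good.steps)
print(good.final_answer)

# A payload missing a required field is rejected rather than passed through.
try:
    ExampleSolution.model_validate_json('{"steps": ["Let v be the original speed"]}')
    rejected = False
except ValidationError:
    rejected = True
print(rejected)
```

Because the final answer is a typed `float` rather than free text, downstream code can compare or compute with it directly.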

    from pydantic import BaseModel, Field


    class MathSolution(BaseModel):
        steps: list[str] = Field(..., description="The steps taken to solve the problem")
        final_answer: float = Field(..., description="The final numerical answer")


    # Same revision prompt as before, but the output is parsed into MathSolution.
    @openai.call(model="gpt-4o-mini", response_model=MathSolution)
    @prompt_template(
        """
        For this query:
        {query}
        The following response was given:
        {response}
        Here is some feedback about the response:
        {feedback}

        Consider the feedback to generate a new response to the query.
        Provide the solution steps and the final numerical answer.
        """
    )
    def enhanced_generate_new_response(
        query: str, response: OpenAICallResponse
    ) -> openai.OpenAIDynamicConfig:
        feedback = evaluate_response(query, response)
        return {"computed_fields": {"feedback": feedback}}


    def enhanced_self_refine(query: str, depth: int) -> MathSolution:
        # At least one refinement round is needed to produce a MathSolution.
        if depth < 1:
            raise ValueError("depth must be at least 1")
        response = call(query)
        for _ in range(depth):
            solution = enhanced_generate_new_response(query, response)
            # Flatten the structured solution to text for the next round.
            response = f"Steps: {solution.steps}\nFinal Answer: {solution.final_answer}"
        return solution


    # Example usage
    result = enhanced_self_refine(query, 1)
    print(result)

The enhanced Self-Refine technique proved effective at accurately solving the following math problem:

“A train travels 120 km at a certain speed. If the speed had been 20 km/h faster, it would have taken 30 minutes less to cover the same distance. What was the original speed of the train?”

With a single refinement iteration, the model delivered a sound and clearly reasoned derivation, leading to the correct answer of 60 km/h. This illustrates several key benefits of the Self-Refine approach:

- Improved accuracy through iterative, feedback-driven refinement.

- Clearer reasoning steps, including defining the variable, formulating the equation, and applying the quadratic formula.

- Greater transparency, which makes it easier for users to understand and trust the solution.
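The reasoning steps named in the list above can be written out explicitly. The derivation below is a worked check of the stated answer, not the model's verbatim output; it takes v as the original speed in km/h.

```latex
\begin{align*}
\frac{120}{v} - \frac{120}{v + 20} &= \frac{1}{2}
  && \text{(30 minutes} = \tfrac{1}{2}\text{ hour saved)} \\
240\,(v + 20) - 240\,v &= v\,(v + 20)
  && \text{(multiply both sides by } 2v(v + 20)\text{)} \\
v^2 + 20v - 4800 &= 0 \\
v &= \frac{-20 \pm \sqrt{20^2 + 4 \cdot 4800}}{2}
   = \frac{-20 \pm 140}{2}
\end{align*}
```

The negative root, v = -80, is not physically meaningful, so v = 60 km/h. As a check, 120/60 = 2 hours and 120/80 = 1.5 hours, a difference of 30 minutes.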

In broader applications, this technique holds strong promise for tasks that demand accuracy, structure, and iterative improvement, from technical problem solving to creative and professional writing. However, implementers should remain mindful of the trade-offs in computational cost and tune the depth and feedback prompts to match their specific use case.
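To make the cost trade-off concrete: in the basic implementation shown earlier, each refinement round issues two model calls (one through evaluate_response for feedback, one to generate the revision) on top of the single initial call. The sketch below just counts them; `count_llm_calls` is a hypothetical helper for illustration, not part of Mirascope.

```python
def count_llm_calls(depth: int) -> int:
    """Model calls made by one initial call plus `depth` refinement rounds."""
    if depth < 0:
        raise ValueError("depth must be non-negative")
    # 1 initial call + (1 feedback call + 1 revision call) per round.
    return 1 + 2 * depth


for depth in (0, 1, 3):
    print(depth, count_llm_calls(depth))
```

The count grows linearly with depth, and because each round's prompt includes the previous response and the feedback, token usage per call tends to grow as well.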


I am a civil engineering graduate (2022) from Jamia Millia Islamia, New Delhi, with a strong interest in data science, especially neural networks and their applications in various fields.


2025-07-29 14:47:00