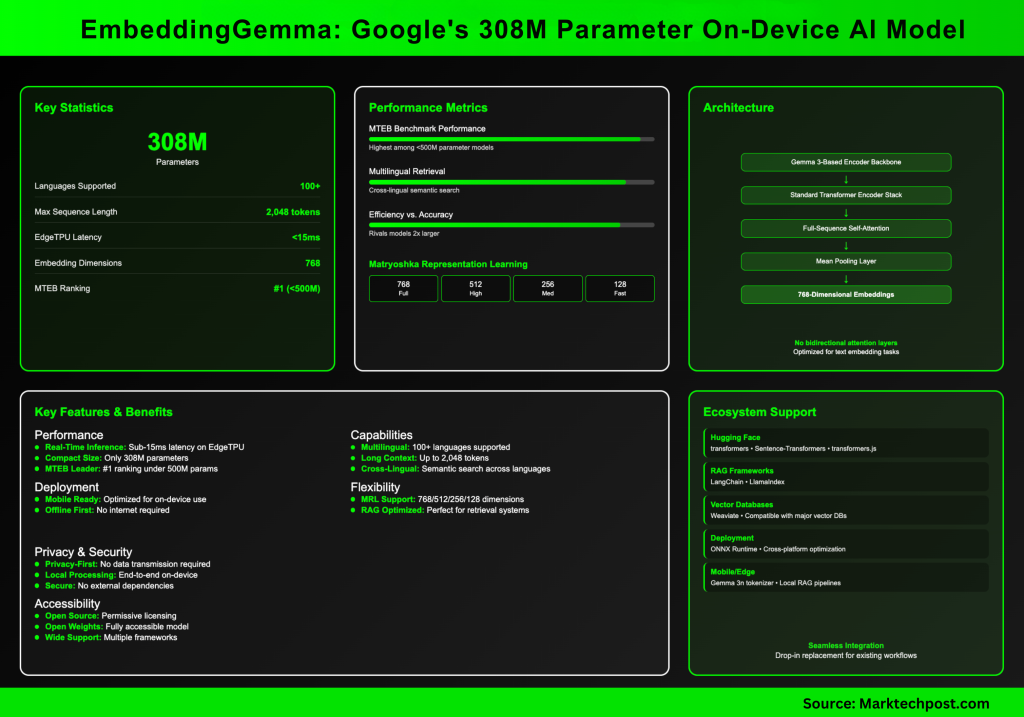

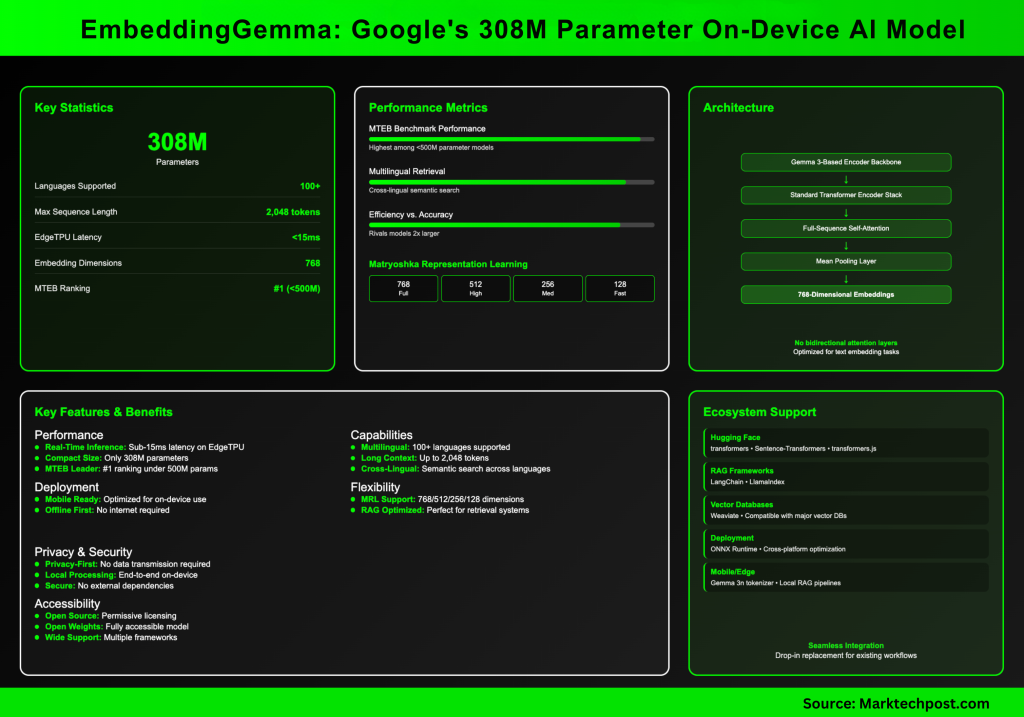

Google AI Releases EmbeddingGemma: A 308M Parameter On-Device Embedding Model with State-of-the-Art MTEB Results

EmbeddingGemma is Google's new open text embedding model optimized for on-device AI, designed to balance efficiency with state-of-the-art retrieval performance.

How compact is EmbeddingGemma compared to other models?

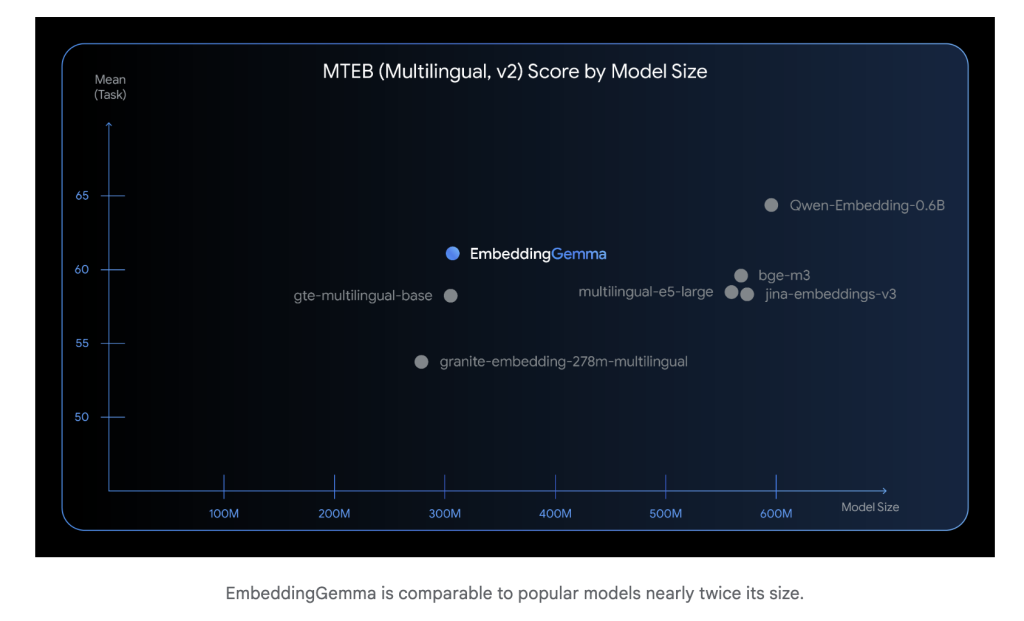

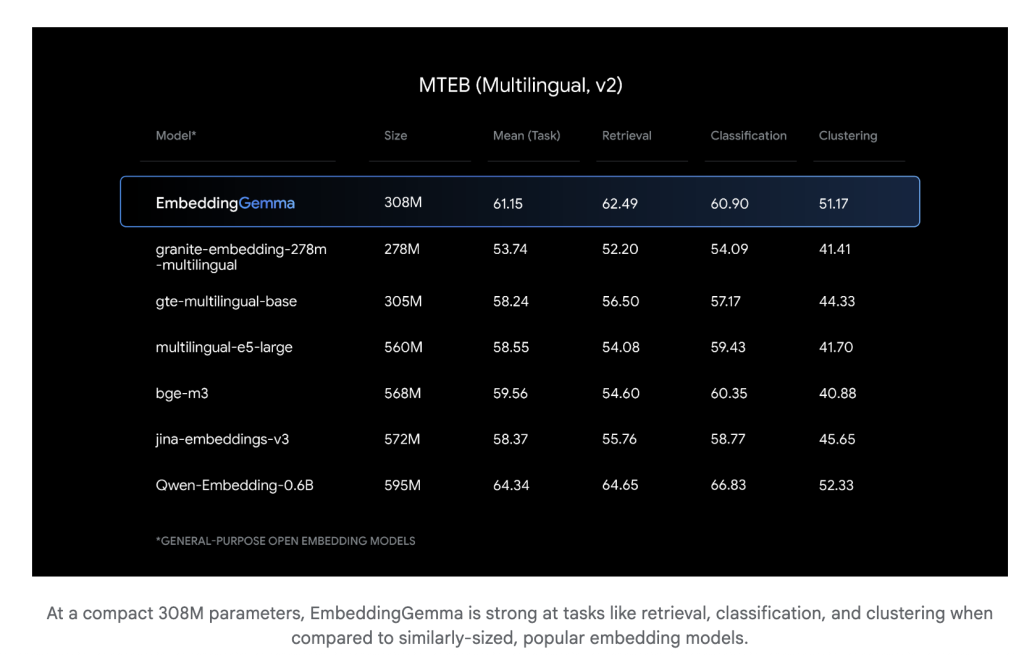

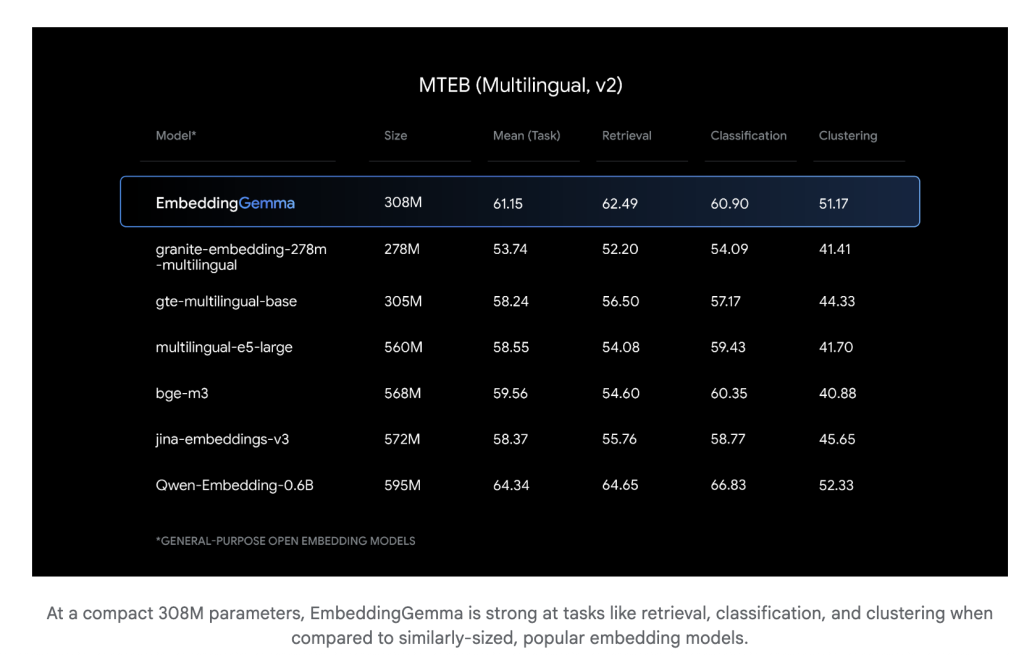

At just 308 million parameters, EmbeddingGemma is lightweight enough to run on mobile devices and in offline environments. Despite its size, it performs competitively with much larger embedding models. Inference latency is low: under 15 ms for 256 tokens on EdgeTPU, which makes it suitable for real-time applications.

How does it perform on multilingual benchmarks?

EmbeddingGemma was trained across 100+ languages and achieved the highest ranking on the Massive Text Embedding Benchmark (MTEB) among models under 500M parameters. Its performance rivals or exceeds that of embedding models nearly twice its size, particularly in cross-lingual retrieval and semantic search.

What is the underlying architecture?

EmbeddingGemma is built on a Gemma 3-based encoder backbone with mean pooling. Importantly, the architecture does not use the multimodal-specific bidirectional attention layers that Gemma 3 applies to image inputs. Instead, EmbeddingGemma employs a standard transformer encoder stack with full-sequence self-attention, which is typical for text embedding models.

This encoder produces 768-dimensional embeddings and supports sequences of up to 2,048 tokens, making it well suited for retrieval-augmented generation (RAG) and long-document search. The mean pooling step ensures fixed-length vector representations regardless of input size.
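To make the pooling step concrete, here is a simplified plain-Python sketch of mean pooling only, not the model's actual code. It assumes the encoder has already produced one hidden-state vector per token plus a 0/1 attention mask marking padding; the helper name `mean_pool` and the toy 4-dimensional vectors are hypothetical stand-ins for the real 768-dimensional states.

```python
from typing import List

Vector = List[float]


def mean_pool(token_states: List[Vector], attention_mask: List[int]) -> Vector:
    """Average the hidden states of real (unmasked) tokens into one vector.

    token_states: one vector per token, all of the same dimension.
    attention_mask: 1 for real tokens, 0 for padding.
    """
    if len(token_states) != len(attention_mask):
        raise ValueError("token_states and attention_mask must have the same length")
    kept = [vec for vec, m in zip(token_states, attention_mask) if m == 1]
    if not kept:
        raise ValueError("attention_mask selects no tokens")
    dim = len(kept[0])
    # Sum each dimension over the kept tokens, then divide by the token count.
    sums = [0.0] * dim
    for vec in kept:
        for i, x in enumerate(vec):
            sums[i] += x
    return [s / len(kept) for s in sums]


# Inputs of different lengths (toy 4-dim states) yield vectors of the same size.
short = mean_pool([[1.0, 2.0, 3.0, 4.0], [3.0, 2.0, 1.0, 0.0]], [1, 1])
padded = mean_pool(
    [[1.0, 1.0, 1.0, 1.0], [2.0, 2.0, 2.0, 2.0], [6.0, 6.0, 6.0, 6.0], [9.0, 9.0, 9.0, 9.0]],
    [1, 1, 1, 0],  # last position is padding and is ignored
)
print(short)   # [2.0, 2.0, 2.0, 2.0]
print(padded)  # [3.0, 3.0, 3.0, 3.0]
```

Both calls return a 4-component vector even though the inputs have different lengths, which is the fixed-length property described above. The mask matters: without it, padding positions would pull the average toward arbitrary values.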

What makes its embeddings flexible?

EmbeddingGemma employs Matryoshka Representation Learning (MRL). This allows embeddings to be truncated from 768 dimensions to 512, 256, or even 128 with minimal loss of quality. Developers can tune the trade-off between storage efficiency and retrieval accuracy without retraining.
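A minimal sketch of how an MRL embedding might be shortened on the application side, assuming vectors are compared with cosine similarity: keep the leading components and rescale to unit length. MRL training is what makes these prefixes meaningful; the truncation itself is just slicing. The function names and the toy 8-dimensional vectors below are hypothetical. Recent Sentence Transformers releases also accept a `truncate_dim` argument on `SentenceTransformer` that performs the slicing, so check your installed version before relying on either approach.

```python
import math
from typing import List


def truncate_and_normalize(embedding: List[float], dim: int) -> List[float]:
    """Keep the first `dim` components of an MRL embedding and rescale to unit length."""
    if not 0 < dim <= len(embedding):
        raise ValueError(f"dim must be in 1..{len(embedding)}")
    head = embedding[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        raise ValueError("truncated embedding has zero norm")
    return [x / norm for x in head]


def cosine(a: List[float], b: List[float]) -> float:
    """Dot product of two unit vectors, i.e. their cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


# Toy 8-dim "embeddings" stand in for 768-dim model outputs.
full_a = [0.6, 0.8, 0.0, 0.0, 0.1, -0.1, 0.05, 0.0]
full_b = [0.8, 0.6, 0.0, 0.0, -0.1, 0.1, 0.0, 0.05]

for d in (8, 4, 2):
    a = truncate_and_normalize(full_a, d)
    b = truncate_and_normalize(full_b, d)
    print(d, round(cosine(a, b), 3))
```

Renormalizing after slicing keeps the dot product equal to cosine similarity, so an index built from 256-dimensional vectors can reuse the same scoring code as one built from 768-dimensional vectors.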

Can it work fully offline?

Yes. EmbeddingGemma is specifically designed for on-device, offline-first use cases. Because it shares a tokenizer with Gemma 3n, the same embeddings can directly power compact retrieval pipelines for local RAG systems, with the privacy benefit of avoiding cloud inference.

What tools and frameworks support EmbeddingGemma?

It integrates seamlessly with:

- Hugging Face (transformers, Sentence-Transformers, transformers.js)

- LangChain and LlamaIndex for RAG pipelines

- Weaviate and other vector databases

- ONNX Runtime for optimized deployment across platforms

This ecosystem ensures developers can slot it directly into existing workflows.

How can it be implemented in practice?

(1) Load and embed

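```python
from sentence_transformers import SentenceTransformer

# Load the model from the Hugging Face Hub
model = SentenceTransformer("google/embeddinggemma-300m")

# Encode a list of texts; returns a NumPy array of shape (1, 768)
emb = model.encode(["example text to embed"])
```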

(2) Control embedding size

Use the full 768 dimensions for maximum accuracy, or truncate to 512, 256, or 128 dimensions for lower memory use and faster retrieval.
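To put the memory side of that trade-off into numbers, the sketch below estimates the raw size of a flat vector index. It assumes uncompressed float32 storage (4 bytes per value) and ignores document IDs, metadata, and index overhead; the function names are hypothetical.

```python
from typing import Dict

BYTES_PER_FLOAT32 = 4  # assumption: vectors are stored uncompressed as float32


def index_size_mb(num_docs: int, dim: int, bytes_per_value: int = BYTES_PER_FLOAT32) -> float:
    """Raw size of a flat vector index in megabytes (10**6 bytes), ignoring metadata."""
    return num_docs * dim * bytes_per_value / 1_000_000


def size_table(num_docs: int) -> Dict[int, float]:
    """Index size for each MRL dimension mentioned in the article."""
    return {dim: index_size_mb(num_docs, dim) for dim in (768, 512, 256, 128)}


for dim, mb in size_table(100_000).items():
    print(f"{dim:>3} dims: {mb:.1f} MB")
# 768 dims: 307.2 MB
# 512 dims: 204.8 MB
# 256 dims: 102.4 MB
# 128 dims: 51.2 MB
```

For 100,000 documents, dropping from 768 to 128 dimensions shrinks the raw index by a factor of 6, before any quantization is applied.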

(3) Integrate into a RAG pipeline

Run similarity search locally (cosine similarity) and feed the top results into Gemma 3n for generation. This enables a fully offline RAG pipeline.
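The retrieval half of that pipeline can be sketched in plain Python. This is an illustrative sketch, not an official API: toy 3-dimensional vectors stand in for EmbeddingGemma outputs, `top_k` and `build_prompt` are hypothetical helpers, and the final call to Gemma 3n is deliberately left out because it depends on your on-device runtime.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], doc_vecs: List[Sequence[float]], k: int) -> List[Tuple[int, float]]:
    """Return (doc_index, score) pairs for the k most similar documents, best first."""
    scored = [(i, cosine_similarity(query, d)) for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, docs: List[str], hits: List[Tuple[int, float]]) -> str:
    """Assemble a grounded prompt; passing it to Gemma 3n is left to your runtime."""
    context = "\n".join(f"- {docs[i]}" for i, _ in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy 3-dim vectors stand in for EmbeddingGemma embeddings of documents and a query.
docs = ["EmbeddingGemma has 308M parameters.", "Paris is in France.", "MRL allows 128-dim vectors."]
doc_vecs = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9], [0.7, 0.6, 0.1]]
query_vec = [1.0, 0.2, 0.0]

hits = top_k(query_vec, doc_vecs, k=2)
prompt = build_prompt("How large is EmbeddingGemma?", docs, hits)
print(prompt)
```

A brute-force scan like this is adequate for a few thousand local documents; larger collections would typically move to a vector database such as Weaviate or an approximate nearest-neighbor index.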

Why does EmbeddingGemma matter?

- Efficiency at scale – High multilingual retrieval accuracy in a compact footprint.

- Flexibility – Adjustable embedding dimensions via MRL.

- Privacy – End-to-end offline pipelines without external dependencies.

- Accessibility – Open weights, permissive licensing, and strong ecosystem support.

EmbeddingGemma shows that smaller embedding models can achieve best-in-class retrieval performance while remaining light enough for offline deployment. It represents an important step toward efficient, privacy-conscious, and scalable on-device AI.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity among readers.


2025-09-04 21:39:00