Deion Sanders’ Sons Shedeur and Shilo Enter NFL Together

Deion Sanders now has two sons in the NFL, and they join the growing ranks of brothers in the league.

Hours after quarterback Shedeur, 23, was drafted by the Cleveland Browns, his brother, safety Shilo, signed a contract with the Tampa Bay Buccaneers on Saturday, April 26, as an undrafted free agent.

Shilo, 25, was not selected by any team during the 2025 NFL Draft earlier this week. Meanwhile, Shedeur was expected to be chosen in the first round, but instead heard his name called in the fifth, amid speculation that his father had, one way or another, affected his disappointing draft position.

“Both of you are resilient,” Deion, 57, told his two sons during a livestream on Saturday, per USA Today. “[The draft] tested the whole family. I am grateful. Tampa is a great place.”

None of the Sanders family attended the draft in person in Green Bay, Wisconsin; instead, they watched from the family’s home in Texas. They were all particularly surprised that Shedeur was not drafted higher.

“We did not expect this, of course,” Shedeur said in a social media video earlier this week, after his name was not called in the first round. “But I feel that with God, anything is possible – everything is possible. I do not feel that this happened without reason.”

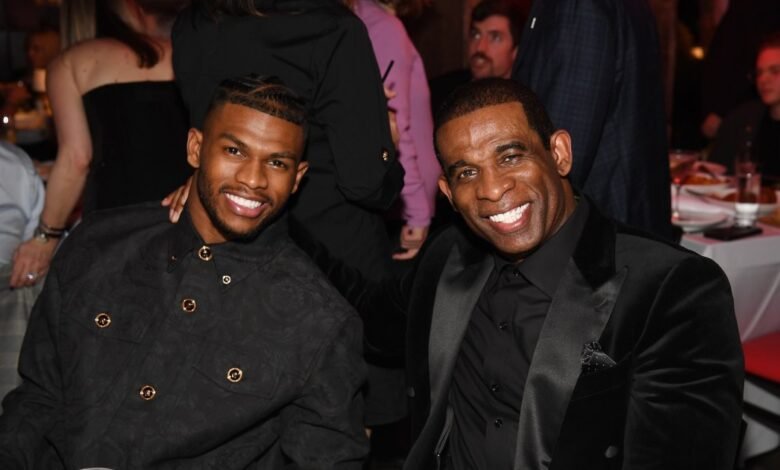

Shedeur Sanders, Deion Sanders, and Shilo Sanders. Jeff Kravitz/FilmMagic

He added: “All of this is, of course, fuel to the fire. Under any circumstances, we all know that this should not have happened. But we understand that we are facing bigger and better things. Tomorrow, we will be happy regardless.”

Days later, Shilo indicated via Twitch that he was just hoping to make it to the NFL, on any team, so that he could play against his younger brother. (Famous NFL brothers also include the Kelces, the Mannings, the Watts and the McCaffreys.)

Shedeur and Shilo are, of course, following in the footsteps of their dad, Deion. The legendary NFL cornerback played 14 seasons with the Atlanta Falcons, San Francisco 49ers, Dallas Cowboys, Washington Commanders, and Baltimore Ravens. He was inducted into both the College Football Hall of Fame and the Pro Football Hall of Fame in 2011.

Deion, known by the nickname Prime Time, is currently the head football coach of the Colorado Buffaloes. As Coach Prime, he coached Shedeur and Shilo in college and is now looking ahead to their next steps in the NFL.

“Everyone is concerned about what happened yesterday and fearful of what will happen tomorrow, when we should be focused on now.”

2025-04-27 14:43:00