Kumo’s ‘relational foundation model’ predicts the future your LLM can’t see


Editor’s note: Kumo AI was one of the finalists at VB Transform during our annual innovation showcase and presented RFM from the mainstage at VB Transform on Wednesday.

The generative AI boom has given us powerful language models that can write, summarize and reason over vast amounts of text and other types of data. But when it comes to high-value predictive tasks, such as predicting customer churn or detecting fraud from structured, relational data, enterprises remain stuck in the world of traditional machine learning.

Stanford professor and Kumo AI co-founder Jure Leskovec argues that this is the critical missing piece. His company’s tool, a relational foundation model (RFM), is a new kind of pre-trained AI that brings the “zero-shot” capabilities of large language models (LLMs) to structured databases.

“It’s about making a forecast about something you don’t know, something that has not happened yet,” Leskovec told VentureBeat. “And that’s a fundamentally new capability that is, I would argue, missing from the current purview of what we think of as gen AI.”

Why predictive ML is a “30-year-old technology”

While LLMs and retrieval-augmented generation (RAG) systems can answer questions about existing knowledge, they are fundamentally retrospective: they retrieve and reason over information that already exists. For predictive business tasks, companies still rely on classic machine learning.

For example, to build a model that predicts customer churn, a business must hire a team of data scientists who spend a considerable amount of time on “feature engineering,” the process of manually creating predictive signals from the data. This involves complex data wrangling to join information from different tables, such as a customer’s purchase history and website clicks, into a single, massive training table.
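To make the manual step concrete, here is a minimal sketch of hand-written feature engineering, assuming three hypothetical toy tables (`customers`, `purchases`, `clicks`) with made-up column names. It joins them on `customer_id` and computes a few hand-picked features per customer using only data from before a cutoff date. Every feature here is a human choice, which is exactly the labor the article describes.

```python
from collections import defaultdict
from datetime import date

# Hypothetical toy tables; table and column names are illustrative assumptions.
customers = [
    {"customer_id": 1, "signup": date(2024, 1, 5)},
    {"customer_id": 2, "signup": date(2024, 3, 12)},
]
purchases = [
    {"customer_id": 1, "amount": 40.0, "day": date(2024, 5, 1)},
    {"customer_id": 1, "amount": 25.0, "day": date(2024, 5, 20)},
    {"customer_id": 2, "amount": 10.0, "day": date(2024, 4, 2)},
]
clicks = [
    {"customer_id": 1, "day": date(2024, 5, 28)},
    {"customer_id": 2, "day": date(2024, 4, 1)},
    {"customer_id": 2, "day": date(2024, 4, 3)},
]

def build_training_table(customers, purchases, clicks, cutoff):
    """Join the three tables on customer_id and hand-craft one feature row per customer."""
    spend = defaultdict(float)
    n_orders = defaultdict(int)
    last_purchase = {}
    for p in purchases:
        if p["day"] >= cutoff:  # only use history before the cutoff to avoid label leakage
            continue
        cid = p["customer_id"]
        spend[cid] += p["amount"]
        n_orders[cid] += 1
        last_purchase[cid] = max(last_purchase.get(cid, p["day"]), p["day"])
    n_clicks = defaultdict(int)
    for c in clicks:
        if c["day"] < cutoff:
            n_clicks[c["customer_id"]] += 1
    rows = []
    for cust in customers:
        cid = cust["customer_id"]
        last = last_purchase.get(cid)
        rows.append({
            "customer_id": cid,
            "tenure_days": (cutoff - cust["signup"]).days,
            "total_spend": spend[cid],
            "num_orders": n_orders[cid],
            "num_clicks": n_clicks[cid],
            "days_since_last_purchase": (cutoff - last).days if last is not None else None,
        })
    return rows

for row in build_training_table(customers, purchases, clicks, cutoff=date(2024, 6, 1)):
    print(row)
```

Real pipelines repeat this for dozens of tables and hundreds of candidate features, and each new prediction task usually needs its own table.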

“If you want to do machine learning (ML), sorry, you are stuck in the past,” Leskovec said. Expensive, time-consuming bottlenecks prevent most organizations from being truly agile with their data.

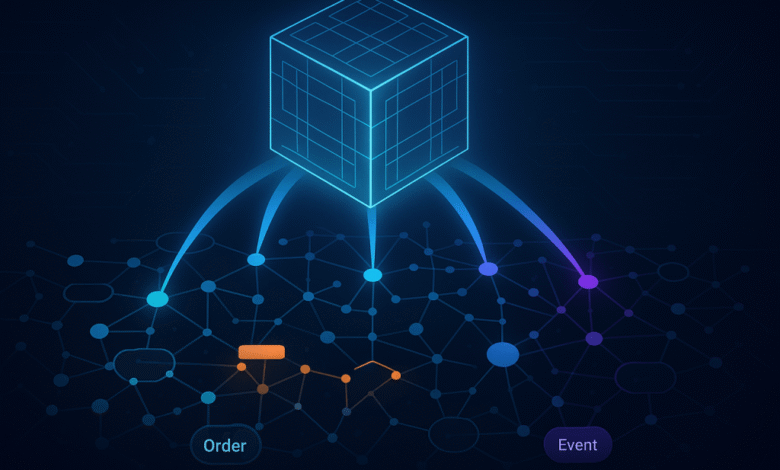

How Kumo is generalizing transformers for databases

Kumo’s approach, “relational deep learning,” sidesteps this manual process with two key insights. First, it automatically represents any relational database as a single, interconnected graph. For example, if the database has a “users” table that records customer information and an “orders” table that records customer purchases, every row in the users table becomes a user node, every row in the orders table becomes an order node, and so on. These nodes are then automatically connected through the database’s existing relationships, such as foreign keys, creating a rich map of the entire dataset with no manual effort.
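The first insight can be illustrated with a minimal sketch, assuming a hypothetical two-table schema (`users`, `orders`) and a hand-declared foreign key. Each row becomes a node keyed by `(table, primary key)` with its columns as node features, and each foreign-key reference becomes an undirected edge. This shows the general idea only and is not Kumo’s implementation.

```python
from collections import defaultdict

# Hypothetical schema: table name -> rows. Names and values are illustrative assumptions.
tables = {
    "users": [{"user_id": 1, "name": "Ada"}, {"user_id": 2, "name": "Lin"}],
    "orders": [
        {"order_id": 10, "user_id": 1, "total": 40.0},
        {"order_id": 11, "user_id": 1, "total": 25.0},
        {"order_id": 12, "user_id": 2, "total": 10.0},
    ],
}
primary_keys = {"users": "user_id", "orders": "order_id"}
# (child table, foreign-key column) -> parent table
foreign_keys = {("orders", "user_id"): "users"}

def database_to_graph(tables, primary_keys, foreign_keys):
    """Turn every row into a node and every foreign-key reference into an undirected edge."""
    nodes = {}
    for table, rows in tables.items():
        pk = primary_keys[table]
        for row in rows:
            nodes[(table, row[pk])] = row  # row attributes become node features
    adjacency = defaultdict(set)
    for (child, fk_col), parent in foreign_keys.items():
        pk = primary_keys[child]
        for row in tables[child]:
            src = (child, row[pk])
            dst = (parent, row[fk_col])
            if dst in nodes:  # skip dangling references
                adjacency[src].add(dst)
                adjacency[dst].add(src)
    return nodes, adjacency

nodes, adjacency = database_to_graph(tables, primary_keys, foreign_keys)
print(len(nodes))                       # 5
print(sorted(adjacency[("users", 1)]))  # [('orders', 10), ('orders', 11)]
```

Because the edges come straight from the schema’s foreign keys, adding another table (say, clicks) only requires declaring its keys, not writing new join logic.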

Second, Kumo generalized the transformer architecture, the engine behind LLMs, to learn directly from this graph representation. Transformers excel at understanding sequences of tokens by using an “attention mechanism” to weigh the importance of different tokens relative to one another.
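For readers unfamiliar with attention, here is a minimal sketch of standard scaled dot-product attention in plain Python. Each token’s output is a weighted average of all tokens’ values, with weights given by a softmax over query-key similarity divided by the square root of the dimension. As a simplifying assumption, the sketch uses the raw embeddings as queries, keys and values; real transformers first apply learned projection matrices.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: outputs are softmax(q . k / sqrt(d))-weighted averages of values."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(w)
    return outputs, all_weights

# Three toy "token" embeddings with made-up numbers; Q = K = V here for simplicity.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(tokens, tokens, tokens)
```

In this toy input, the first token attends equally to itself and to the third token (both have the same dot product with it) and less to the second.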

Kumo’s RFM applies this same attention mechanism to the graph, allowing it to learn complex patterns and relationships across multiple tables simultaneously. Leskovec compares this leap to the evolution of computer vision. In the early 2000s, ML engineers had to manually design features such as edges and shapes to detect an object. Newer architectures such as convolutional neural networks (CNNs), however, can take in raw pixels and learn the relevant features automatically.

Similarly, the RFM ingests raw database tables and lets the network discover the most predictive signals on its own, without manual feature engineering.
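One common way to apply attention to a graph is to mask it so that each node attends only to itself and its direct neighbors. The sketch below shows one such layer, assuming hypothetical node IDs and made-up 2-dimensional embeddings. It is a generic graph-attention illustration, not a description of the RFM’s actual architecture, which the article does not detail.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def graph_attention_layer(features, adjacency):
    """One round of neighbor-masked attention: each node's new embedding is a
    softmax-weighted average over itself and its graph neighbors only."""
    new = {}
    for node, h in features.items():
        context = [node] + sorted(adjacency.get(node, ()))
        d = len(h)
        scores = [sum(a * b for a, b in zip(h, features[n])) / math.sqrt(d) for n in context]
        w = softmax(scores)
        new[node] = [sum(wi * features[n][c] for wi, n in zip(w, context)) for c in range(d)]
    return new

# Hypothetical nodes from a users/orders graph; embeddings are made-up numbers.
features = {
    "user:1": [1.0, 0.0],
    "order:10": [0.0, 1.0],
    "order:11": [0.5, 0.5],
    "user:3": [0.2, 0.8],  # a user with no orders, so no neighbors
}
adjacency = {
    "user:1": {"order:10", "order:11"},
    "order:10": {"user:1"},
    "order:11": {"user:1"},
}
layer1 = graph_attention_layer(features, adjacency)
layer2 = graph_attention_layer(layer1, adjacency)  # stacking layers spreads information further
```

Stacking layers lets signals travel across several hops, for example from orders to a user and back to that user’s other orders, which is how information from multiple tables can combine without hand-written joins.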

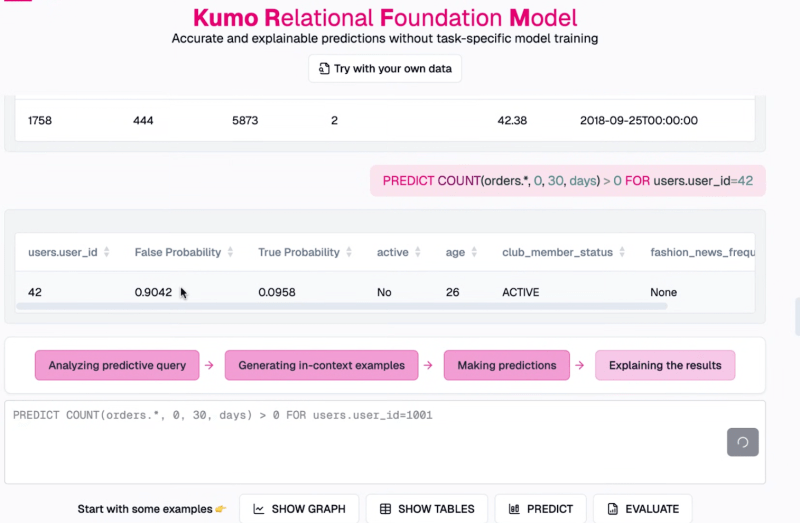

The result is a pre-trained foundation model that can perform predictive tasks on a new database immediately, a capability known as “zero-shot” prediction. During a demo, Leskovec showed how a user can write a simple query to predict whether a specific customer will place an order in the next 30 days. Within a second, the system returned a probability score along with an explanation of the data points that led to its conclusion, such as the user’s recent activity or lack thereof. The model had not been trained on the provided database; it adapted to it in real time through in-context learning.
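The idea of predicting without a training step can be illustrated with a deliberately simple stand-in: a similarity-weighted predictor that reads labeled examples from the database’s own history as “context” and answers a new query from them, with no parameters fitted. The sketch below is that stand-in (a kernel-style weighted vote), not Kumo’s model. The features (days since last activity scaled by 30, orders in the last 90 days scaled by 10) and all values are hypothetical.

```python
import math

def in_context_predict(context, query, temperature=1.0, top_k=2):
    """Estimate P(label = 1) for `query` from labeled `context` examples without any
    training step: weights are a softmax over negative squared distances, and the
    prediction is the weighted average of the context labels."""
    scores = [-sum((a - b) ** 2 for a, b in zip(feats, query)) / temperature
              for feats, _ in context]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    prob = sum(w * label for w, (_, label) in zip(weights, context))
    # A crude "explanation": the context examples that influenced the answer most.
    top = sorted(range(len(context)), key=lambda i: weights[i], reverse=True)[:top_k]
    return prob, [(context[i], round(weights[i], 3)) for i in top]

# Hypothetical context built from history:
# (days_since_last_activity / 30, orders_last_90d / 10) -> 1 if the customer ordered in the next 30 days.
context = [
    ((0.1, 0.5), 1),
    ((0.2, 0.4), 1),
    ((1.5, 0.0), 0),
    ((2.0, 0.1), 0),
]
prob_active, why_active = in_context_predict(context, query=(0.15, 0.45))
prob_idle, why_idle = in_context_predict(context, query=(1.8, 0.05))
```

A recently active customer gets a high probability and an explanation pointing at similar active customers, while a long-idle customer gets a low one. A foundation model does something far richer over the whole graph, but the shape is the same: context in, prediction out, no retraining.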

“We have a pre-trained model that you simply point to your data, and it will give you an accurate prediction 200 milliseconds later,” Leskovec said. He added that it can be “as accurate as, let’s say, weeks of a data scientist’s work.”

The interface is designed to be familiar to data analysts, not just machine learning specialists, democratizing access to predictive analytics.

Powering the agentic future

This technology has significant implications for the development of AI agents. For an agent to perform meaningful tasks within an enterprise, it needs to do more than just process language; it must make intelligent decisions based on the company’s own data. The RFM can serve as a predictive engine for these agents. For example, a customer service agent could query the RFM to estimate a customer’s likelihood of churning or their potential future value, then use an LLM to tailor its conversation and offers accordingly.
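The agent pattern described above, where a prediction first drives a decision and an LLM then phrases the reply, can be sketched as follows. Everything here is hypothetical: `predict_churn` stands in for whatever call returns a churn probability, the thresholds and offers are made up, and the returned string is the kind of instruction an agent might pass to an LLM.

```python
from typing import Callable

def plan_reply(customer_id: str,
               predict_churn: Callable[[str], float],
               high_risk: float = 0.7,
               medium_risk: float = 0.3) -> str:
    """Ask a predictive model for churn risk, then build instructions for an LLM reply."""
    p = predict_churn(customer_id)  # hypothetical call to a predictive engine
    if p >= high_risk:
        offer = "offer a retention discount"
    elif p >= medium_risk:
        offer = "mention loyalty rewards"
    else:
        offer = "answer the question; no special offer"
    return (f"Customer {customer_id} has an estimated churn probability of {p:.0%}. "
            f"When replying, {offer}.")

# Stub standing in for the predictive model; the scores are made up.
fake_scores = {"c-1": 0.82, "c-2": 0.12}
print(plan_reply("c-1", fake_scores.__getitem__))
# Customer c-1 has an estimated churn probability of 82%. When replying, offer a retention discount.
```

Keeping the prediction behind a plain callable means the decision logic does not care whether the score comes from a foundation model, a classic ML model or a test stub.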

“If we believe in an agentic future, agents will need to make decisions rooted in private data. And this is the way for an agent to make decisions,” Leskovec explained.

Kumo’s work points to a future where enterprise AI splits into two complementary domains: LLMs for handling retrospective knowledge in unstructured text, and RFMs for predictive forecasting on structured data. By eliminating the feature-engineering bottleneck, the RFM promises to put powerful ML tools in the hands of more enterprises, drastically reducing the time and cost of getting from data to decision.

The company has released a public demo of the RFM and plans to launch a version that lets users connect their own data in the coming weeks. For organizations that require maximum accuracy, Kumo will also offer a fine-tuning service to further boost performance on private datasets.


2025-06-27 19:40:00