Liquid AI Open-Sources LFM2: A New Generation of Edge LLMs

| What is included in this article |
| --- |
| Performance breakthroughs: 2x faster inference and 3x faster training |
| Technical architecture: hybrid design with convolution and attention blocks |
| Model specifications: three size variants (350M, 700M, and 1.2B parameters) |
| Benchmark results: superior performance compared to similarly sized models |
| Deployment optimization: edge-focused design for diverse hardware |
| Open-source access: Apache 2.0-based license |
| Market implications: impact on edge AI adoption |

The on-device AI landscape has taken a significant leap forward with Liquid AI's release of LFM2, its second generation of Liquid Foundation Models. This new series of generative AI models represents a paradigm shift in edge computing, delivering unprecedented performance optimizations designed specifically for on-device deployment while maintaining competitive quality standards.

Revolutionary performance gains

LFM2 sets new standards in the edge AI space by achieving substantial efficiency gains across multiple dimensions. The models deliver 2x faster decode and prefill performance than Qwen3 on CPU architectures, a critical advantage for real-time applications. Perhaps more impressively, the training process itself has been optimized to run 3x faster than training of the previous LFM generation, making LFM2 the most cost-effective path to building capable, general-purpose AI systems.

These performance improvements are not merely incremental; they represent a fundamental breakthrough in making powerful AI accessible on resource-constrained devices. The models are specifically engineered to unlock millisecond latency, offline resilience, and data-sovereign privacy, capabilities essential for phones, laptops, cars, robots, wearables, satellites, and other endpoints that must reason in real time.

Innovative hybrid architecture

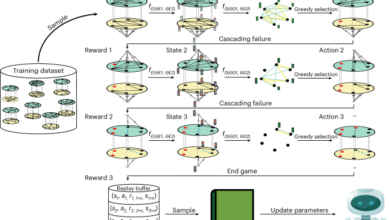

The technical foundation of LFM2 lies in its novel hybrid architecture, which combines the best aspects of convolution and attention mechanisms. The model uses a 16-block structure consisting of 10 double-gated short-range convolution blocks and 6 grouped query attention (GQA) blocks. This hybrid approach builds on Liquid AI's pioneering work on Liquid Time-constant Networks (LTCs), which introduced continuous-time recurrent neural networks built from linear dynamical systems modulated by nonlinear input gates.
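To make the 10-plus-6 block split concrete, here is a minimal Python sketch of such a layer stack, together with the head-sharing rule that defines grouped query attention (several query heads reading the same key/value head). The attention-block positions and head counts are hypothetical placeholders chosen for illustration; the article does not say where LFM2 places its GQA blocks or how many heads it uses.

```python
# Hypothetical sketch of a 16-block hybrid stack (10 convolution + 6 GQA blocks)
# and of the GQA query-head -> key/value-head mapping. The attention positions and
# head counts below are illustrative assumptions, not LFM2's published config.
from typing import List, Tuple

NUM_BLOCKS = 16
ATTN_POSITIONS: Tuple[int, ...] = (2, 5, 8, 10, 12, 14)  # hypothetical placement


def build_layout(num_blocks: int, attn_positions: Tuple[int, ...]) -> List[str]:
    """Return one entry per block: 'gqa' at attn_positions, 'conv' elsewhere."""
    return ["gqa" if i in attn_positions else "conv" for i in range(num_blocks)]


def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """In GQA, each consecutive group of query heads shares one key/value head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be divisible by n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


layout = build_layout(NUM_BLOCKS, ATTN_POSITIONS)
print(layout.count("conv"), layout.count("gqa"))  # 10 6

# Hypothetical head counts: 32 query heads sharing 8 KV heads (groups of 4).
print([kv_head_for_query_head(h, 32, 8) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
```

Sharing key/value heads this way shrinks the KV cache relative to full multi-head attention, which matters on memory-limited edge hardware.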

At the core of this architecture is the Linear Input-Varying (LIV) operator framework, in which weights are generated on the fly from the very input they act on. This lets convolutions, recurrences, attention, and other structured layers fall under a single, unified, input-aware framework. LFM2's convolution blocks implement double gating and short convolutions, creating linear first-order systems that converge to zero after a finite time.
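The following single-channel sketch shows the shape of a double-gated short convolution under stated assumptions: an input gate, a short causal convolution, then an output gate, with both gates computed from the input itself (the input-varying idea behind LIV). The kernel and gate weights are hypothetical toy values; the real blocks operate on multi-channel hidden states with learned projections. It also illustrates the finite-time property: the convolution's impulse response is exactly zero once the impulse has passed through the kernel window.

```python
# Single-channel sketch of a double-gated short convolution, assuming the structure
# input gate -> causal short convolution -> output gate. KERNEL, W_B, and W_C are
# hypothetical toy values, not LFM2 parameters.
from typing import List

KERNEL = [0.5, 0.3, 0.2]  # kernel[k] multiplies the (gated) input k steps back
W_B, W_C = 0.8, 1.5       # hypothetical projections producing the input-dependent gates


def causal_short_conv(u: List[float], kernel: List[float]) -> List[float]:
    """y[t] = sum_k kernel[k] * u[t - k], treating positions before t = 0 as zero."""
    out = []
    for t in range(len(u)):
        acc = 0.0
        for k, w in enumerate(kernel):
            if t - k >= 0:
                acc += w * u[t - k]
        out.append(acc)
    return out


def double_gated_short_conv(x: List[float]) -> List[float]:
    # Input-varying gates: computed from the same input they modulate.
    b = [W_B * v for v in x]
    c = [W_C * v for v in x]
    gated_in = [bi * xi for bi, xi in zip(b, x)]       # first (input) gate
    conv_out = causal_short_conv(gated_in, KERNEL)     # short causal convolution
    return [ci * yi for ci, yi in zip(c, conv_out)]    # second (output) gate


# Impulse response of the convolution is finite: zero after len(KERNEL) steps.
print(causal_short_conv([1.0, 0.0, 0.0, 0.0, 0.0], KERNEL))  # [0.5, 0.3, 0.2, 0.0, 0.0]

# Two inputs differing only at t = 0 yield identical outputs from t = len(KERNEL) on.
x1 = [1.0, 2.0, -1.0, 0.5, 3.0, 1.0]
x2 = [9.0, 2.0, -1.0, 0.5, 3.0, 1.0]
y1, y2 = double_gated_short_conv(x1), double_gated_short_conv(x2)
print([abs(a - b) for a, b in zip(y1, y2)])  # the last three entries are 0.0
```

Because each output depends only on the last `len(KERNEL)` inputs, the block needs no growing cache, in contrast to attention.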

Architecture selection relied on Liquid AI's neural architecture search engine, which was modified to evaluate language modeling capabilities beyond traditional validation loss and perplexity metrics. Instead, it employs a comprehensive suite of more than 50 internal evaluations that assess diverse capabilities, including knowledge recall, multi-hop reasoning, understanding of low-resource languages, instruction following, and tool use.

Comprehensive model collection

LFM2 is available in three strategic sizes: 350M, 700M, and 1.2B parameters, each optimized for different deployment scenarios while retaining the core efficiency benefits. All models were trained on 10 trillion tokens drawn from a carefully curated pretraining corpus of approximately 75% English, 20% multilingual content, and 5% code data sourced from the web and licensed materials.
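As a quick sanity check on the stated mix, the approximate token budget per data source works out as follows. This is a back-of-the-envelope calculation; the shares are approximate, so actual counts will differ somewhat.

```python
# Split of the 10-trillion-token pretraining budget using the approximate mix
# stated in the article (75% English, 20% multilingual, 5% code).
TOTAL_TOKENS = 10 * 10**12
MIX = {"english": 0.75, "multilingual": 0.20, "code": 0.05}

budget = {name: round(TOTAL_TOKENS * share) for name, share in MIX.items()}
for name, tokens in budget.items():
    print(f"{name:>12}: {tokens / 1e12:.1f}T tokens")
```

That is roughly 7.5T English, 2.0T multilingual, and 0.5T code tokens.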

The training methodology incorporates knowledge distillation, using the existing LFM1-7B as the teacher model, with the cross-entropy between the LFM2 student's outputs and the teacher's outputs serving as the primary training signal throughout the full 10T-token training run. The context length was extended to 32K during training, allowing the models to handle longer sequences.
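Below is a minimal sketch of the kind of objective described here: the cross-entropy between the teacher's next-token distribution and the student's, for a single position. The logit values are hypothetical. Details such as temperature, truncation of teacher outputs, or mixing with the standard next-token loss are not described in the article and are omitted.

```python
# Sketch of a distillation objective: cross-entropy H(p_teacher, p_student) for one
# token position. Logits are hypothetical toy values over a 4-token vocabulary.
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    log_total = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_total for z in logits]


def distillation_cross_entropy(student_logits: List[float], teacher_logits: List[float]) -> float:
    """H(p_teacher, p_student) = -sum_i p_teacher[i] * log p_student[i]."""
    p_teacher = softmax(teacher_logits)
    log_p_student = log_softmax(student_logits)
    return -sum(p * lq for p, lq in zip(p_teacher, log_p_student))


teacher = [2.0, 1.0, 0.1, -1.0]  # hypothetical teacher logits
student = [1.5, 1.2, 0.0, -0.5]  # hypothetical student logits
print(distillation_cross_entropy(student, teacher))
# The loss is minimized when the student matches the teacher; the minimum
# equals the entropy of the teacher's distribution.
print(distillation_cross_entropy(teacher, teacher))
```

Compared with training on one-hot next-token labels, matching the teacher's full distribution gives the student a richer signal at every position.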

Superior benchmark performance

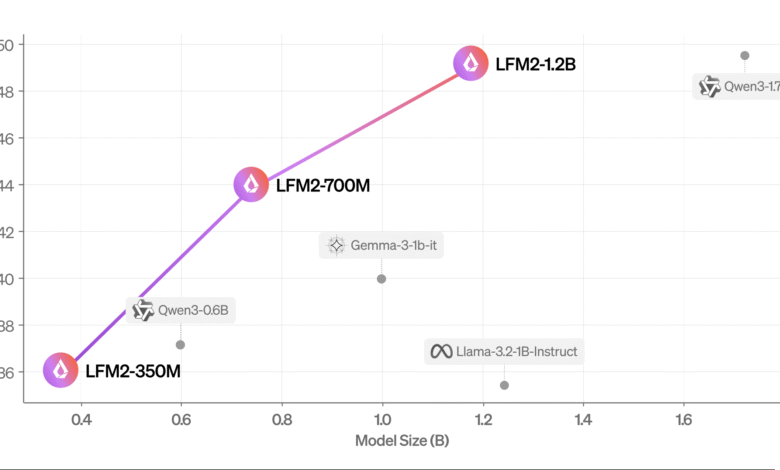

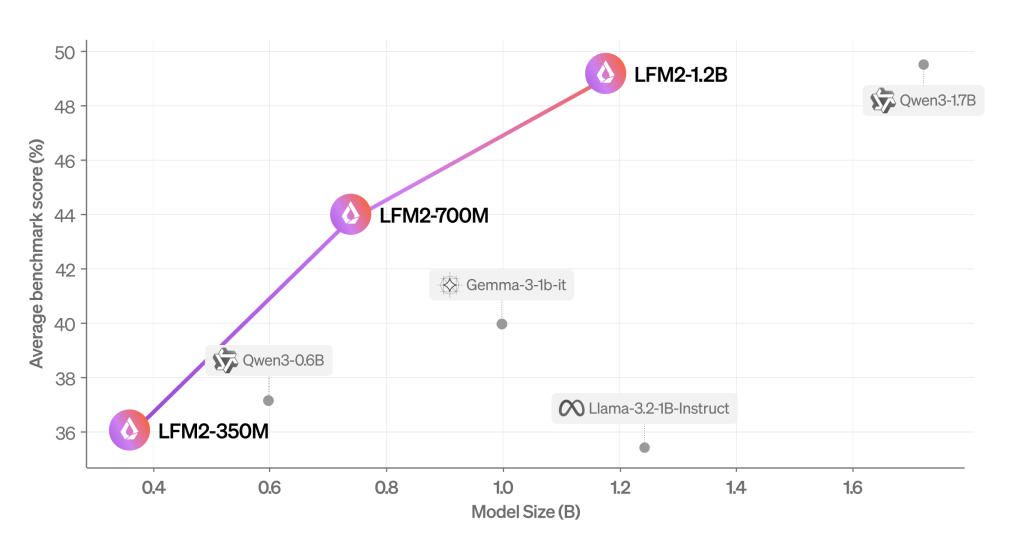

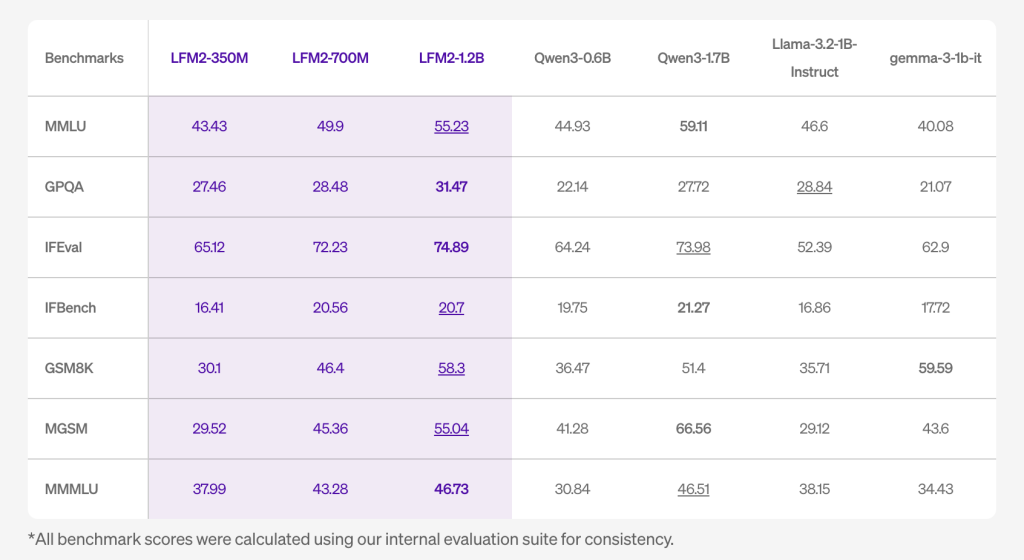

Evaluation results show that LFM2 significantly outperforms similarly sized models across multiple benchmark categories. LFM2-1.2B performs competitively with Qwen3-1.7B, even though Qwen3-1.7B has roughly 47% more parameters. Similarly, LFM2-700M outperforms Gemma 3 1B, while the smallest checkpoint, LFM2-350M, remains competitive with Qwen3-0.6B and Llama 3.2 1B.

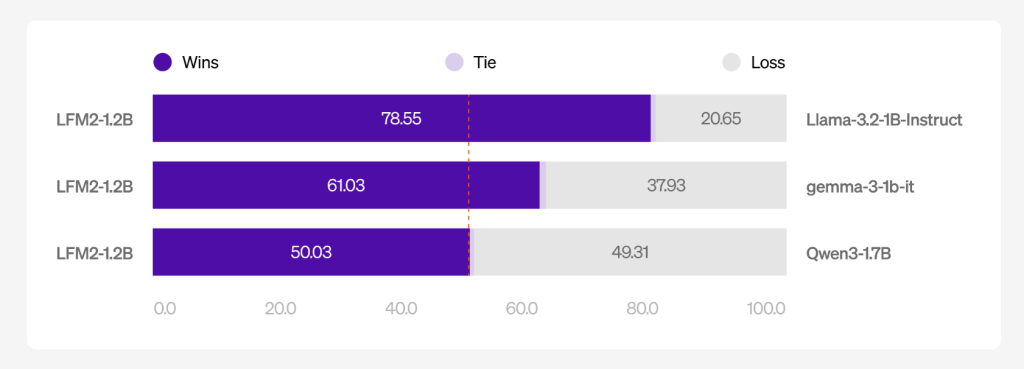

Beyond automated benchmarks, LFM2 demonstrates superior conversational capabilities in multi-turn dialogues. Using the WildChat dataset and an LLM-as-a-Judge evaluation framework, LFM2-1.2B showed significant preference advantages over Llama 3.2 1B Instruct and Gemma 3 1B while matching Qwen3-1.7B's performance, despite being smaller and faster.
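Pairwise LLM-as-a-judge comparisons are typically summarized as a win (preference) rate. The sketch below assumes each comparison yields "win", "loss", or "tie" for model A against model B and counts a tie as half a win. Both the verdict list and the tie convention are illustrative assumptions, not the exact scoring used for LFM2.

```python
# Sketch: turning pairwise judge verdicts into a preference rate for model A vs model B.
# The verdicts are hypothetical; counting a tie as half a win is an assumed convention.
from collections import Counter
from typing import Iterable

VALID_VERDICTS = {"win", "loss", "tie"}


def win_rate(verdicts: Iterable[str]) -> float:
    verdicts = list(verdicts)
    if not verdicts:
        raise ValueError("no verdicts to score")
    unknown = set(verdicts) - VALID_VERDICTS
    if unknown:
        raise ValueError(f"unexpected verdicts: {sorted(unknown)}")
    counts = Counter(verdicts)
    return (counts["win"] + 0.5 * counts["tie"]) / len(verdicts)


verdicts = ["win", "win", "tie", "loss", "win", "tie"]  # hypothetical judge outputs
print(f"{win_rate(verdicts):.1%}")  # 66.7%
```

A rate above 50% means the judge preferred model A more often than model B; with ties counted as half, two evenly matched models land near 50%.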

Deployment optimization

The models excel in real-world deployment scenarios, having been exported to multiple inference frameworks, including PyTorch's ExecuTorch and the open-source llama.cpp library. Testing on target hardware, including the Samsung Galaxy S24 Ultra and AMD Ryzen platforms, shows that LFM2 dominates the Pareto frontier for both prefill and decode inference speed relative to model size.
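For readers unfamiliar with the term, this sketch shows what it means for a model to sit on the Pareto frontier of speed versus size: no other model is both no larger and at least as fast, with at least one of the two strictly better. The model names and throughput numbers are hypothetical, not measurements from the LFM2 evaluation.

```python
# Sketch: Pareto frontier for (model size: lower is better, throughput: higher is better).
# All names and numbers are hypothetical.
from typing import List, NamedTuple


class Point(NamedTuple):
    name: str
    params_b: float      # model size in billions of parameters
    tokens_per_s: float  # prefill or decode throughput


def dominates(a: Point, b: Point) -> bool:
    """a dominates b if it is no larger and no slower, and strictly better in one."""
    no_worse = a.params_b <= b.params_b and a.tokens_per_s >= b.tokens_per_s
    strictly_better = a.params_b < b.params_b or a.tokens_per_s > b.tokens_per_s
    return no_worse and strictly_better


def pareto_frontier(points: List[Point]) -> List[Point]:
    # A point never dominates itself (no strict improvement), so no self-check is needed.
    return [p for p in points if not any(dominates(q, p) for q in points)]


points = [
    Point("model_a", 0.35, 100.0),
    Point("model_b", 0.60, 90.0),   # model_a is smaller and faster
    Point("model_c", 0.70, 130.0),
    Point("model_d", 1.00, 120.0),  # model_c is smaller and faster
    Point("model_e", 1.20, 140.0),
]
print([p.name for p in pareto_frontier(points)])  # ['model_a', 'model_c', 'model_e']
```

A model family that "dominates the frontier" is one whose points make up most or all of this non-dominated set.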

The strong CPU performance translates effectively to accelerators such as GPUs and NPUs after kernel optimization, making LFM2 suitable for a wide range of hardware configurations. This flexibility is crucial for the diverse ecosystem of edge devices that require on-device AI capabilities.

Conclusion

The release of LFM2 addresses a critical gap in the AI deployment landscape, where the shift from cloud-based to edge-based inference is accelerating. By enabling millisecond latency, offline operation, and data-sovereign privacy, LFM2 unlocks new possibilities for AI integration across consumer electronics, robotics, smart appliances, finance, e-commerce, and education.

The technical achievements represented in LFM2 signal the maturation of edge AI technology, in which the trade-off between model capability and deployment efficiency is being successfully optimized. As enterprises pivot from cloud LLMs to cost-efficient, fast, private, and on-premises intelligence, LFM2 positions itself as a foundational technology for the next generation of AI-powered devices and applications.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts more than 2 million monthly views, illustrating its popularity among readers.


2025-07-14 06:48:00