Hands on with Gemini 2.5 Pro: why it might be the most useful reasoning model yet

Unfortunately for Google, the release of its latest flagship language model, Gemini 2.5 Pro, was buried under the Studio Ghibli AI image storm that sucked the air out of the AI space. Perhaps wary of its previous failed launches, Google cautiously presented it as “our most intelligent AI model” rather than following other AI labs, which introduce their new models as the best in the world.

However, hands-on experiments with real-world examples show that Gemini 2.5 Pro is genuinely impressive and may currently be the best reasoning model. This opens the way for many new applications and may put Google at the forefront of the AI race.

Long context with good coding capabilities

The standout feature of Gemini 2.5 Pro is its very long context window and output length. The model can process up to 1 million tokens (with 2 million coming soon), which makes it possible to fit multiple long documents and entire code repositories into the prompt when necessary. The model also has an output limit of 64,000 tokens, compared with about 8,000 for other Gemini models.
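
To get a feel for what those limits mean in practice, here is a minimal sketch that estimates whether a set of documents fits into the prompt. It assumes roughly four characters per token, which is a common rule of thumb and not Gemini's actual tokenizer; the function names and the `reserve` parameter are hypothetical and exist only for illustration.

```python
# Rough check of whether a set of documents fits into a context window.
# Assumption: ~4 characters per token, a rule of thumb, NOT Gemini's real tokenizer.
CONTEXT_WINDOW_TOKENS = 1_000_000  # input limit cited in the article
CHARS_PER_TOKEN = 4                # hypothetical heuristic


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text with the characters-per-token heuristic."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(documents: dict[str, str], reserve: int = 0) -> tuple[bool, int]:
    """Return (fits, estimated_total_tokens) for a mapping of name -> content.

    `reserve` is optional headroom (in tokens) kept free for instructions
    and later conversation turns; it is an illustrative knob, not an API setting.
    """
    total = sum(estimate_tokens(body) for body in documents.values())
    return total + reserve <= CONTEXT_WINDOW_TOKENS, total


if __name__ == "__main__":
    # Made-up stand-ins for real files.
    repo = {"app.py": "x" * 120_000, "README.md": "y" * 8_000}
    print(fits_in_context(repo, reserve=50_000))
```

For a real budget, the count should come from the provider's own token counter, since actual tokenization varies with language and content type.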

The long context window also allows for extended conversations, since each interaction with a reasoning model can generate tens of thousands of tokens, especially if it involves code, images and video (I have run into this problem with Claude 3.7 Sonnet, which has a 200,000-token context window).

For example, Simon Willison used Gemini 2.5 Pro to build a new feature for his website. “It crunched through my entire codebase and figured out all of the places I needed to change – 18 files in total, as you can see in the resulting PR. The whole project took about 45 minutes from start to finish – averaging less than three minutes per file,” Willison said in a blog post.

Impressive multimodal reasoning

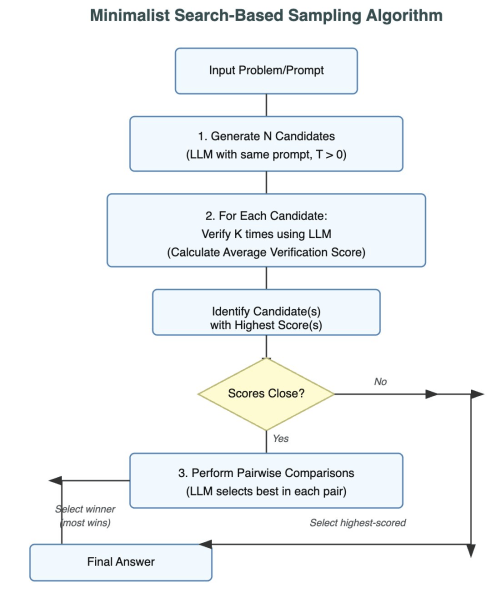

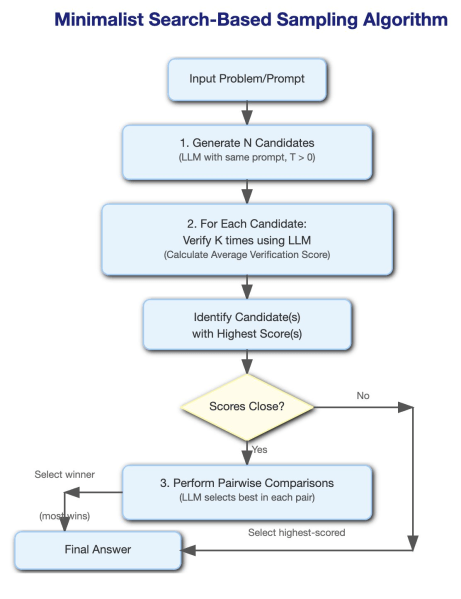

Gemini 2.5 Pro also has impressive reasoning capabilities over unstructured text, images and video. For example, I gave it the text of my recent article on sampling-based search and prompted it to create an SVG graphic depicting the algorithm described in the text. Gemini 2.5 Pro correctly extracted the key information from the article and created a flowchart of the sampling and search process, even getting the conditional steps right. (For reference, the same task took multiple interactions with Claude 3.7 Sonnet, and I eventually hit the token limit.)

The rendered image had some visual errors (misplaced arrowheads). It could use a facelift, so I next tested Gemini 2.5 Pro with a multimodal prompt, giving it a screenshot of the rendered SVG file alongside the code and prompting it to improve it. The results were impressive. It corrected the arrowheads and improved the visual quality of the diagram.

Other users have had similar experiences with multimodal prompts. For example, in their testing, DataCamp replicated the runner game example presented in the Google blog, then provided the code and a video recording of the game to Gemini 2.5 Pro and prompted it to make some changes to the game's code. The model was able to reason over the visuals, find the part of the code that needed to change, and make the correct modifications.

However, it is worth noting that, like other generative models, Gemini 2.5 Pro is prone to mistakes such as modifying unrelated files and code segments. The more precise your instructions, the lower the risk of incorrect changes.

Data analysis with a useful reasoning trace

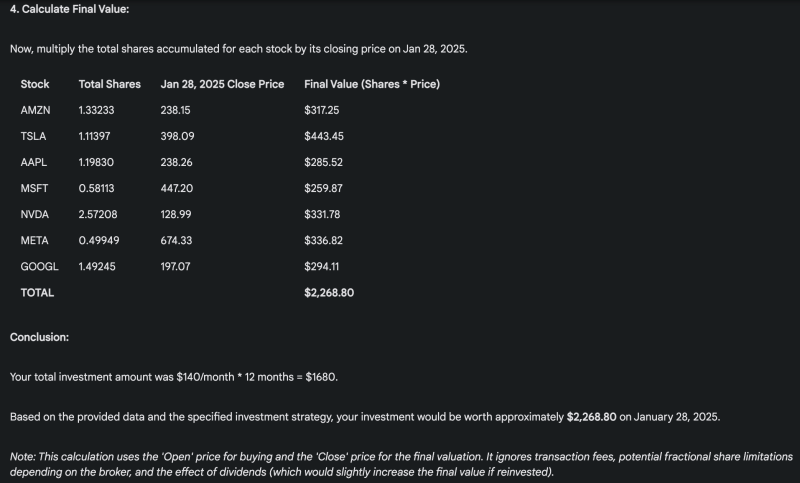

Finally, I tested Gemini 2.5 Pro on my classic messy data analysis test for reasoning models. I gave it a file containing a mix of plain text and raw HTML data that I had copied and pasted from different stock history pages on Yahoo! Finance. Then I prompted it to calculate the value of a portfolio that would invest $140 at the beginning of each month, spread evenly across the Magnificent 7 stocks, from January 2024 to the latest date in the file.

The model correctly identified the stocks it had to pick from the file (Amazon, Apple, Nvidia, Microsoft, Tesla, Alphabet and Meta), extracted the financial information from the HTML data, and calculated the value of each investment based on the stock price at the beginning of each month. It responded with a well-formatted table showing the stock and portfolio values for each month and provided a breakdown of how much the entire investment was worth at the end of the period.
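
The arithmetic behind this test is simple dollar-cost averaging, and a minimal sketch makes it concrete. The code below is my own illustration, not the model's output: the ticker symbols, the function name and all prices are assumptions, and the portfolio is valued at the last month's prices as a stand-in for "the latest date in the file."

```python
# Dollar-cost averaging sketch: $140 per month split evenly across seven stocks.
# Ticker symbols are assumed (Alphabet is taken as GOOGL); prices are made up.
MONTHLY_BUDGET = 140.0
TICKERS = ["AMZN", "AAPL", "NVDA", "MSFT", "TSLA", "GOOGL", "META"]


def portfolio_value(prices_by_month: list[dict[str, float]],
                    monthly_budget: float = MONTHLY_BUDGET) -> float:
    """Value, at the final month's prices, of equal monthly purchases in every ticker."""
    per_stock = monthly_budget / len(TICKERS)  # $20 per stock each month
    shares = {ticker: 0.0 for ticker in TICKERS}
    for prices in prices_by_month:
        for ticker in TICKERS:
            # Buy fractional shares at that month's opening price.
            shares[ticker] += per_stock / prices[ticker]
    latest = prices_by_month[-1]
    return sum(shares[ticker] * latest[ticker] for ticker in TICKERS)


if __name__ == "__main__":
    # Hypothetical data: every stock costs $10 in month one and $20 in month two.
    months = [{t: 10.0 for t in TICKERS}, {t: 20.0 for t in TICKERS}]
    print(portfolio_value(months))  # 420.0 on $280 invested
```

The hard part of the test is not this calculation but pulling the right monthly prices out of messy HTML, which is where the model's extraction step matters.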

More importantly, I found the reasoning trace very useful. It is not clear whether Google reveals the raw chain-of-thought (CoT) tokens for Gemini 2.5 Pro, but the reasoning trace is very detailed. You can clearly see how the model reasons over the data, extracts different pieces of information, and calculates the results before generating the answer. This can help troubleshoot the model's behavior and steer it in the right direction when it makes mistakes.

Enterprise-grade reasoning?

One concern about Gemini 2.5 Pro is that it is only available in reasoning mode, which means the model always goes through the “thinking” process, even for very simple prompts that could be answered directly.

Gemini 2.5 Pro is currently in preview release. Once the full model is released and pricing information is available, we will have a better understanding of how much it will cost to build enterprise applications on the model. However, as the cost of inference continues to fall, we can expect it to become practical at scale.

Gemini 2.5 Pro may not have had the splashiest debut, but its capabilities demand attention. Its massive context window, impressive multimodal reasoning and detailed reasoning chain offer tangible advantages for complex enterprise workloads, from codebase refactoring to nuanced data analysis.

2025-03-28 19:39:00