NVIDIA AI Releases OpenReasoning-Nemotron: A Suite of Reasoning-Enhanced LLMs Distilled from DeepSeek R1 0528

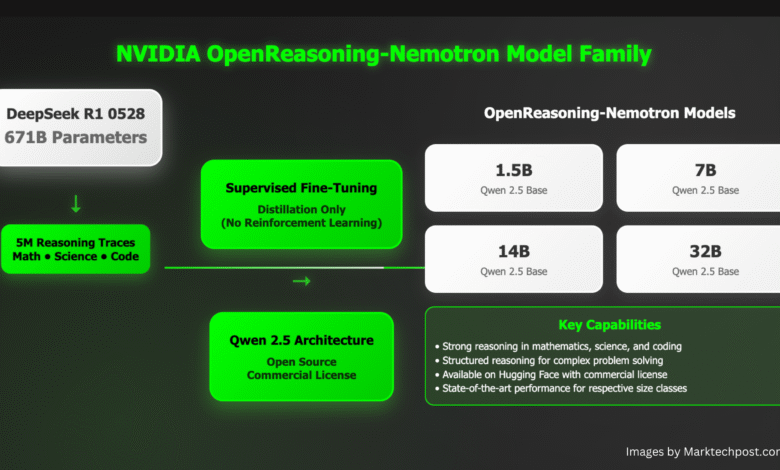

NVIDIA AI has introduced OpenReasoning-Nemotron, a family of large language models (LLMs) designed to excel at complex reasoning tasks across mathematics, science, and code. The suite, comprising 1.5B, 7B, 14B, and 32B parameter versions, was distilled from the 671B DeepSeek R1 0528 model, capturing its high-level reasoning capabilities in significantly smaller and more efficient models.

The release positions NVIDIA as a leading contributor to the open-source LLM ecosystem, delivering models that push state-of-the-art (SOTA) performance while remaining commercially permissive and widely accessible.

Model Overview and Architecture

✅ Distillation from DeepSeek R1 0528 (671B)

At the heart of OpenReasoning-Nemotron lies a distillation strategy that transfers the reasoning ability of DeepSeek R1, a 671B-parameter model, into smaller architectures. The process prioritizes reasoning generalization over raw token prediction, enabling compact models to perform effectively on structured, high-cognition tasks.

The distillation dataset emphasizes mathematics, science, and programming languages, aligning model capabilities with key reasoning domains.

📊 Model Variants and Specs

| Model Name | Parameters | Intended Use | Hugging Face Page |
|---|---|---|---|
| OpenReasoning-Nemotron-1.5B | 1.5B | Entry-level reasoning and inference | Link |
| OpenReasoning-Nemotron-7B | 7B | Mid-scale reasoning, good for code/math | Link |
| OpenReasoning-Nemotron-14B | 14B | Advanced reasoning capabilities | Link |
| OpenReasoning-Nemotron-32B | 32B | Near-frontier-model performance in logic-intensive tasks | Link |

All models are compatible with transformer architectures, support FP16/INT8 quantization, and are optimized for NVIDIA GPUs and the NeMo framework.
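To give a rough sense of what FP16 versus INT8 means at deployment time, the sketch below estimates weight-only memory from the nominal parameter counts in the table. It assumes those nominal sizes are exact and ignores activations, KV cache, and framework overhead, so the figures are lower bounds rather than measured requirements. The function and dictionary names are illustrative only.

```python
# Nominal parameter counts taken from the model table (assumed exact here).
PARAMS = {"1.5B": 1.5e9, "7B": 7e9, "14B": 14e9, "32B": 32e9}

# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"fp16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Weight-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, n in PARAMS.items():
        fp16 = weight_memory_gb(n, "fp16")
        int8 = weight_memory_gb(n, "int8")
        print(f"{name}: fp16 ~{fp16:.1f} GB, int8 ~{int8:.1f} GB")
```

Under these assumptions the 32B model needs about 64 GB for FP16 weights alone and about half that at INT8, which is the main practical argument for quantizing the larger variants.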

Performance Benchmarks

These models set new state-of-the-art pass@1 scores for their size class across multiple reasoning benchmarks:

| Model | GPQA | MMLU-PRO | HLE | LiveCodeBench | SciCode | AIME24 | AIME25 | HMMT Feb 2025 |
|---|---|---|---|---|---|---|---|---|
| 1.5B | 31.6 | 47.5 | 5.5 | 28.6 | 2.2 | 55.5 | 45.6 | 31.5 |
| 7B | 61.1 | 71.9 | 8.3 | 63.3 | 16.2 | 84.7 | 78.2 | 63.5 |
| 14B | 71.6 | 77.5 | 10.1 | 67.8 | 23.5 | 87.8 | 82.0 | 71.2 |
| 32B | 73.1 | 80.0 | 11.9 | 70.2 | 28.5 | 89.2 | 84.0 | 73.8 |

All quoted scores are pass@1 without GenSelect.
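For readers unfamiliar with the metric, pass@1 is the probability that a single sampled answer is correct. A common way to estimate pass@k from n samples per problem, of which c are correct, is the unbiased estimator 1 - C(n-c, k) / C(n, k). The sketch below implements that estimator; the article does not say how many samples were drawn per problem for the table above, so the sample counts in the example are made up.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples drawn, c: samples that were correct, k: attempt budget.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples are wrong,
    # in which case every size-k subset contains a correct answer.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per problem."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Three hypothetical problems, four samples each.
    toy_results = [(4, 4), (4, 2), (4, 0)]
    print(benchmark_pass_at_k(toy_results, k=1))
```

For k = 1 the estimator reduces to c / n, the plain fraction of correct samples, averaged over problems.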

🔍 GenSelect (Heavy Mode)

Using generative selection with 64 candidates ("GenSelect"), performance improves further, especially at 32B:

- 32B achieves: AIME24 89.2 → 93.3, AIME25 84.0 → 90.0, HMMT 73.8 → 96.7, LiveCodeBench 70.2 → 75.3.

This demonstrates strong emergent reasoning performance at scale.
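The article describes GenSelect only as generative selection over 64 candidates, so the following is a minimal sketch of that general idea rather than NVIDIA's implementation: sample several candidate solutions, then ask a selector (in practice the model itself, prompted with the problem and all candidates) to return the index of the best one. The `generate` and `select` callables are hypothetical stand-ins for real model calls, and the fallback to the first candidate is an assumption of this sketch.

```python
from itertools import cycle
from typing import Callable, List


def gen_select(problem: str,
               generate: Callable[[str], str],
               select: Callable[[str, List[str]], int],
               n_candidates: int = 64) -> str:
    """Sample n_candidates solutions, then let a selector pick one."""
    if n_candidates < 1:
        raise ValueError("n_candidates must be at least 1")
    candidates = [generate(problem) for _ in range(n_candidates)]
    choice = select(problem, candidates)
    # Fall back to the first candidate if the selector returns a bad index.
    if not 0 <= choice < len(candidates):
        choice = 0
    return candidates[choice]


# Deterministic stubs standing in for model calls (illustration only).
_pool = cycle(["x = 41", "x = 42", "x = 40"])


def stub_generate(problem: str) -> str:
    return next(_pool)


def stub_select(problem: str, candidates: List[str]) -> int:
    # A real selector would prompt the model with the problem and candidates.
    return next((i for i, c in enumerate(candidates) if c.endswith("42")), 0)


answer = gen_select("Solve x + 0 = 42", stub_generate, stub_select, n_candidates=4)
print(answer)
```

The cost of this mode is roughly one generation per candidate plus the selection pass, which is why the article labels it a heavy mode.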

Training Data and Reasoning Specialization

The training corpus is a distilled, high-quality subset of the DeepSeek R1 0528 dataset. Key features include:

- Heavily curated reasoning data from math, science, and CS disciplines.

- Prompt-engineered fine-tuning designed to reinforce multi-step thought chains.

- Emphasis on logical consistency, constraint satisfaction, and symbolic reasoning.

This deliberate curation ensures strong alignment with real-world reasoning problems found in both academia and applied ML domains.
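Since the FAQ at the end of this article states that the models are trained with supervised fine-tuning on teacher-generated reasoning traces, it may help to see how one (prompt, trace) pair is commonly turned into a training example. The sketch below concatenates prompt and trace and masks the prompt positions so that loss is computed only on the trace. The whitespace tokenizer is a toy, and the -100 mask value follows the default `ignore_index` of PyTorch's cross-entropy loss; none of this is taken from NVIDIA's actual pipeline.

```python
from typing import Dict, List

IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss


def toy_tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    """Whitespace tokenizer that grows the vocabulary on the fly (toy only)."""
    return [vocab.setdefault(tok, len(vocab)) for tok in text.split()]


def build_sft_example(prompt: str, trace: str,
                      vocab: Dict[str, int]) -> Dict[str, List[int]]:
    """Build input_ids and labels for one distillation example.

    Prompt positions are masked so only the teacher's trace is learned.
    """
    prompt_ids = toy_tokenize(prompt, vocab)
    trace_ids = toy_tokenize(trace, vocab)
    return {
        "input_ids": prompt_ids + trace_ids,
        "labels": [IGNORE_INDEX] * len(prompt_ids) + trace_ids,
    }


if __name__ == "__main__":
    vocab: Dict[str, int] = {}
    example = build_sft_example("What is 2 + 2 ?", "2 + 2 = 4", vocab)
    print(example["input_ids"])
    print(example["labels"])
```

Masking the prompt keeps the student from spending capacity on reproducing questions and focuses the gradient on the reasoning trace itself.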

Open and Ecosystem Integration

All four OpenReasoning-Nemotron models are released under an open and commercially permissive license, with model cards, evaluation scripts, and inference-ready weights available on Hugging Face.

These models are designed to plug into the NVIDIA NeMo framework and support the TensorRT-LLM, ONNX, and Hugging Face Transformers toolchains, facilitating rapid deployment in production and research settings.
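As a rough illustration of the Hugging Face Transformers path, the sketch below loads one checkpoint in FP16 and generates an answer. It assumes the checkpoints load through the standard `AutoModelForCausalLM` and `AutoTokenizer` classes, that they ship a chat template, and that the repository id follows the pattern `nvidia/OpenReasoning-Nemotron-<size>`; the article's links were not preserved, so check the id against the model card before use. `device_map="auto"` additionally requires the `accelerate` package.

```python
SIZES = ("1.5B", "7B", "14B", "32B")


def repo_id(size: str) -> str:
    """Assumed Hugging Face repo id for a given model size (unverified)."""
    if size not in SIZES:
        raise ValueError(f"size must be one of {SIZES}")
    return f"nvidia/OpenReasoning-Nemotron-{size}"


def generate_answer(question: str, size: str = "1.5B",
                    max_new_tokens: int = 1024) -> str:
    """Load the model in FP16 and return its reply to a single question."""
    # Imported lazily so repo_id() works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    name = repo_id(size)
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForCausalLM.from_pretrained(
        name, torch_dtype=torch.float16, device_map="auto"
    )
    messages = [{"role": "user", "content": question}]
    inputs = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True,
        return_tensors="pt", return_dict=True,
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Strip the prompt tokens so only the newly generated text is decoded.
    new_tokens = output[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

A call such as `generate_answer("What is the sum of the first 10 primes?")` would download the weights on first use. Reasoning models tend to produce long traces, so the token budget may need to be raised well above the default used here.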

Key Use Cases

- Math tutors and theorem solvers

- Scientific QA agents and medical reasoning systems

- Code generation and debugging assistants

- Chain-of-thought multi-hop question answering

- Synthetic data generation for structured domains

Conclusion

NVIDIA's OpenReasoning-Nemotron models offer a pragmatic, open-source path toward scaling reasoning ability without frontier-scale compute costs. By distilling from the 671B DeepSeek R1 and targeting high-leverage reasoning domains, these models deliver a strong balance of accuracy, efficiency, and accessibility.

For developers, researchers, and enterprises working on logic-intensive AI applications, OpenReasoning-Nemotron provides a compelling foundation, free from the trade-offs that often accompany proprietary or overgeneralized models.

🔍 Frequently Asked Questions (FAQs)

Q1. What benchmarks are supported?

GPQA, MMLU-PRO, HLE, LiveCodeBench, SciCode, AIME 2024/25, HMMT Feb 2025 (pass@1).

Q2. How much data was used?

A distillation corpus of 5 million reasoning traces across domains, generated by DeepSeek-R1-0528.

Q3. Is reinforcement learning used?

No. The models are trained purely via supervised fine-tuning (SFT), preserving efficiency while enabling future RL research.

Q4. Can I scale reasoning with GenSelect?

Yes. Using GenSelect significantly boosts performance: the 32B model jumps from 73.8 to 96.7 on HMMT with 64 candidates.

Check out the Technical details. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views.


2025-07-20 04:38:00