OpenAI Halts AI-Powered Sentry Gun Project


OpenAI has taken a bold step by halting the development of an AI-powered sentry gun, a decision that resonates deeply with its mission of ensuring the safe and ethical use of artificial intelligence. This controversial project sparked widespread discussion, and amid growing concerns about the potential misuse of AI in weapons, OpenAI has taken a firm stance. But what led to this important decision, and what does it mean for the future of AI? Let's break it down.


The AI-powered sentry rifle: What was it all about?

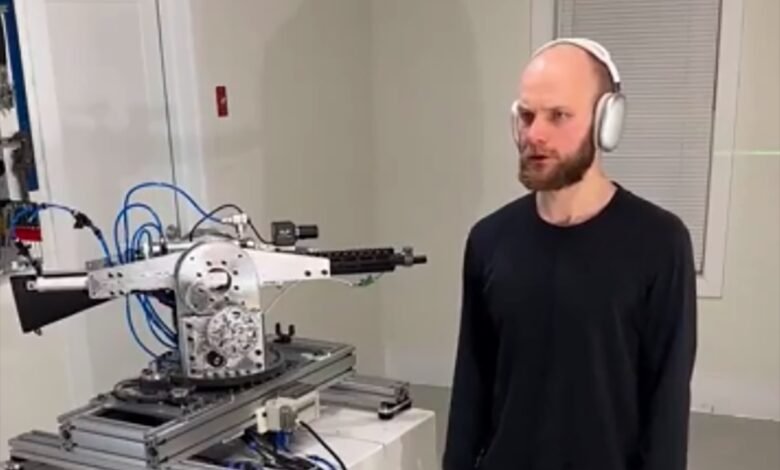

The AI-powered sentry rifle was an autonomous system that used OpenAI's advanced language model, ChatGPT, as its core processing unit. By leveraging ChatGPT's capabilities, the sentry gun could make real-time decisions, such as identifying threats, distinguishing between targets, and responding accordingly. While the concept showcased the potential of combining robotics and AI, it raised an important question: should AI be weaponized?

The developers claimed that the sentry gun was intended for security purposes, such as guarding sensitive areas. In theory, such a setup could reduce human involvement in dangerous situations and lower risks. However, the project's reliance on AI decision-making in life-and-death scenarios raised serious ethical concerns across the technology and defense communities.

Why did OpenAI pull the plug on the project?

OpenAI's mission has always been rooted in ensuring that artificial intelligence benefits humanity as a whole. Integrating AI into weapons systems directly contradicts this philosophy. As the project gained traction, it drew criticism from researchers, policymakers, and even some within OpenAI. Ethical dilemmas, potential security risks, and fear of misuse were at the forefront of these discussions.

The inherent challenges of transparency and accountability in AI algorithms also played a significant role. Without a clear framework to govern the sentry rifle's decision-making process, there was a risk of unintended actions or misuse. OpenAI concluded that the best way to uphold its values was to end the project and reaffirm its stance against AI weaponization.


The ethical implications of weaponizing AI

The moral questions surrounding the use of AI in weapons run deep. Critics argue that handing lethal decision-making to autonomous machines erodes accountability. Who is responsible when an AI system misidentifies a target or causes unintended harm? The human element, essential to moral decision-making, is stripped away in such systems.

There is also a fear that AI could trigger an arms race, similar to nuclear proliferation during the twentieth century. Countries and private organizations may rush to develop increasingly advanced AI weapons, leading to instability and unforeseen consequences. OpenAI's decision to halt this project underscores the need for industry leaders to take a responsible approach to building AI.


The role of ChatGPT in the controversy

OpenAI's ChatGPT is renowned for its advances in natural language processing, which have set the standard for human-AI interaction. It was originally designed to help with tasks such as writing, coding, and problem solving, but its capabilities made it a candidate for more sophisticated applications, such as the sentry gun project.

The use of ChatGPT in a weapon sparked outrage, as many believed it was an inappropriate application of the technology. People questioned the limits of AI's adaptability and whether applying it to tasks that involve moral judgment crossed a line. The controversy highlighted the need to define boundaries for AI use, especially where human life is at stake.


Public reaction and industry impact

Public response to OpenAI's decision was largely positive, with many praising the company for upholding its ethical responsibilities. Advocacy groups and researchers applauded the move, noting that it sets an example for other technology companies grappling with similar ethical dilemmas. By placing ethics above profit, OpenAI reinforced its reputation as a leader committed to responsible AI development.

However, some critics questioned why the project was pursued in the first place. They argue that it reflected a lapse in judgment, since the concept clashed with OpenAI's stated principles. The incident is a reminder for companies to ensure that their projects align with their core values from the outset.

Shaping the future of AI development

By ending this project, OpenAI sent a strong message about the boundaries that must exist in AI development. It stressed the importance of focusing on applications that enhance human well-being rather than those that can cause harm. This decision could inspire policymakers, governments, and research institutions to take a stand against weaponized AI.

Moving forward, cross-industry collaboration will be crucial in setting ethical standards for AI development. Transparent communication, stakeholder engagement, and continuous monitoring can help ensure that AI technologies are used responsibly. OpenAI's decision is a step in the right direction, paving the way for a more ethical approach to technological progress.

OpenAI's commitment to ethical AI policies

OpenAI has a history of advocating for responsible AI development. It routinely emphasizes the importance of preventing misuse and prioritizing safety when developing powerful AI systems. This includes implementing guidelines, conducting research into ethical AI practices, and engaging with policymakers to shape robust frameworks for AI governance.

Halting the sentry gun project reinforces OpenAI's dedication to its mission. It shows that the organization is willing to make difficult decisions to stay aligned with its values. As AI continues to develop, these commitments will be decisive in shaping technology's role in society and ensuring that it remains a force for good.


Conclusion: A crucial moment in AI ethics

OpenAI's decision to stop the development of the AI-powered sentry gun marks a crucial moment in the conversation about AI ethics and responsibility. The choice highlights the challenges and complexities of governing disruptive technologies and sets an example for other organizations to follow.

As AI becomes more integrated into daily life, decisions like this one underscore the need for deliberate innovation. By putting ethics and accountability first, OpenAI affirmed its commitment to building technology that benefits humanity, an ideal that should guide the future of AI development.

2025-01-11 18:50:00