OpenAI’s Red Team plan: Make ChatGPT Agent an AI fortress

In case you missed it, OpenAI yesterday debuted a powerful new feature for ChatGPT and, with it, a host of new security risks and ramifications.

Called the “ChatGPT agent,” this new feature is an optional mode that paying ChatGPT subscribers can engage by clicking “Tools” in the prompt entry box and selecting “agent mode.” At that point, they can ask ChatGPT to log into their email and other web accounts; write and respond to emails; download, modify and create files; and do a host of other tasks on their behalf, autonomously, much like a real person using a computer with their login credentials.

This also requires the user to trust the ChatGPT agent not to do anything problematic or nefarious, or to leak their data and sensitive information. It also poses greater risks for the user and their employer than regular ChatGPT, which cannot log into web accounts or modify files directly.

“We’ve activated our strongest safeguards for ChatGPT Agent. It’s the first model we’ve classified as High capability in biology and chemistry under our Preparedness Framework. Here’s why, and what we’re doing to keep it safe.”

So how did OpenAI handle all these security issues?

The red team’s mission

According to OpenAI’s ChatGPT agent system card, the “red team” the company employed to test the feature faced a challenging mission: specifically, 16 PhD security researchers were given 40 hours to test it.

Through systematic testing, the red team discovered seven universal exploits that could compromise the system, revealing critical vulnerabilities in how AI agents handle real-world interactions.

What followed was extensive security testing, much of it predicated on red teaming. The Red Teaming Network submitted 110 attacks, from prompt injections to attempts to extract biological information. Sixteen exceeded internal risk thresholds. Each finding gave OpenAI’s engineers the insights they needed to get fixes written and deployed before launch.

The results published in the system card speak for themselves. ChatGPT Agent emerged with significant security improvements, including 95% performance against visual browser irrelevant-instruction attacks, along with biological and chemical safeguards.

Red teams exposed seven universal exploits

OpenAI’s Red Teaming Network comprised 16 researchers with biosafety-relevant PhDs who together submitted 110 attack attempts during the testing period. Sixteen exceeded internal risk thresholds, revealing fundamental vulnerabilities in how AI agents handle real-world interactions. But the real breakthrough came from UK AISI’s unprecedented access to ChatGPT Agent’s internal reasoning chains and policy text. Admittedly, that is intelligence ordinary attackers would never possess.

Over four testing rounds, UK AISI forced through seven universal exploits that had the potential to compromise any conversation:

Attack vectors that forced OpenAI’s hand

| Attack type | Success rate (pre-fix) | Target | Impact |
| --- | --- | --- | --- |
| Visual browser hidden instructions | 33% | Web pages | Active data exfiltration |
| Google Drive exploitation | Not disclosed | Cloud documents | Forced document leaks |
| Multi-step chain attacks | Variable | Cross-site actions | Complete session compromise |
| Biological information extraction | 16 submissions exceeded thresholds | Dangerous knowledge | Potential weaponization |

FAR.AI’s assessment was openly critical of OpenAI’s approach. Although its 40 hours of testing revealed only three partial vulnerabilities, the researchers found that current safety mechanisms rely heavily on monitoring during reasoning and tool use, which they considered a potential single point of failure if compromised.

How red teaming helped turn ChatGPT vulnerabilities into a fortress

OpenAI’s response to the red team’s results redefined entire segments of the ChatGPT agent architecture. One of the many initiatives undertaken was building a dual-layer inspection architecture that monitors 100% of production traffic in real time, achieving these measurable improvements:

Security improvements after red team discoveries

| Defense metric | Previous models | ChatGPT Agent | Improvement |
| --- | --- | --- | --- |
| Irrelevant instructions (visual browser) | 82% | 95% | +13% |
| In-context data exfiltration | 75% | 78% | +3% |
| Active data exfiltration | 58% | 67% | +9% |
| System reliability | Sampling-based | 100% coverage | Complete monitoring |
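As a quick check on the table, the improvement column reads as the difference between the two defense rates in percentage points rather than a relative change. A minimal Python sketch, with the figures copied from the table and the names chosen purely for illustration:

```python
# Defense rates in percent, copied from the table: (previous models, ChatGPT Agent).
# The dictionary name and keys are illustrative labels, not OpenAI terminology.
defense_rates = {
    "irrelevant_instructions_visual_browser": (82, 95),
    "in_context_data_exfiltration": (75, 78),
    "active_data_exfiltration": (58, 67),
}


def improvement_points(before: int, after: int) -> int:
    """Absolute improvement in percentage points."""
    return after - before


improvements = {
    name: improvement_points(before, after)
    for name, (before, after) in defense_rates.items()
}

for name, delta in improvements.items():
    print(f"{name}: +{delta} points")
```

Run as written, this reproduces the +13, +3 and +9 figures in the table.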

The architecture works like this:

- First tier: A fast classifier with 96% recall flags suspicious content

- Second tier: A reasoning model with 84% recall analyzes the flagged content
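The two-tier flow can be sketched roughly as follows. This is an illustration only, not OpenAI’s implementation: the function names, the keyword heuristic standing in for the fast classifier and the stubbed second-tier check are all assumptions made for the example. The one idea taken from the text is the ordering: a cheap first pass sees every interaction, and only what it flags goes on to the slower second pass.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Verdict:
    text: str
    flagged: bool    # result of the first tier
    escalated: bool  # result of the second tier (False if never flagged)


# Hypothetical stand-in for the fast first-tier classifier: a keyword check.
SUSPICIOUS_MARKERS = ("ignore previous instructions", "send this file to")


def fast_classifier(text: str) -> bool:
    lowered = text.lower()
    return any(marker in lowered for marker in SUSPICIOUS_MARKERS)


# Hypothetical stand-in for the second-tier reasoning model.
def reasoning_monitor(text: str) -> bool:
    return "send this file to" in text.lower()


def monitor(
    interactions: Iterable[str],
    tier1: Callable[[str], bool] = fast_classifier,
    tier2: Callable[[str], bool] = reasoning_monitor,
) -> List[Verdict]:
    """Run every interaction through tier 1; send only flagged ones to tier 2."""
    verdicts = []
    for text in interactions:
        flagged = tier1(text)
        escalated = tier2(text) if flagged else False
        verdicts.append(Verdict(text, flagged, escalated))
    return verdicts


def combined_recall(tier1_recall: float, tier2_recall: float) -> float:
    """End-to-end recall, ASSUMING the two tiers miss independently."""
    return tier1_recall * tier2_recall
```

Under the independence assumption, `combined_recall(0.96, 0.84)` comes to roughly 0.81. The article does not report an end-to-end figure, so treat that number as a back-of-the-envelope bound rather than a published result.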

But the technical defenses tell only part of the story. OpenAI made difficult security choices that acknowledge some AI operations require significant restrictions for safe autonomous execution.

Based on the vulnerabilities discovered, OpenAI implemented the following countermeasures across its model:

- Watch mode activation: When ChatGPT Agent accesses sensitive contexts such as banking or email accounts, the system freezes all activity if the user navigates away. This is a direct response to the data exfiltration attempts discovered during testing.

- Memory features disabled: Although it is a core function, memory is completely disabled at launch to prevent the incremental data leakage attacks that red teamers demonstrated.

- Terminal restrictions: Network access is limited to GET requests only, blocking the command execution vulnerabilities that researchers exploited.

- Rapid remediation protocol: A new system that patches vulnerabilities within hours of discovery. It was developed after red teamers showed how quickly exploits could spread.

During pre-launch testing alone, this system identified and resolved 16 critical vulnerabilities that red teamers had discovered.
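Two of the countermeasures listed above, watch mode and GET-only network access, are simple enough to sketch as policy checks. The snippet below is a hypothetical illustration, not OpenAI’s code: the class and function names and the set of sensitive domains are assumptions for the example. It shows a session that freezes when the user leaves a sensitive context, and a network check that rejects anything other than GET.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Assumed example domains; the real set of sensitive contexts is not public.
SENSITIVE_DOMAINS = {"bank.example", "mail.example"}


class PolicyViolation(Exception):
    """Raised when an action is blocked by one of the restrictions."""


def is_sensitive(url: str) -> bool:
    """True if the URL's host is a sensitive domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return any(host == d or host.endswith("." + d) for d in SENSITIVE_DOMAINS)


@dataclass
class AgentSession:
    frozen: bool = False

    def on_user_navigated_away(self, current_url: str) -> None:
        # Watch mode: freeze only if the agent is inside a sensitive context.
        if is_sensitive(current_url):
            self.frozen = True

    def on_user_returned(self) -> None:
        self.frozen = False

    def perform(self, action: str) -> str:
        if self.frozen:
            raise PolicyViolation(f"'{action}' blocked: session is frozen")
        return f"performed: {action}"


def check_terminal_request(method: str) -> None:
    """Terminal restriction: allow GET requests only."""
    if method.upper() != "GET":
        raise PolicyViolation(f"{method} blocked: network access is GET-only")
```

In this sketch the freeze is lifted as soon as the user returns. Whether the real system resumes automatically or requires an explicit confirmation is not stated in the article.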

A wake-up call on biological risk

Red teamers revealed the potential for ChatGPT Agent to be compromised and lead to greater biological risks. Sixteen participants from the Red Teaming Network, each with a biosafety-relevant PhD, attempted to extract dangerous biological information. Their submissions revealed that the model could synthesize published literature on modifying and creating biological threats.

In response to the red teamers’ findings, OpenAI classified ChatGPT Agent as “High capability” for biological and chemical risks, not because it found definitive evidence of weaponization potential, but as a precautionary measure based on the red team’s findings. This triggered:

- Always-on safety classifiers scanning 100% of traffic

- A topical classifier

- A reasoning monitor with 84% recall for weaponization content

- A bio bug bounty for ongoing vulnerability discovery

What red teams taught OpenAI about AI security

The 110 attack submissions revealed patterns that forced fundamental changes in OpenAI’s security philosophy. They include the following:

Persistence over power: Attackers don’t need sophisticated exploits; all they need is more time. Red teamers showed how patient, incremental attacks could eventually compromise systems.

Trust boundaries are fiction: When an AI agent can access Google Drive, browse the web and execute code, traditional security perimeters dissolve. Red teamers exploited the gaps between these capabilities.

Monitoring isn’t optional: The discovery that sampling-based monitoring missed critical attacks led to the 100% coverage requirement.

Speed matters: Traditional patch cycles measured in weeks are worthless against prompt injection attacks that can spread instantly. The rapid remediation protocol patches vulnerabilities within hours.
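The case for full coverage over sampling can be made with a little arithmetic. As a hedged illustration, assume each interaction is independently selected for inspection with probability s; this is an assumption for the example, since the article does not describe how the earlier sampling worked. An attack spread across k interactions then escapes inspection entirely with probability (1 − s)^k, which reaches zero only when s = 1.

```python
def miss_probability(sampling_rate: float, attack_interactions: int) -> float:
    """Chance that none of an attack's interactions is inspected.

    Assumes each interaction is sampled independently with the same rate.
    """
    if not 0.0 <= sampling_rate <= 1.0:
        raise ValueError("sampling_rate must be between 0 and 1")
    if attack_interactions < 1:
        raise ValueError("attack_interactions must be at least 1")
    return (1.0 - sampling_rate) ** attack_interactions


# Illustrative rates only; none of these are figures from the system card.
for rate in (0.10, 0.50, 1.00):
    print(f"sampling {rate:.0%}: miss probability {miss_probability(rate, 3):.3f}")
```

For a three-step attack, the sketch prints miss probabilities of 0.729 at 10% sampling, 0.125 at 50% and 0.000 at 100%.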

OpenAI is helping to create a new security baseline for enterprise AI

For CISOs evaluating AI deployment, the red team’s discoveries establish clear requirements:

- Quantifiable protection: ChatGPT Agent’s 95% defense rate against documented attack vectors sets the industry benchmark. The nuances of the many tests and results in the system card explain how this was accomplished, and it is a must-read for anyone involved in model security.

- Complete visibility: 100% traffic monitoring is no longer aspirational. OpenAI’s experience shows why it is necessary, given how easily red teamers could slip attacks in anywhere.

- Rapid response: Hours, not weeks, to patch discovered vulnerabilities.

- Enforced boundaries: Some operations (such as memory access during sensitive tasks) must be disabled until proven safe.

UK AISI’s testing proved particularly instructive. All seven universal attacks it identified were patched before launch, but its privileged access to internal systems revealed vulnerabilities that determined adversaries could eventually discover.

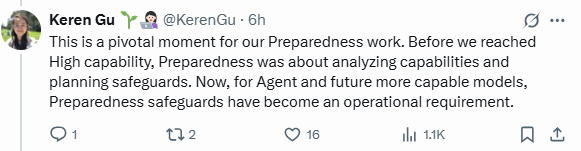

“This is a pivotal moment for our Preparedness work,” Gu wrote on X. “Before we reached High capability, Preparedness was about analyzing capabilities and planning safeguards. Now, for Agent and future more capable models, Preparedness safeguards have become an operational requirement.”

Red teams are core to building safer, more secure AI models

The seven universal exploits discovered by researchers and the 110 attacks from OpenAI’s red team network became the crucible that forged ChatGPT Agent.

By revealing exactly how AI agents could be weaponized, red teams forced the creation of the first AI system where security isn’t just a feature. It’s the foundation.

ChatGPT Agent’s results prove red teaming’s effectiveness: blocking 95% of visual browser attacks, catching 78% of data exfiltration attempts and monitoring every single interaction.

In the accelerating AI arms race, the companies that survive and thrive will be those that see their red teams as core architects of the platform, pushing it toward safety and security.

2025-07-18 22:13:00