Multimodal Foundation Models Fall Short on Physical Reasoning: PHYX Benchmark Highlights Key Limitations in Visual and Symbolic Integration

State-of-the-art models have shown human-competitive accuracy on AIME, GPQA, MATH-500, and OlympiadBench, solving Olympiad-level problems. Recent multimodal foundation models have advanced the benchmarks for disciplinary knowledge and mathematical reasoning. However, these evaluations miss a crucial aspect of machine intelligence: physical reasoning, which requires integrating disciplinary knowledge, symbolic operations, and real-world constraints. Physical problem-solving differs fundamentally from pure mathematical reasoning because it requires models to decode implicit conditions in the questions. For example, a model must interpret "smooth surface" as a zero friction coefficient, and it must maintain physical consistency across reasoning chains, because physical laws stay the same regardless of the reasoning path taken.
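To make the "smooth surface" example concrete, here is a standard textbook case chosen for illustration (it is not taken from PHYX): a block sliding down an incline of angle $\theta$, with normal force $mg\cos\theta$ and kinetic friction coefficient $\mu$. Reading "smooth" as $\mu = 0$ is what collapses the general result into a solvable one.

```latex
\begin{aligned}
m a &= m g \sin\theta - \mu\, m g \cos\theta \\
a &= g\,(\sin\theta - \mu \cos\theta) \\
\mu = 0 \;\Rightarrow\; a &= g \sin\theta
\end{aligned}
```

Because the problem text never states $\mu$ numerically, a model that misses the implicit condition has no way to reach the final answer, even if its algebra is correct.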

MLLMs show strong visual understanding by integrating visual and textual data across a range of tasks, which has motivated exploration of their reasoning abilities. However, it remains uncertain whether these models possess genuine advanced reasoning capabilities for visual tasks, particularly in physical domains closer to real-world scenarios. Several LLM benchmarks have emerged to evaluate reasoning abilities, with PHYBench being the most relevant to physics. Scientific MLLM benchmarks such as PhysReason and EMMA contain multimodal physics problems with figures; however, they include only small physics subsets, which are inadequate for evaluating how well MLLMs reason about and solve advanced physics problems.

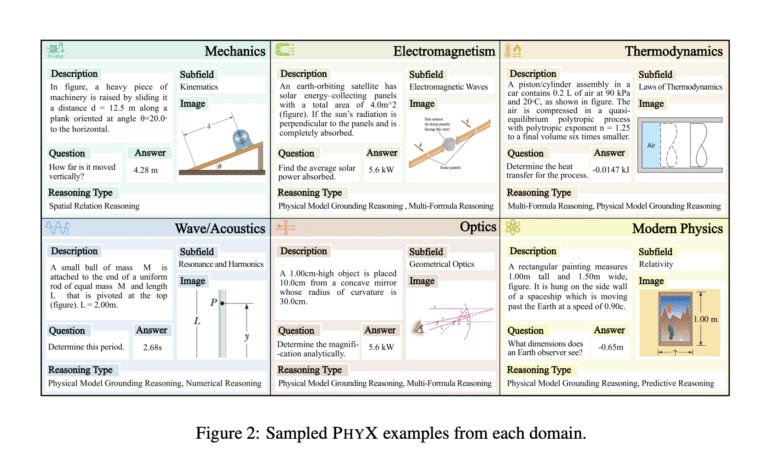

Researchers from the University of Hong Kong, the University of Michigan, the University of Toronto, the University of Waterloo, and the Ohio State University have introduced PHYX, a new benchmark for evaluating the physical reasoning capabilities of foundation models. It comprises 3,000 visually grounded physics questions, carefully curated across six distinct physics domains: mechanics, electromagnetism, thermodynamics, waves/acoustics, optics, and modern physics. It evaluates physics-based reasoning through multimodal problem-solving with three core innovations: (a) 3,000 newly collected questions with realistic physical scenarios that require integrated visual analysis and causal reasoning, (b) an expert-validated data design covering six fundamental physics domains, and (c) a rigorous three-step evaluation protocol.

The researchers designed a four-stage data collection process to ensure high-quality data. The process begins with an in-depth survey of core physics disciplines to determine coverage across domains and subdomains, followed by recruiting STEM graduate students as expert annotators. The annotators comply with copyright restrictions and avoid data contamination by selecting questions whose answers are not readily available. Quality control then applies a three-stage cleaning process: duplicate detection through lexical overlap analysis with manual review by physics PhD students, followed by filtering out the shortest 10% of questions based on text length. This yields 3,000 high-quality questions from an initial set of 3,300.
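The two automated cleaning steps described above can be sketched roughly as follows. This is a minimal illustration, not the PHYX pipeline: Jaccard similarity over word sets stands in for the unspecified "lexical overlap" measure, and the 0.8 threshold and all function names are hypothetical. Flagged pairs are returned for manual review rather than deleted, mirroring the human check by physics PhD students.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercased alphanumeric word tokens; punctuation is ignored.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    # Lexical overlap: |A ∩ B| / |A ∪ B| (assumed measure, not from the paper).
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def flag_near_duplicates(
    questions: list[str], threshold: float = 0.8  # hypothetical threshold
) -> list[tuple[int, int, float]]:
    """Return (i, j, score) pairs whose overlap meets the threshold.

    Nothing is removed here; flagged pairs are meant for manual review.
    """
    tokens = [tokenize(q) for q in questions]
    pairs = []
    for i in range(len(questions)):
        for j in range(i + 1, len(questions)):
            score = jaccard(tokens[i], tokens[j])
            if score >= threshold:
                pairs.append((i, j, score))
    return pairs


def drop_shortest(questions: list[str], fraction: float = 0.10) -> list[str]:
    """Remove the given fraction of questions with the shortest text, preserving order."""
    n_drop = int(len(questions) * fraction)
    by_length = sorted(range(len(questions)), key=lambda i: len(questions[i]))
    dropped = set(by_length[:n_drop])
    return [q for i, q in enumerate(questions) if i not in dropped]


if __name__ == "__main__":
    pool = [
        "A block slides down a smooth incline of angle 30 degrees. Find its acceleration.",
        "A block slides down a smooth incline of angle 30 degrees; find its acceleration.",
        "A convex lens has focal length 10 cm. Where is the image of an object at 15 cm?",
        "Find the current.",
    ]
    print(flag_near_duplicates(pool))     # [(0, 1, 1.0)]
    print(len(drop_shortest(pool, 0.25)))  # 3
```

The pairwise loop is quadratic, which is still manageable for a pool of a few thousand questions; a larger corpus would typically call for MinHash or another locality-sensitive hashing scheme instead.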

PHYX poses significant challenges for current models: even the worst-performing human experts achieve 75.6% accuracy, outperforming every evaluated model and revealing a gap between human expertise and current model capabilities. The benchmark shows that multiple-choice formats narrow performance gaps by letting weaker models rely on surface-level cues, whereas open-ended questions demand genuine reasoning and precise answer generation. Comparing GPT-4o's performance on PHYX with its previously reported results on MathVista and MATH-V (both 63.8%) shows lower accuracy on physical reasoning tasks. This underscores that physical reasoning requires deeper integration of abstract concepts and real-world knowledge, posing greater challenges than purely mathematical contexts.

In conclusion, the researchers introduced PHYX, the first large-scale benchmark for evaluating physical reasoning in multimodal, visually grounded scenarios. Rigorous evaluation reveals that state-of-the-art models show limitations in physical reasoning, relying predominantly on memorized knowledge, mathematical formulas, and superficial visual patterns rather than a genuine understanding of physical principles. The benchmark focuses exclusively on English-language prompts and annotations, which limits assessment of multilingual reasoning abilities. Also, although the images depict physically realistic scenarios, they are often schematic or textbook-style rather than real-world photographs, so they may not fully capture the complexity of perception in natural environments.

Check out the paper, code, and project page. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter, and don't forget to join our 95K+ ML SubReddit and subscribe to our newsletter.

Sajjad Ansari is a final-year undergraduate at IIT Kharagpur. As a technology enthusiast, he delves into the practical applications of AI, with a focus on understanding the impact of AI technologies and their real-world implications. He aims to explain complex AI concepts in a clear and accessible way.


2025-05-31 02:41:00