Safeguarding Agentic AI Systems: NVIDIA’s Open-Source Safety Recipe

With the evolution of the LLMS models from simple text generators to Agent systems The mind and mind can be planned and act independently – There is a significant increase in both its capabilities and the risks associated with it. The institutions quickly adopt AIGE Ageric for Automation, but this trend exposes organizations to new challenges: Obtaining goal, immediate injection, unintended behaviors, data leakage, and reduce human control. Treating these concerns, NVIDIA has released an open source software and post -training safety recipe designed to protect AI Agementic systems throughout its life cycle.

The need for safety in the artificial intelligence customer

Agentic llms takes advantage of advanced thinking and using tools, allowing them to work with a high degree of autonomy. However, this autonomy can lead to:

- Moderation failure in the content (For example, generating harmful, toxic or biased outputs)

- Security gaps (Rapid injection, prison attempts)

- The risks of compliance and confidence (Failure to agree with the Foundation’s policies or organizational standards)

Traditional handrails and content filters often decrease with the development of the attackers and techniques quickly. Institutions require methodological strategies at the life cycle level to align the open models with internal policies and external regulations.

Safety recipe in NVIDIA: Overview and Architecture Engineering

The AI Agenic Safety Recipe provides NVIDIA A A comprehensive frame from the end to the end To evaluate LLMS, suit and protect it before, during and after publishing:

- evaluation: Before publishing, the recipe allows the test in exchange for institutions policies, safety requirements and trust thresholds using open data groups and standards.

- Align after trainingUsing reinforcement learning (RL), controlling control (SFT), and a quality data set, models are aligned with safety standards.

- Continuous protectionAfter publishing, NVIDIA Nemo Decredrails, actual time monitoring, persistent and programming services, prohibiting actively unsafe outputs, defending fast injections and prison attempts.

Basic ingredients

| platform | Technology/tools | very |

|---|---|---|

| Pre -publication evaluation | Nemotron content safety collection, Wildguardmix, GARAK SCANNER | Safety test/security |

| Align after training | RL, SFT, open licensed data | Salama/alignment of its seizure |

| Publishing and inference | Nemo Grasslails, NIM Microservices (content safety, control control, discovery jailbreak) | Preventing unsafe behavior |

| Monitoring and comments | Garak, real -time analyzes | Discovering/resisting new attacks |

Open data and standards sets

- Nemotron v2: Nemotron v2: Used to evaluate pre -training and post -training, this data set screens for a wide range of harmful behaviors.

- Wildguardmix Data collection: It aims to moderate content through mysterious and infection claims.

- AEGIS content safety collection: More than 35,000 samples explained, allowing the development of a candidate and the exact classification of the LLM safety tasks.

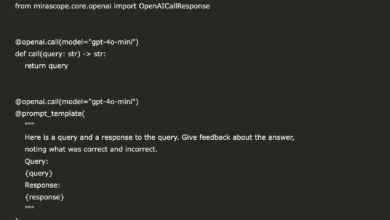

The post -training process

Nafidia recipe is distributed after training for safety Open source Jobter notebook Or as a cloud unit of launch, guarantee transparency and broad access. The workflow usually includes:

- Initial model evaluation: The basic line test on safety/security with open standards.

- Politics safety training: Old response through the targeted model/alignment, controlling supervision, and reinforcement learning with open data groups.

- revaluation: Restart safety/security standards after training to confirm improvements.

- Publishing: Trusted models are deployed with live monitoring and microscopic services for handrails (moderate content, subject control/field, discovery of jailbreak).

Quantitative

- Content safetyIt improved from 88 % to 94 % after applying the post-safety recipe for NVIDIA- gain 6 %, with no concrete loss of accuracy.

- Product security: Improving flexibility against aggressive claims (imprisonment and etc.) from 56 % to 63 %, by 7 %.

The integration of the cooperative and environmental system

NVIDIA approach exceeds internal tools –Partnerships With CISCO AI Defeense, Crowdstrike and Trend Micro, active fence), it allows the integration of continuous safety signals and accident -based improvements through the life intelligence cycle.

How to start

- Open source access: Full safety evaluation and post -training recipe (tools, data groups, evidence) are available to the audience for download and a metaphysical eyeliner.

- The alignment of allocated policy: Institutions can identify customized business policies, risk thresholds, and organizational requirements – using the recipe to align the models accordingly.

- Hardening: Evaluation, post -training, re -evaluation, and publishing them with new risks, ensuring the merit of the typical continuous confidence.

conclusion

It represents the safety recipe at NVIDIA for Agentic Llms The first industry, openly available, a systematic approach For LLMS stiffness against modern artificial intelligence risks. By activating the strong, transparent and extensive safety protocols, institutions can adopt Amnesty International’s confidence and budget innovation with security and compliance.

verify Nvidia Ai safety recipe and technical details. All the credit for this research goes to researchers in this project. Also, do not hesitate to follow us twitter And do not forget to join 100K+ ML Subreddit And subscribe to Our newsletter.

Common questions: Can Marktechpost help me promote my artificial intelligence product and put it in front of Ai Devs and data engineers?

Answer: Yes, Marktechpost can help enhance your product artificial intelligence by publishing articles, status studies or product features, and targeting a global audience for artificial intelligence developers and data engineers. The MTP platform is widely read by technical professionals, which increases the vision of the product and location within the artificial intelligence community. [SET UP A CALL]

Asif Razzaq is the CEO of Marktechpost Media Inc .. As a pioneer and vision engineer, ASIF is committed to harnessing the potential of artificial intelligence for social goodness. His last endeavor is to launch the artificial intelligence platform, Marktechpost, which highlights its in -depth coverage of machine learning and deep learning news, which is technically sound and can be easily understood by a wide audience. The platform is proud of more than 2 million monthly views, which shows its popularity among the masses.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-07-29 05:58:00