A Coding Implementation of an Intelligent AI Assistant with Jina Search, LangChain, and Gemini for Real-Time Information Retrieval

In this tutorial, we demonstrate how to build an intelligent AI assistant by integrating LangChain, Gemini 2.0 Flash, and the Jina Search tool. By combining the capabilities of a powerful large language model (LLM) with an external search API, we create an assistant that can provide up-to-date information with citations. This step-by-step tutorial walks through setting up API keys, installing the necessary libraries, binding tools to the Gemini model, and building a custom LangChain chain that dynamically calls external tools when the model requires fresh or specific information. By the end of this tutorial, we will have a fully functional, interactive AI assistant that can respond to user queries with accurate, current, and well-sourced answers.

%pip install --quiet -U "langchain-community>=0.2.16" langchain langchain-google-genai

We install the Python packages required for this project: the LangChain framework for building AI applications, the LangChain Community tools (version 0.2.16 or higher), and LangChain's integration with Google Gemini models. These packages enable seamless use of Gemini models and external tools within LangChain pipelines.
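If you want to confirm that the installed `langchain-community` satisfies the `>=0.2.16` pin before continuing, a small check like the following may help. This is a minimal sketch: `parse_simple_version`, `meets_minimum`, and `installed_version` are hypothetical helpers, and the naive parser assumes purely numeric `X.Y.Z` versions (pre-release or post-release tags are not handled; the `packaging` library is the more robust choice).

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple


def parse_simple_version(text: str) -> Tuple[int, ...]:
    """Turn a plain numeric version like '0.2.16' into (0, 2, 16).

    Hypothetical helper: assumes every dot-separated part is an integer.
    """
    return tuple(int(part) for part in text.split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True if `installed` >= `minimum`, compared numerically."""
    # Tuples compare element by element, so (0, 2, 9) < (0, 2, 16) as intended,
    # whereas the plain strings would compare "0.2.9" > "0.2.16".
    return parse_simple_version(installed) >= parse_simple_version(minimum)


def installed_version(package: str) -> Optional[str]:
    """Return the installed distribution's version string, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None
```

For example, `found = installed_version("langchain-community")` followed by `meets_minimum(found, "0.2.16")` (when `found` is not None) tells you whether the upgrade took effect in the current kernel.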

import getpass
import os
import json
from typing import Dict, Any

We import the essential modules for the project. getpass lets us enter API keys securely without displaying them on screen, while os helps manage environment variables and file paths. json is used for working with JSON data structures, and typing provides type hints (such as Dict and Any) for dictionaries and function arguments, which improves code readability and maintainability.

if not os.environ.get("JINA_API_KEY"):
    os.environ["JINA_API_KEY"] = getpass.getpass("Enter your Jina API key: ")

if not os.environ.get("GOOGLE_API_KEY"):
    os.environ["GOOGLE_API_KEY"] = getpass.getpass("Enter your Google/Gemini API key: ")

We ensure that the API keys for Jina and Gemini are set as environment variables. If a key is not already defined in the environment, the script prompts the user to enter it securely via the getpass module, which keeps the key hidden from the screen. This approach allows seamless access to both services without hard-coding sensitive information in the code.
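The two `if` blocks above follow the same pattern, so they can be folded into one helper. The sketch below is a hypothetical refactoring (`ensure_env_var` and its `reader`/`env` parameters are illustrative names, not part of the tutorial); injecting the reader and the environment mapping makes the "prompt only when missing" logic testable without a terminal.

```python
import getpass
import os
from typing import Callable, MutableMapping, Optional


def ensure_env_var(
    name: str,
    prompt: str,
    reader: Callable[[str], str] = getpass.getpass,
    env: Optional[MutableMapping[str, str]] = None,
) -> str:
    """Return the value of `name`, prompting (without echo) only if it is unset or empty.

    Hypothetical helper: by default it reads with getpass and writes to os.environ.
    """
    target = os.environ if env is None else env
    if not target.get(name):
        # Mirrors the tutorial's check: an empty string also counts as missing.
        target[name] = reader(prompt)
    return target[name]


# Equivalent to the tutorial's two if-blocks:
# ensure_env_var("JINA_API_KEY", "Enter your Jina API key: ")
# ensure_env_var("GOOGLE_API_KEY", "Enter your Google/Gemini API key: ")
```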

from langchain_community.tools import JinaSearch
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnableConfig, chain
from langchain_core.messages import HumanMessage, AIMessage, ToolMessage

print("🔧 Setting up tools and model...")

We import the key modules and classes from LangChain. The JinaSearch tool provides web search, ChatGoogleGenerativeAI gives access to Google's Gemini models, and LangChain Core supplies the essential building blocks: ChatPromptTemplate, RunnableConfig, the chain decorator, and the message classes (HumanMessage, AIMessage, and ToolMessage). Together, these components let Gemini call external tools to retrieve dynamic information. The print statement confirms that the setup process has started.

search_tool = JinaSearch()
print(f"✅ Jina Search tool initialized: {search_tool.name}")

print("\n🔍 Testing Jina Search directly:")
direct_search_result = search_tool.invoke({"query": "what is langgraph"})
print(f"Direct search result preview: {direct_search_result[:200]}...")

We create the Jina Search tool by instantiating JinaSearch() and confirm that it is ready for use. The tool handles web search queries within the LangChain ecosystem. The script then runs a direct test query, "what is langgraph", using the invoke method and prints a preview of the result. This step verifies that the search tool works correctly before it is integrated into the larger AI assistant workflow.
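Since the system prompt asks the model to cite its sources, it can be useful to pull titles and links out of a raw search result yourself, for example to print a source list under each answer. The sketch below assumes the tool returns a JSON-encoded list of result objects carrying `title` and `link` keys; that format and those field names are assumptions, so inspect a real `direct_search_result` in your installed version before relying on them. `extract_sources` is a hypothetical helper that returns an empty list rather than raising when the format does not match.

```python
import json
from typing import Dict, List


def extract_sources(raw_result: str, limit: int = 5) -> List[Dict[str, str]]:
    """Pull {"title", "link"} pairs out of a JSON-encoded search result string.

    Assumption: `raw_result` is a JSON list of objects that may carry
    "title" and "link" keys. Anything else yields an empty list instead of
    raising, so an unexpected format degrades gracefully.
    """
    try:
        items = json.loads(raw_result)
    except (json.JSONDecodeError, TypeError):
        return []
    if not isinstance(items, list):
        return []
    sources: List[Dict[str, str]] = []
    for item in items:
        # Skip entries without a link; they cannot serve as a citation.
        if isinstance(item, dict) and item.get("link"):
            sources.append({"title": str(item.get("title", "")), "link": str(item["link"])})
        if len(sources) >= limit:
            break
    return sources
```

If the assumption holds, `extract_sources(direct_search_result)` would yield a short citation list to print alongside the preview.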

gemini_model = ChatGoogleGenerativeAI(
    model="gemini-2.0-flash",
    temperature=0.1,
    convert_system_message_to_human=True
)

print("✅ Gemini model initialized")

We initialize Gemini 2.0 Flash using the ChatGoogleGenerativeAI class from LangChain. The model is set to a low temperature (0.1) for more deterministic responses, and the convert_system_message_to_human=True parameter ensures that system prompts are correctly handled as human messages. The final print statement confirms that the Gemini model is ready for use.
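To see why a low temperature makes responses more deterministic, here is a toy illustration of temperature-scaled softmax over made-up next-token scores. It is a simplified model of sampling, not Gemini's actual decoding pipeline (which may apply further filtering such as top-k or top-p): dividing the scores by a small temperature sharpens the distribution toward the highest-scoring token.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities, scaled by a positive temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate tokens.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{p:.3f}" for p in probs))
```

At T=1.0 the lower-scoring tokens keep a meaningful share of the probability, while at T=0.1 nearly all of it goes to the top token, so repeated runs tend to produce the same output.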

detailed_prompt = ChatPromptTemplate.from_messages([
    ("system", """You are an intelligent assistant with access to web search capabilities.
When users ask questions, you can use the Jina search tool to find current information.

Instructions:
1. If the question requires recent or specific information, use the search tool
2. Provide comprehensive answers based on the search results
3. Always cite your sources when using search results
4. Be helpful and informative in your responses"""),
    ("human", "{user_input}"),
    ("placeholder", "{messages}"),
])

We define a prompt template using ChatPromptTemplate.from_messages() that guides the AI's behavior. It includes a system message defining the assistant's role, a human message for the user's query, and a placeholder for the tool messages generated during tool calls. This structured prompt helps the AI provide helpful, informative, and well-sourced responses while integrating search results seamlessly into the conversation.
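The `("placeholder", "{messages}")` entry is what lets the same prompt serve both passes of the chain: in LangChain this tuple form is shorthand for an optional `MessagesPlaceholder`, so on the first call (with only `user_input`) it expands to nothing, and on the second call it expands to the AI tool-call message plus the tool results. The framework-free sketch below mimics that expansion with plain `(role, content)` tuples; `render_prompt` is a hypothetical helper, not LangChain's implementation.

```python
from typing import List, Optional, Sequence, Tuple

Message = Tuple[str, str]  # (role, content)

SYSTEM_PROMPT = "You are an intelligent assistant with access to web search capabilities."


def render_prompt(user_input: str, messages: Optional[Sequence[Message]] = None) -> List[Message]:
    """Hypothetical stand-in for the prompt: system, human, then optional extra messages."""
    rendered: List[Message] = [("system", SYSTEM_PROMPT), ("human", user_input)]
    # Like an optional placeholder: a missing value contributes no messages.
    rendered.extend(messages or [])
    return rendered


first_pass = render_prompt("what is langgraph")
second_pass = render_prompt(
    "what is langgraph",
    messages=[("ai", "<tool call: search>"), ("tool", "<search results>")],
)
print(len(first_pass), len(second_pass))  # prints "2 4"
```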

gemini_with_tools = gemini_model.bind_tools([search_tool])

print("✅ Tools bound to Gemini model")

main_chain = detailed_prompt | gemini_with_tools

def format_tool_result(tool_call: Dict[str, Any], tool_result: str) -> str:

"""Format tool results for better readability"""

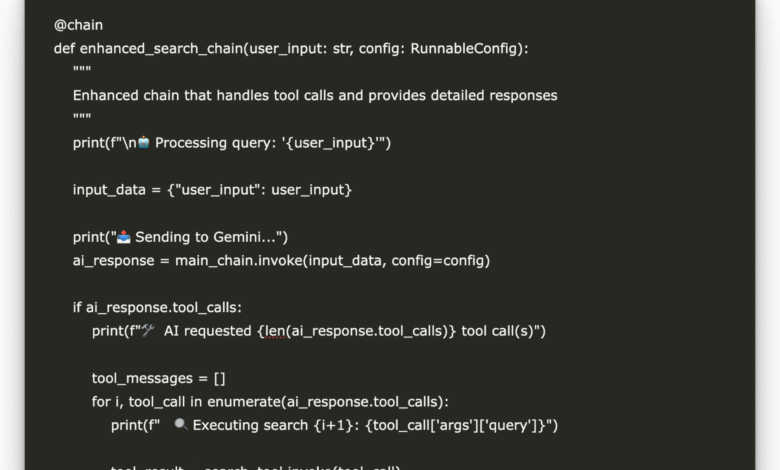

return f"Search Results for '{tool_call['args']['query']}':\n{tool_result[:800]}..."We connect the JINA search tool to the Gemini model using Bind_tools (), allowing the model to call the search tool when needed. Main_chain combines the structured orientated mold and the improved Gemini model, which creates a smooth workflow to deal with user inputs and dynamic tool calls. In addition, Format_Tool_RESULT FUNCTION Search Results for a clear and readable display screen, ensuring that users can easily understand search inquiries.
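It can help to see what `format_tool_result` receives. In recent langchain-core versions, each entry in an AI message's `tool_calls` is a dict with `name`, `args`, `id`, and `type` keys; the values below (tool name, id, result text) are made up for illustration. The function is a hypothetical variant, not the tutorial's helper: it tolerates a missing query and appends `...` only when the text was actually truncated, whereas the original always appends it.

```python
from typing import Any, Dict

# Illustrative tool call; the name, id, and query values are made up.
example_tool_call: Dict[str, Any] = {
    "name": "search",  # hypothetical; the real tool reports its own name
    "args": {"query": "what is langgraph"},
    "id": "call_123",
    "type": "tool_call",
}


def format_tool_result_truncated(tool_call: Dict[str, Any], tool_result: str, max_chars: int = 800) -> str:
    """Variant of format_tool_result that adds '...' only when text was cut."""
    query = tool_call.get("args", {}).get("query", "<no query>")
    body = tool_result if len(tool_result) <= max_chars else tool_result[:max_chars] + "..."
    return f"Search Results for '{query}':\n{body}"


print(format_tool_result_truncated(example_tool_call, "short result"))
```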

@chain

def enhanced_search_chain(user_input: str, config: RunnableConfig):

"""

Enhanced chain that handles tool calls and provides detailed responses

"""

print(f"\n🤖 Processing query: '{user_input}'")

input_data = {"user_input": user_input}

print("📤 Sending to Gemini...")

ai_response = main_chain.invoke(input_data, config=config)

if ai_response.tool_calls:

print(f"🛠️ AI requested {len(ai_response.tool_calls)} tool call(s)")

tool_messages = []

for i, tool_call in enumerate(ai_response.tool_calls):

print(f" 🔍 Executing search {i+1}: {tool_call['args']['query']}")

tool_result = search_tool.invoke(tool_call)

tool_msg = ToolMessage(

content=tool_result,

tool_call_id=tool_call['id']

)

tool_messages.append(tool_msg)

print("📥 Getting final response with search results...")

final_input = {

**input_data,

"messages": [ai_response] + tool_messages

}

final_response = main_chain.invoke(final_input, config=config)

return final_response

else:

print("ℹ️ No tool calls needed")

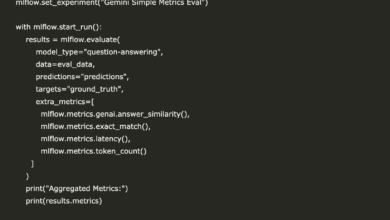

return ai_responseWe define Endanced_Search_Schain using Decorator Chain from Langchain, allowing him to handle user ketchups using dynamic tools. It takes the insertion of the user and a composition, and the insertion is passed through the main chain (which includes the claim and the ezniture with tools), and check whether artificial intelligence suggest any tool calls (for example, search on the Internet via JINA). In the event of tool calls, they carry out searches, create messages, and re -implement the chain with the results of the tool for the fertilized final response to the context. If calls are not made to the tool, they return directly to artificial intelligence.
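The control flow of enhanced_search_chain (call the model, run any requested tools, then call the model again with the results) does not depend on LangChain itself. Below is a framework-free sketch with a scripted fake model and fake search function standing in for Gemini and Jina; `Reply`, `FakeModel`, `fake_search`, `run_with_tools`, and the dict-based messages are all hypothetical, chosen so the loop can run offline.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Reply:
    content: str
    tool_calls: List[Dict[str, Any]] = field(default_factory=list)


class FakeModel:
    """Hypothetical model: requests a search first, then answers from tool output."""

    def invoke(self, messages: List[Dict[str, Any]]) -> Reply:
        tool_outputs = [m["content"] for m in messages if m["role"] == "tool"]
        if not tool_outputs:
            query = messages[-1]["content"]
            return Reply("", [{"name": "search", "args": {"query": query}, "id": "call_1"}])
        return Reply("Answer based on: " + " | ".join(tool_outputs))


def fake_search(query: str) -> str:
    return f"results for {query!r}"


def run_with_tools(model: FakeModel, search: Callable[[str], str], user_input: str) -> Reply:
    messages: List[Dict[str, Any]] = [{"role": "human", "content": user_input}]
    reply = model.invoke(messages)
    if not reply.tool_calls:
        return reply  # no tools needed: return the first answer directly
    messages.append({"role": "ai", "content": reply.content, "tool_calls": reply.tool_calls})
    for call in reply.tool_calls:
        # Each tool result is linked back to its request via tool_call_id.
        result = search(call["args"]["query"])
        messages.append({"role": "tool", "content": result, "tool_call_id": call["id"]})
    return model.invoke(messages)
```

Like the tutorial's chain, this handles a single round of tool calls; if the second reply asked for more tools, it would be returned as-is rather than looping.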

def test_search_chain():
    """Test the search chain with various queries"""
    test_queries = [
        "what is langgraph",
        "latest developments in AI for 2024",
        "how does langchain work with different LLMs"
    ]

    print("\n" + "="*60)
    print("🧪 TESTING ENHANCED SEARCH CHAIN")
    print("="*60)

    for i, query in enumerate(test_queries, 1):
        print(f"\n📝 Test {i}: {query}")
        print("-" * 50)

        try:
            response = enhanced_search_chain.invoke(query)
            print(f"✅ Response: {response.content[:300]}...")

            if hasattr(response, 'tool_calls') and response.tool_calls:
                print(f"🛠️ Used {len(response.tool_calls)} tool call(s)")

        except Exception as e:
            print(f"❌ Error: {str(e)}")

        print("-" * 50)

The test_search_chain() function validates the entire AI assistant setup by running a series of test queries through enhanced_search_chain. It defines a list of diverse test prompts covering tools, AI topics, and LangChain integration, then prints a preview of each response, along with the number of tool calls if the returned message still contains any. Each query runs inside its own try/except block, so a single failure is reported without stopping the remaining tests. This helps verify that the AI can effectively perform web searches, process responses, and return useful information to users.
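Because test_search_chain only prints, its outcome is hard to check programmatically. One option, sketched here as an assumption rather than the tutorial's approach, is to collect a pass/fail record per query while keeping the same per-query failure isolation. `SmokeResult` and `run_smoke_tests` are hypothetical names; the helper accepts any callable and uses the response's `content` attribute when present, falling back to the response itself.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Optional


@dataclass
class SmokeResult:
    query: str
    ok: bool
    preview: str = ""
    error: Optional[str] = None


def run_smoke_tests(
    run_query: Callable[[str], Any], queries: Iterable[str], preview_chars: int = 300
) -> List[SmokeResult]:
    """Run each query, recording a non-empty answer as a pass and any exception as a failure."""
    results: List[SmokeResult] = []
    for query in queries:
        try:
            response = run_query(query)
            text = str(getattr(response, "content", response))
            results.append(SmokeResult(query, ok=bool(text.strip()), preview=text[:preview_chars]))
        except Exception as exc:  # keep going; record the failure instead
            results.append(SmokeResult(query, ok=False, error=str(exc)))
    return results
```

With the tutorial's chain this might be called as `run_smoke_tests(enhanced_search_chain.invoke, ["what is langgraph"])`, after which `all(r.ok for r in results)` gives a single pass/fail signal.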

if __name__ == "__main__":
    print("\n🚀 Starting enhanced LangChain + Gemini + Jina Search demo...")
    test_search_chain()

    print("\n" + "="*60)
    print("💬 INTERACTIVE MODE - Ask me anything! (type 'quit' to exit)")
    print("="*60)

    while True:
        user_query = input("\n🗣️ Your question: ").strip()
        if user_query.lower() in ['quit', 'exit', 'bye']:
            print("👋 Goodbye!")
            break

        if user_query:
            try:
                response = enhanced_search_chain.invoke(user_query)
                print(f"\n🤖 Response:\n{response.content}")
            except Exception as e:
                print(f"❌ Error: {str(e)}")

Finally, we run the AI assistant as a script when the file is executed directly. It first calls test_search_chain() to validate the system with predefined queries, ensuring the setup works correctly. It then enters an interactive mode in which users can type their own questions and receive AI-generated answers, enriched with dynamic search results when needed. The loop continues until the user types "quit", "exit", or "bye", providing an intuitive, hands-on way to interact with the AI system.
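The interactive loop is also easier to test if input and output are injected rather than hard-wired to input() and print(). The following is a hypothetical refactoring (`chat_loop`, `prompt_lines`, and their parameters are illustrative names) that keeps the same exit words and skips blank lines. It also stops cleanly when input runs out, whereas the original loop calls input() outside its try block, so end-of-input (Ctrl-D) raises EOFError.

```python
from typing import Callable, Iterable, Iterator

EXIT_WORDS = {"quit", "exit", "bye"}


def chat_loop(lines: Iterable[str], answer: Callable[[str], str], emit: Callable[[str], None] = print) -> int:
    """Answer each non-empty line until an exit word; return how many were answered."""
    answered = 0
    for raw in lines:
        query = raw.strip()
        if query.lower() in EXIT_WORDS:
            emit("👋 Goodbye!")
            break
        if not query:
            continue  # ignore blank input, as the original loop does
        try:
            emit(f"🤖 Response:\n{answer(query)}")
            answered += 1
        except Exception as exc:
            emit(f"❌ Error: {exc}")
    return answered


def prompt_lines(prompt: str = "\n🗣️ Your question: ") -> Iterator[str]:
    """Yield lines typed by the user; stop quietly on end-of-input (Ctrl-D)."""
    while True:
        try:
            yield input(prompt)
        except EOFError:
            return
```

Wired to the tutorial's chain, this might look like `chat_loop(prompt_lines(), lambda q: enhanced_search_chain.invoke(q).content)`.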

In conclusion, we have successfully built an AI assistant that leverages LangChain's modular framework, the generative capabilities of Gemini 2.0 Flash, and the real-time web search functionality of Jina Search. This hybrid approach demonstrates how AI models can extend their knowledge beyond static training data, providing users with timely and relevant information from reliable sources. You can extend this project further by integrating additional tools, customizing prompts, or deploying the assistant as an API or web app for broader use. This foundation opens up many possibilities for building powerful, context-aware intelligent systems.

Check out the Notebook on GitHub. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.


2025-06-01 19:18:00