Small Language Models Are the New Rage, Researchers Say

The original version of this story appeared in Quanta Magazine.

Large language models work well because they are so large. The latest models from OpenAI, Meta, and DeepSeek use hundreds of billions of “parameters,” the adjustable knobs that determine connections among data and get tweaked during the training process. With more parameters, the models are better able to identify patterns and connections, which in turn makes them more powerful and accurate.

But this power comes at a cost. Training a model with hundreds of billions of parameters takes huge computational resources. To train its Gemini 1.0 Ultra model, for example, Google reportedly spent $191 million. Large language models (LLMs) also require considerable computational power each time they answer a request, which makes them notorious energy hogs. A single query to ChatGPT consumes about 10 times as much energy as a single Google search, according to the Electric Power Research Institute.

In response, some researchers are now thinking small. IBM, Google, Microsoft, and OpenAI have all released small language models (SLMs) that use a few billion parameters, a fraction of what their LLM counterparts use.

Small models are not used as general-purpose tools like their larger cousins. But they can excel at specific, more narrowly defined tasks, such as summarizing conversations, answering patient questions as a health care chatbot, and gathering data in smart devices. “For a lot of tasks, an 8-billion-parameter model is actually very good,” said Zico Kolter, a computer scientist at Carnegie Mellon University. They can also run on a laptop or cell phone, instead of a huge data center. (There’s no consensus on the exact definition of “small,” but the new models all max out at around 10 billion parameters.)
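A back-of-the-envelope estimate shows why parameter count decides where a model can run. The sketch below is only an illustration under stated assumptions: each parameter is stored in 16 bits (2 bytes), only the weights are counted (runtime buffers are ignored), and the 400-billion figure is a hypothetical stand-in for “hundreds of billions.”

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Rough memory (GB, 10**9 bytes) needed only to store a model's weights.

    bytes_per_param=2 assumes 16-bit weights; activations and other runtime
    buffers are ignored, so real memory use is higher than this estimate.
    """
    return num_params * bytes_per_param / 1e9


# Hypothetical sizes: an 8-billion-parameter SLM vs. a 400-billion-parameter LLM.
for label, params in [("8B model", 8e9), ("400B model", 400e9)]:
    print(f"{label}: ~{weight_memory_gb(params):.0f} GB of weights at 16-bit precision")
```

Under these assumptions the 8-billion-parameter model needs about 16 GB, within reach of a well-equipped laptop, while the larger model needs around 800 GB, far more than any single consumer device offers. Storing weights at lower precision (a smaller `bytes_per_param`) shrinks both numbers proportionally.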

To optimize the training process for these small models, researchers use a few tricks. Large models often scrape raw training data from the internet, and this data can be disorganized, messy, and hard to process. But these large models can then generate a high-quality data set that can be used to train a small model. The approach, called knowledge distillation, gets the larger model to effectively pass on its training, like a teacher giving lessons to a student. “The reason [SLMs] get so good with such small models and such little data is that they use high-quality data instead of the messy stuff,” Kolter said.
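The teacher-student idea can be sketched in a few lines. The article describes a large model generating a clean data set; the toy below instead uses the closely related and widely used soft-target form of distillation, in which the student learns to match the teacher’s temperature-softened output probabilities. Everything here is hypothetical and far smaller than a real language model: `teacher_logits` is a fixed function standing in for a trained large model, the student is a 9-parameter linear classifier, and the temperature, learning rate, and epoch count are arbitrary choices.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax: higher temperatures give softer probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def teacher_logits(x):
    """Hypothetical stand-in for a large, already-trained model (3 output classes)."""
    x0, x1 = x
    return [3.0 * x0 - x1, 2.0 * x1 + 0.5 * x0 * x1, 0.5 - 2.0 * x0 - x1]


def student_logits(weights, bias, x):
    """Tiny student: a linear model with 3 x 2 weights + 3 biases = 9 parameters."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def distill_loss(weights, bias, inputs, temperature=2.0):
    """Average cross-entropy of the student's softened outputs against the teacher's."""
    total = 0.0
    for x in inputs:
        p = softmax(teacher_logits(x), temperature)
        q = softmax(student_logits(weights, bias, x), temperature)
        total -= sum(pi * math.log(qi) for pi, qi in zip(p, q))
    return total / len(inputs)


def distill(inputs, temperature=2.0, lr=0.1, epochs=200):
    """Train the student with plain SGD to match the teacher's soft targets."""
    weights = [[0.0, 0.0] for _ in range(3)]
    bias = [0.0, 0.0, 0.0]
    # The teacher labels each input once; these soft targets are the "lessons".
    targets = [softmax(teacher_logits(x), temperature) for x in inputs]
    for _ in range(epochs):
        for x, p in zip(inputs, targets):
            q = softmax(student_logits(weights, bias, x), temperature)
            for k in range(3):
                # d(cross-entropy)/d(student logit k) = (q_k - p_k) / T
                grad = (q[k] - p[k]) / temperature
                bias[k] -= lr * grad
                for j in range(2):
                    weights[k][j] -= lr * grad * x[j]
    return weights, bias


rng = random.Random(0)
inputs = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(50)]
zero_w, zero_b = [[0.0, 0.0] for _ in range(3)], [0.0, 0.0, 0.0]
print("loss before distillation:", round(distill_loss(zero_w, zero_b, inputs), 3))
w, b = distill(inputs)
print("loss after distillation: ", round(distill_loss(w, b, inputs), 3))
```

Softening both distributions with a temperature above 1 exposes how the teacher ranks the wrong answers, not just which answer it picks, which gives the student a richer signal per example than hard labels would.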

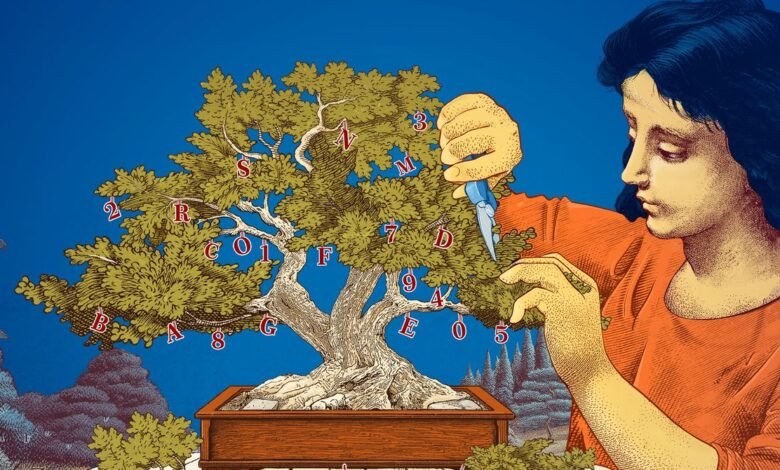

Researchers have also explored ways to create small models by starting with large ones and trimming them down. One method, known as pruning, entails removing unnecessary or inefficient parts of a neural network, the sprawling web of connected data points that underlies a large model.
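One of the simplest pruning criteria is to drop the weights with the smallest absolute values, on the assumption that they contribute least. The sketch below is a minimal illustration of that magnitude-based approach, not the method from any particular paper: a flat list of numbers stands in for one layer’s weights, and “removing” a weight means setting it to zero.

```python
def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute values."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    n_remove = int(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_remove = set(order[:n_remove])
    return [0.0 if i in to_remove else w for i, w in enumerate(weights)]


# Hypothetical weights for one small layer.
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.9, 0.03, 0.07]
print(magnitude_prune(layer, 0.5))
# → [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.4, -0.9, 0.0, 0.0]
```

In practice, a pruned network is usually trained a little further afterward so the remaining weights can compensate for the ones that were removed.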

Pruning was inspired by a real-life neural network, the human brain, which gains efficiency by snipping connections between synapses as a person ages. Today’s pruning approaches trace back to a 1989 paper in which a computer scientist who is now at Meta argued that up to 90 percent of the parameters in a trained neural network could be removed without sacrificing efficiency. He called the method “optimal brain damage.” Pruning can help researchers fine-tune a small language model for a particular task or environment.
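Rather than looking only at a weight’s size, “optimal brain damage” ranks each parameter by a second-derivative “saliency,” roughly one-half times the loss curvature for that parameter times the parameter squared, which estimates how much the loss would rise if the parameter were deleted. The sketch below applies that diagonal-curvature idea to a hypothetical linear model trained with mean squared error, chosen because its curvature has a simple closed form; it is an illustration under those assumptions, not the paper’s code, and it assumes the given weights are already trained.

```python
def obd_saliencies(weights, inputs):
    """Optimal-brain-damage-style saliency for a linear model y = w . x.

    Assumes the loss L = (1/2n) * sum((y - w . x)**2), whose Hessian diagonal is
    h_kk = (1/n) * sum(x_k**2) regardless of the targets y. The saliency
    s_k = 0.5 * h_kk * w_k**2 estimates the loss increase from zeroing weight k.
    """
    n = len(inputs)
    saliencies = []
    for k, w in enumerate(weights):
        h_kk = sum(x[k] ** 2 for x in inputs) / n
        saliencies.append(0.5 * h_kk * w ** 2)
    return saliencies


def prune_lowest_saliency(weights, inputs, count):
    """Zero out the `count` weights whose removal should hurt the loss least."""
    s = obd_saliencies(weights, inputs)
    order = sorted(range(len(weights)), key=lambda k: s[k])
    drop = set(order[:count])
    return [0.0 if k in drop else w for k, w in enumerate(weights)]


# Hypothetical trained weights; feature 1 has a much larger scale than the others.
weights = [0.5, 0.05, 0.4]
inputs = [(1.0, 20.0, 0.1), (-1.0, -20.0, 0.1), (1.0, 20.0, -0.1), (-1.0, -20.0, -0.1)]
print([round(s, 4) for s in obd_saliencies(weights, inputs)])  # → [0.125, 0.5, 0.0008]
print(prune_lowest_saliency(weights, inputs, 1))  # → [0.5, 0.05, 0.0]
```

The example shows why curvature matters: the smallest weight (0.05) multiplies a large-scale input, so deleting it would hurt the most, and the saliency criterion removes the 0.4 weight instead, whereas pure magnitude pruning would have removed the 0.05 weight.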

For researchers interested in how language models do the things they do, smaller models offer an inexpensive way to test new ideas. And because they have fewer parameters than large models, their reasoning may be more transparent. “If you want to make a new model, you need to try things,” said Leshem Choshen, a research scientist at the MIT-IBM Watson AI Lab. “Small models allow researchers to experiment with lower stakes.”

The big, expensive models, with their ever-increasing parameter counts, will remain useful for applications such as generalized chatbots, image generators, and drug discovery. But for many users, a small, targeted model will work just as well, while being easier for researchers to train and build. “These efficient models can save money, time, and compute,” Choshen said.

Original story reprinted with permission from Quanta Magazine, an editorially independent publication of the Simons Foundation whose mission is to enhance public understanding of science by covering research developments and trends in mathematics and the physical and life sciences.


2025-04-13 06:00:00