Reacher Season 3 Reminds Us That Jack Reacher’s Muscles Are His Second Greatest Strength

This post contains spoilers for episode 5 of “Reacher” season 3.

Jack Reacher (Alan Ritchson) is a simple man. He forges his own code of honor, beats up the bad guys, and stands up for what’s right. In many ways, the core appeal of Lee Child’s Reacher novel series is that his hero is impossibly strong and easily the biggest man in every room he walks into. Whether he is dangling thugs off buildings or smashing car doors in their faces, Reacher knows that strength can turn the tide of even the most rigged fights and shootouts.

Season 3 of “Reacher” forces Jack Reacher to rein in this dependable strength, as he must play the long game after infiltrating Zachary Beck’s (Anthony Michael Hall) shady inner circle. Moreover, Reacher is no longer the biggest man in the room, thanks to Paulie (Olivier Richters), Beck’s 7’2″ bodyguard who looms over everyone and has it out for our guy. While Reacher gets plenty of opportunities to break limbs and fire off rounds this season, he must rely on yet another innate strength: his unmatched skills as an undercover agent.

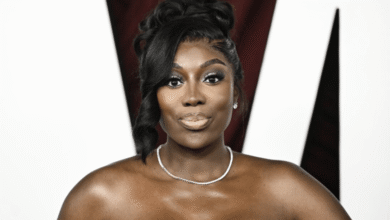

Child’s “Persuader” is the perfect choice to highlight this quality in Season 3, as it exclusively banks on Reacher’s ability to evade suspicion while sneaking in and out of the lion’s den. When he’s not using his teeny, tiny cellphone to keep DEA agent Susan Duffy (Sonya Cassidy) updated, Reacher has to surreptitiously gather intel, sneak around a heavily guarded mansion, and earn Beck’s trust while eliminating obstacles. There are moments where he messes up big time, coming really close to being outed as the mole, but a combination of his bonkers skills and sheer luck keeps the mission going. Episode 5 drives this point home, as we are now privy to the truth about Dominique Kohl’s (Mariah Robinson) horrible death, and the reason why Reacher must not fail before he can get to Francis Xavier Quinn (Brian Tee).

Things go south very early in this episode, with Duffy and fellow DEA agent Guillermo (Roberto Montesinos) being cornered in one of Quinn’s warehouses by his henchmen. Reacher is quick to arrive and save the day, proving yet again that he’s ever prepared to tackle any curveball thrown at him. So let’s take a closer look at his journey as an undercover agent so far.

Reacher’s improvise, adapt, overcome strategy works every time

The Beck stronghold is essentially a heavily surveilled mansion built on a coastline, with a tempestuous sea constantly beating against steep, slippery rocks. The only way in is the main gate guarded by Paulie, so it is probably safe to say that the mansion is like a fortress. The turbulent sea is another possible route to get in and out, of course, but only an insane person would swim across it at night, right? Well, Reacher is something of a madman, and there’s a method to his madness. In an earlier episode, he asks Duffy to meet him at a spot with a change of clothes, and he casually swims there in just his boxers, as one does. While such a feat is near impossible for the average person, Reacher makes it look so easy when he repeatedly uses this route to sneak around undetected.

In Episode 5, Reacher needs to act fast to stop the escaped bodyguard John Cooper (Ronnie Rowe), deducing that Cooper will take the main road straight to the mansion. His only way out is the front gate, so he uses the time-honored pretext of “let me go and see what’s up” to slip out, then works with Guillermo to flip the DEA agent’s car and asks him to lie beneath it. The plan is risky: it depends on Cooper stopping near the car and assumes (and assumptions kill!) that he will bend down to check who’s underneath. However, it works, giving our heroes a split-second window to shoot Cooper and avert a potential catastrophe.

Another instance of Reacher being a shrewd undercover agent is when he prevents Duffy from shooting the last of Quinn’s men and scoops up her badge, which she had lost during the warehouse shootout. This is a man who notices everything and goes over all possible outcomes during tense, real-time crises, staying one step ahead of smart DEA agents like Duffy. Even when he’s on the brink of being found out, Reacher improvises like an expert, lying through his teeth to sway the odds in his favor. While his exposure as a mole is inevitable, I’m sure someone as capable as Jack Reacher will complete his mission and emerge unscathed.

2025-03-06 18:00:00