Supercharged AI: Kove’s Software Outsources Memory for Speed

Modern society is increasingly data hungry, especially as the use of artificial intelligence continues to grow dramatically. As a result, ensuring that there is enough computer memory, and enough sustainable power to support that memory, has become a great concern.

Now, the software company Kove has figured out a way to pool computer memory and dynamically draw on external sources in a way that greatly enhances a computer's efficiency. Kove's system takes advantage of external pooled memory to produce results faster than can be achieved with local memory.

For one Kove customer, the approach reduced energy consumption by up to 54 percent. For another, it reduced the time needed to run a complex AI model, allowing a 60-day training run to be completed in just one day.

John Overton, Kove's CEO, has been working on this software solution for 15 years. He says that meeting high memory demand is one of the most urgent concerns facing the computer industry. “You hear about people running out of memory all the time,” he says, noting that machine learning algorithms require huge amounts of data.

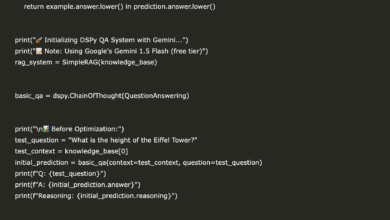

However, computers can only crunch data as quickly as their memory allows, and they will crash in the middle of a task if they do not have enough of it. Kove's software-defined memory (SDM) solution aims to alleviate this problem by tapping external memory sources on external servers.

How software-defined memory works

Overton notes that many computer scientists thought that using external memory sources, at least with the same efficiency as local memory, was impossible. Such a feat would defy the laws of physics.

The problem comes down to the fact that electrical signals can travel no faster than the speed of light. Therefore, if an external server is 150 meters from the main computer, there will be a delay of about 500 nanoseconds before a signal reaches the external server: approximately 3.3 nanoseconds of latency for every meter the data must travel. “People assume that this problem cannot be solved,” says Overton.
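The arithmetic behind those figures can be checked with a short back-of-the-envelope calculation. The sketch below is only an illustration of the physics, not anything from Kove's software; the function name `one_way_delay_ns` is made up for this example, and it assumes signals travel at the vacuum speed of light.

```python
# Back-of-the-envelope propagation delay; an illustration, not Kove's code.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the meter


def one_way_delay_ns(distance_m: float) -> float:
    """Minimum one-way signal delay, in nanoseconds, over distance_m meters."""
    return distance_m / SPEED_OF_LIGHT_M_PER_S * 1e9


per_meter_ns = one_way_delay_ns(1)      # roughly 3.3 ns
delay_150m_ns = one_way_delay_ns(150)   # roughly 500 ns
print(f"{per_meter_ns:.2f} ns per meter, {delay_150m_ns:.0f} ns over 150 m")
```

Signals in real copper or fiber travel somewhat slower than light in a vacuum, so these numbers are a lower bound on the delay.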

SDM is able to overcome this problem and use pooled memory at high speed because of the way it strategically divides up the data being processed. It ensures that the data about to be processed is kept local to the CPU, while other data is held in the external memory pool. Although this does not transmit data faster than the speed of light, it is more efficient than processing all of the data locally with the CPU. In this way, SDM can actually process data faster than if all of the data were kept locally.
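One way to see why placement matters is the textbook weighted-average model of a two-tier memory. The sketch below is not Kove's algorithm, and it does not attempt to reproduce the faster-than-local claim. It only shows that when most accesses hit local memory, the remote delay contributes little to the average. The function name and both latency figures are assumptions chosen for illustration.

```python
# Textbook weighted-average model of a two-tier memory; NOT Kove's algorithm.
# Both latency figures below are illustrative assumptions.

def effective_latency_ns(local_fraction: float,
                         local_ns: float,
                         remote_ns: float) -> float:
    """Average access time when local_fraction of accesses are served locally."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


LOCAL_NS = 100.0   # assumed local memory access time
REMOTE_NS = 600.0  # assumed remote access time, including the trip to the pool

for frac in (0.5, 0.9, 0.99):
    avg = effective_latency_ns(frac, LOCAL_NS, REMOTE_NS)
    print(f"{frac:.0%} local -> {avg:.0f} ns average")
```

Under these assumed numbers, the average falls from 350 ns at 50 percent local to about 105 ns at 99 percent local.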

“We are smart about making sure that the processor gets the memory it needs from the local motherboard,” explains Overton. “The results are amazing.” As an example, he notes that one of his customers, PadsIt, saw a 9 percent decrease in latency using SDM.

Energy savings from pooled memory

Another key feature of the SDM approach is the cut in energy needs. Scientists usually have to run models on whatever server is available, and they often need to run medium-sized models on large servers to accommodate temporary spikes in memory needs. This means running larger, more energy-intensive servers for a relatively small computing job.

But when memory is pooled and allocated dynamically across different servers, as it is with SDM, only the exact amount of server memory required is used. Fewer servers are needed to achieve the same results, so less power is used. Kove says that Red Hat, in collaboration with Supermicro, measured up to 54 percent energy savings using Kove's system. This reduces companies' need to buy terabyte servers for gigabyte jobs, which leads to cost savings and efficiency gains.
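A toy calculation illustrates why pooling reduces the memory that must be provisioned. This is a sketch under made-up numbers, not Kove's allocator, and it says nothing about the 54 percent figure. The job names and memory traces are hypothetical, with each list giving a job's memory demand in gigabytes at successive time steps.

```python
# Toy comparison of static vs. pooled memory provisioning; NOT Kove's allocator.
# The per-job memory traces (GB per time step) are invented for illustration.
jobs = {
    "job_a": [20, 20, 180, 20],
    "job_b": [30, 200, 30, 30],
    "job_c": [25, 25, 25, 190],
}

# Static: every job gets a server sized for its own worst-case spike.
static_gb = sum(max(trace) for trace in jobs.values())

# Pooled: memory is shared, so capacity only has to cover the largest
# combined demand at any single time step.
pooled_gb = max(sum(step) for step in zip(*jobs.values()))

print(f"static: {static_gb} GB, pooled: {pooled_gb} GB")
```

Because the spikes in this example do not coincide, the shared pool needs 245 GB where separately sized servers would need 570 GB in total. If every job spiked at the same moment, the two figures would be equal.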

“It takes 200 milliseconds to provision memory,” says Overton, noting that this is about the time it takes a person to blink. “So you literally blink your eyes and get the memory you need.”

One Kove customer, the global financial messaging network Swift, tested the SDM approach and achieved a 60-fold improvement in speed when training models, compared with a virtual machine running the same job on the same hardware but with traditional memory.

“Imagine if a 60-day job took a single day, or a 60-hour job took a single hour [using our software],” Overton says.


2025-05-06 15:00:00