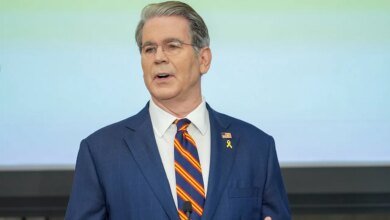

Billionaire PC tycoon Michael Dell is riding the AI gold rush—and he says the party’s far from over even if eventually ‘there’ll be too many’ data centers

Michael Dell, who revolutionized the personal computer industry with Dell Technologies in the late 1990s and is now the world’s 10th-richest person, offered his take on the AI wave his company is helping to enable, and why he believes the peak of data center construction has yet to arrive.

Dell Technologies stock is up 32% in the past year alone thanks to the growing importance of the infrastructure services it provides to clients like OpenAI. While some analysts and technology leaders claim the AI industry is in a bubble, Dell said the boom remains strong due to AI companies’ insatiable appetite for computing power.

If customers were buying without a real need, Dell would be concerned, but “we don’t see that at all,” he told CNBC. “In fact, we see kind of the opposite.”

When it comes to artificial intelligence, Dell said, the company’s customers are facing so much demand of their own that they are running up against the limits of America’s aging power grid.

“Our customers immediately deploy whatever infrastructure they get,” he said. “The limiting factor for many of them seems to be, can they deliver power to the buildings to provide the required energy.”

Dell said the company’s server networking business grew 58% last year. Over the next four years, the company now expects annual revenue growth of 7% to 9%, compared to the 3% to 4% it previously expected. The company said Tuesday that growth in its headline annual earnings per share (EPS), a measure of how much a company makes on each outstanding share, will be 15% or better, compared to the 8% previously expected.

AI stock market boom

Overall, the AI industry is driving a stock market boom that has lifted companies that supply AI infrastructure. Oracle shares jumped about 36% in one day after the company announced a five-year, $300 billion cloud deal with OpenAI last month. This week, AMD shares rose 24% after it said it would partner with OpenAI on AI data centers. Dell Technologies stock is up 39% year to date.

Still, many market watchers are starting to raise alarms about market froth. On Wednesday, the Bank of England’s Financial Policy Committee warned that “equity valuations appear stretched” and cautioned that, given record concentration in the stock market, stocks could be at risk if the impact of AI falls short of expectations.

However, Dell sees the huge demand for AI and the infrastructure that supports it as a sign that good times are still here, at least for now.

“I’m sure at some point there’ll be too many of these things built, but we’re not seeing any signs of that,” Dell said.

2025-10-08 19:08:00