A Comprehensive Tutorial on the Five Levels of Agentic AI Architectures: From Basic Prompt Responses to Fully Autonomous Code Generation and Execution

In this tutorial, we explore five levels of agentic architectures, from the simplest language model calls to a fully autonomous code-generating system. The tutorial is designed to run smoothly on Google Colab. Starting with a basic “simple processor” that just returns the model's output, you will progressively build routing logic, integrate external tools, orchestrate multi-step workflows, and ultimately enable the model to plan, validate, refine, and execute its own Python code. Throughout each section, you will find detailed explanations, self-contained demo functions, and clear prompts that illustrate how to balance human control and machine autonomy in real-world AI applications.

```python
import os
import torch
from transformers import pipeline, AutoTokenizer, AutoModelForCausalLM
import re
import json
import time
import random
from IPython.display import clear_output
```

We import the core Python and third-party libraries: os and time for environment and execution control, torch, and Hugging Face transformers (pipeline, AutoTokenizer, AutoModelForCausalLM) for model loading and inference. We also use re and json to parse LLM outputs, random to generate mock data, and clear_output to keep the Colab display tidy.

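```python
MODEL_NAME = "TinyLlama/TinyLlama-1.1B-Chat-v1.0"

def get_model_and_tokenizer():
    """Load TinyLlama and its tokenizer once, then reuse the cached instances."""
    if not hasattr(get_model_and_tokenizer, "model"):
        print(f"Loading model {MODEL_NAME}...")
        tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
        model = AutoModelForCausalLM.from_pretrained(
            MODEL_NAME,
            # Half precision on GPU; float32 on CPU, where float16 is often slow or unsupported
            torch_dtype=torch.float16 if torch.cuda.is_available() else torch.float32,
            device_map="auto",  # requires the `accelerate` package (preinstalled on Colab)
            low_cpu_mem_usage=True,
        )
        # Cache both objects as attributes on the function object itself
        get_model_and_tokenizer.model = model
        get_model_and_tokenizer.tokenizer = tokenizer
        print("Model loaded successfully!")
    return get_model_and_tokenizer.model, get_model_and_tokenizer.tokenizer
```

Here, we set MODEL_NAME to TinyLlama 1.1B Chat and define get_model_and_tokenizer(), a helper that implements a lazy-loading pattern. On the first call, it downloads and initializes the tokenizer and model (in half precision on a GPU, with automatic device placement) and stores them as attributes on the function object; every subsequent call simply returns the cached instances, avoiding the overhead of reloading.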


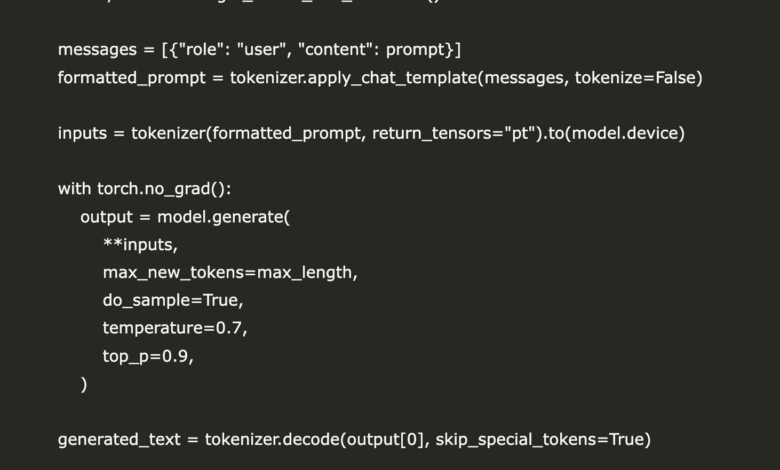

```python
def generate_text(prompt, max_length=512):
    """Generate a chat response from TinyLlama for a single user prompt."""
    model, tokenizer = get_model_and_tokenizer()
    messages = [{"role": "user", "content": prompt}]
    # add_generation_prompt=True appends the assistant-turn marker so the model answers as the assistant
    formatted_prompt = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(formatted_prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output = model.generate(
            **inputs,
            max_new_tokens=max_length,  # caps only the newly generated tokens
            do_sample=True,
            temperature=0.7,
            top_p=0.9,
            pad_token_id=tokenizer.eos_token_id,
        )
    # Decode only the tokens generated after the prompt, so the echoed prompt is not returned
    prompt_length = inputs["input_ids"].shape[-1]
    response = tokenizer.decode(output[0][prompt_length:], skip_special_tokens=True)
    return response.strip()
```

The generate_text function wraps TinyLlama inference: it retrieves the cached model and tokenizer, formats the user's prompt with the chat template (adding the generation prompt so the model replies as the assistant), tokenizes it and moves the inputs to the model's device, then samples a response using temperature and top-p settings. After generation, it decodes only the newly generated tokens, so the returned text contains the assistant's reply without the echoed prompt.
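When only a decoded transcript is available (for example, if the prompt tokens were not sliced off before decoding), the reply has to be recovered from text, and the marker to split on must match the chat template. The helper below is a minimal sketch that assumes a Zephyr-style format, as used by TinyLlama-Chat, in which each assistant turn begins with `<|assistant|>`; `extract_assistant_reply` is a hypothetical name, and the marker should be adjusted for other templates.

```python
def extract_assistant_reply(decoded_text, marker="<|assistant|>"):
    """Return the text after the last assistant marker, or the whole text if absent.

    Assumes a Zephyr-style chat format where each assistant turn starts with
    `marker`; other chat templates use different markers.
    """
    _head, found, tail = decoded_text.rpartition(marker)
    return (tail if found else decoded_text).strip()


transcript = "<|user|>\nName a prime number.\n<|assistant|>\nSeven is prime."
print(extract_assistant_reply(transcript))  # Seven is prime.
print(extract_assistant_reply("plain text"))  # plain text
```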

Level 1: Simple Processor

At the simplest level, the code defines a straightforward text-generation pipeline that treats the model purely as a language processor. When the user provides a prompt, the `simple_processor` function calls the TinyLlama 1.1B Chat model to produce a free-form response and then displays that response directly. Under the hood, `generate_text` relies on `get_model_and_tokenizer`, which loads the model and tokenizer only once by caching them on the function object; it then formats the prompt with the chat template, runs generation with sampling parameters for diversity, and extracts the assistant's reply. This level demonstrates the basic interaction pattern: input is received, output is produced, and program flow remains entirely under human control.

```python
def simple_processor(prompt):
    """Level 1: Simple Processor - Model has no impact on program flow"""
    response = generate_text(prompt)
    return response


def demo_level1():
    print("\n" + "="*50)
    print("LEVEL 1: SIMPLE PROCESSOR DEMO")
    print("="*50)
    print("At this level, the AI has no control over program flow.")
    print("It simply takes input and produces output.\n")
    user_input = input("Enter your question or prompt: ") or "Write a short poem about artificial intelligence."
    print("\nProcessing your request...\n")
    output = simple_processor(user_input)
    print("OUTPUT:")
    print("-"*50)
    print(output)
    print("-"*50)
```

The simple_processor function embodies the Simple Processor level of our hierarchy by treating the model purely as a text generator: it accepts a user-supplied prompt, delegates to generate_text, and returns whatever the model produces without any branching or decision logic. The demo_level1 routine provides a minimal interactive loop: it prints a clear header, requests user input (with a sensible default), calls simple_processor, and displays the raw output, demonstrating the most basic prompt-to-response workflow, in which the AI exerts no influence over program flow.
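Because Level 1 contains no control flow that depends on the model, it is easy to test offline by passing the generator in as a parameter. The sketch below assumes a hypothetical `generate` callable argument in place of the global `generate_text`; `run_simple_processor` and `fake_generate` are illustrative names, and the stub is not a real model.

```python
def run_simple_processor(prompt, generate):
    """Level 1 sketch: forward the prompt to `generate` and return its output unchanged."""
    return generate(prompt)


def fake_generate(prompt):
    """Hypothetical stub LLM that echoes the prompt in upper case."""
    return f"ECHO: {prompt.upper()}"


print(run_simple_processor("hello agents", fake_generate))  # ECHO: HELLO AGENTS
```

In the notebook, passing `generate_text` as the second argument reproduces the behavior of `simple_processor`.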

Level 2: Router

The second level introduces conditional routing based on the model's classification of the user's query. The `router_agent` function first asks the model to classify the query as “technical,” “creative,” or “factual,” then normalizes the model's answer to one of these categories. Depending on the detected category, the query is dispatched to a specialized handler, `handle_technical_query`, `handle_creative_query`, or `handle_factual_query`, each of which wraps the user's query in a system-style prompt tailored to the appropriate tone and purpose. This routing mechanism gives the model partial control over program flow, letting it choose the subsequent interaction path while still relying on human-defined handlers to generate the final output.

```python
def router_agent(user_query):
    """Level 2: Router - Model determines basic program flow"""
    category_prompt = f"""Classify the following query into one of these categories:
    'technical', 'creative', or 'factual'.

    Query: {user_query}

    Return ONLY the category name and nothing else."""
    category_response = generate_text(category_prompt)

    # Normalize the free-form reply to exactly one known category
    category = category_response.lower()
    if "technical" in category:
        category = "technical"
    elif "creative" in category:
        category = "creative"
    else:
        category = "factual"
    print(f"Query classified as: {category}")

    # Dispatch to the specialized handler for that category
    if category == "technical":
        return handle_technical_query(user_query)
    elif category == "creative":
        return handle_creative_query(user_query)
    else:
        return handle_factual_query(user_query)


def handle_technical_query(query):
    system_prompt = f"""You are a technical assistant. Provide detailed technical explanations.

    User query: {query}"""
    response = generate_text(system_prompt)
    return f"[Technical Response]\n{response}"


def handle_creative_query(query):
    system_prompt = f"""You are a creative assistant. Be imaginative and inspiring.

    User query: {query}"""
    response = generate_text(system_prompt)
    return f"[Creative Response]\n{response}"


def handle_factual_query(query):
    system_prompt = f"""You are a factual assistant. Provide accurate information concisely.

    User query: {query}"""
    response = generate_text(system_prompt)
    return f"[Factual Response]\n{response}"


def demo_level2():
    print("\n" + "="*50)
    print("LEVEL 2: ROUTER DEMO")
    print("="*50)
    print("At this level, the AI determines basic program flow.")
    print("It decides which processing path to take.\n")
    user_query = input("Enter your question or prompt: ") or "How do neural networks work?"
    print("\nProcessing your request...\n")
    result = router_agent(user_query)
    print("OUTPUT:")
    print("-"*50)
    print(result)
    print("-"*50)
```

The router_agent function implements routing behavior by first asking the model to classify the user's query as “technical,” “creative,” or “factual,” then normalizing that classification and dispatching the query to the corresponding handler (handle_technical_query, handle_creative_query, or handle_factual_query). The demo_level2 routine provides a clear interface: it prints headers, accepts input (with a default), invokes router_agent, and displays the categorized response, showing how the model can take basic control of program flow by choosing which processing path to follow.
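The substring checks in `router_agent` test for “technical” first, so a reply such as “Creative, not technical” is routed to the technical handler. One alternative, sketched below with hypothetical names `normalize_category` and `CATEGORIES`, picks whichever whole-word label the model mentions first and falls back to a default.

```python
import re

CATEGORIES = ("technical", "creative", "factual")


def normalize_category(model_reply, default="factual"):
    """Map a free-form classification reply to exactly one known category.

    Picks the category whose whole-word label appears earliest in the reply,
    so "Creative (not technical)" maps to "creative"; falls back to `default`.
    """
    text = model_reply.lower()
    positions = {}
    for label in CATEGORIES:
        match = re.search(rf"\b{label}\b", text)
        if match:
            positions[label] = match.start()
    if not positions:
        return default
    return min(positions, key=positions.get)


print(normalize_category("Category: Creative (not technical)"))  # creative
print(normalize_category("I think this is TECHNICAL."))  # technical
print(normalize_category("No idea"))  # factual
```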

Level 3: Tool Calling

At the third level, the code lets the model decide which external tool to call by embedding a JSON-based function-selection protocol in the prompt. The `tool_calling_agent` function presents the user's question along with a menu of available tools (a weather lookup, a simulated web search, current date and time retrieval, or a direct response) and instructs the model to reply with a valid JSON message naming the chosen tool and its parameters. A greedy regular expression then captures the text from the first `{` to the last `}` in the model's reply and parses it as JSON; if no match is found or parsing fails, the code safely falls back to a direct response. Once the tool and its arguments are identified, the corresponding Python function is executed, its result is captured, and a final model call folds that result into a coherent answer. This pattern bridges LLM reasoning and concrete code execution by letting the model orchestrate which helper functions or APIs to call.
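Before executing a model-chosen tool, it can help to check the chosen name and arguments against the function's signature, so that a hallucinated tool or a misspelled parameter produces a structured error instead of an exception. The sketch below assumes the model's JSON has already been parsed into a dict; `dispatch_tool`, `TOOLS`, `fake_weather`, and `fake_clock` are hypothetical stand-ins, not part of the tutorial's code.

```python
import inspect


def fake_weather(location):
    """Hypothetical stub standing in for get_weather."""
    return {"location": location, "conditions": "Sunny"}


def fake_clock(timezone="UTC"):
    """Hypothetical stub standing in for get_date_time."""
    return {"timezone": timezone}


# Registry mapping the tool names the model may emit to Python callables
TOOLS = {"get_weather": fake_weather, "get_date_time": fake_clock}


def dispatch_tool(selection):
    """Validate a parsed {"tool": ..., "parameters": {...}} dict, then call the tool."""
    name = selection.get("tool")
    tool = TOOLS.get(name) if isinstance(name, str) else None
    if tool is None:
        return {"error": f"unknown tool: {name!r}"}
    params = selection.get("parameters") or {}
    try:
        # Signature.bind raises TypeError for missing or unexpected arguments
        inspect.signature(tool).bind(**params)
    except TypeError as exc:
        return {"error": str(exc)}
    return tool(**params)


print(dispatch_tool({"tool": "get_weather", "parameters": {"location": "Paris"}}))
print(dispatch_tool({"tool": "get_weather", "parameters": {"city": "Paris"}}))
```

Returning an error dict rather than raising lets an agent pass the failure back to the model as an ordinary tool result.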

```python
from datetime import datetime, timezone as dt_timezone
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError


def tool_calling_agent(user_query):
    """Level 3: Tool Calling - Model determines how functions are executed"""
    tool_selection_prompt = f"""Based on the user query, select the most appropriate tool from the following list:
    1. get_weather: Get the current weather for a location
    2. search_information: Search for specific information on a topic
    3. get_date_time: Get current date and time
    4. direct_response: Provide a direct response without using tools

    USER QUERY: {user_query}

    INSTRUCTIONS:
    - Return your response in valid JSON format
    - Include the tool name and any required parameters
    - For get_weather, include location parameter
    - For search_information, include query and depth parameter (basic or detailed)
    - For get_date_time, include timezone parameter (optional)
    - For direct_response, no parameters needed

    Example output format: {{"tool": "get_weather", "parameters": {{"location": "New York"}}}}"""
    tool_selection_response = generate_text(tool_selection_prompt)

    default_selection = {"tool": "direct_response", "parameters": {}}
    try:
        # Greedy match: captures everything from the first "{" to the last "}"
        json_match = re.search(r'({.*})', tool_selection_response, re.DOTALL)
        if json_match:
            tool_selection = json.loads(json_match.group(1))
        else:
            print("Could not parse tool selection. Defaulting to direct response.")
            tool_selection = default_selection
    except json.JSONDecodeError:
        print("Invalid JSON in tool selection. Defaulting to direct response.")
        tool_selection = default_selection
    if not isinstance(tool_selection, dict):
        tool_selection = default_selection

    tool_name = tool_selection.get("tool", "direct_response")
    parameters = tool_selection.get("parameters")
    if not isinstance(parameters, dict):
        parameters = {}  # guard against a missing, null, or malformed "parameters" field
    print(f"Selected tool: {tool_name}")

    # Execute the Python function that corresponds to the model's choice
    if tool_name == "get_weather":
        location = parameters.get("location", "Unknown")
        tool_result = get_weather(location)
    elif tool_name == "search_information":
        query = parameters.get("query", user_query)
        depth = parameters.get("depth", "basic")
        tool_result = search_information(query, depth)
    elif tool_name == "get_date_time":
        timezone = parameters.get("timezone", "UTC")
        tool_result = get_date_time(timezone)
    else:
        return generate_text(f"Please provide a helpful response to: {user_query}")

    # Second model call: turn the structured tool output into a user-facing answer
    final_prompt = f"""User Query: {user_query}
    Tool Used: {tool_name}
    Tool Result: {json.dumps(tool_result)}

    Based on the user's query and the tool result above, provide a helpful response."""
    final_response = generate_text(final_prompt)
    return final_response


def get_weather(location):
    """Mock weather lookup: conditions are derived from the location name, temperature is random."""
    weather_conditions = ["Sunny", "Partly cloudy", "Overcast", "Light rain", "Heavy rain", "Thunderstorms", "Snowy", "Foggy"]
    temperatures = {
        "cold": list(range(-10, 10)),
        "mild": list(range(10, 25)),
        "hot": list(range(25, 40)),
    }
    location_hash = sum(ord(c) for c in str(location))
    condition_index = location_hash % len(weather_conditions)
    season = ["winter", "spring", "summer", "fall"][location_hash % 4]
    temp_range = temperatures["cold"] if season in ["winter", "fall"] else temperatures["hot"] if season == "summer" else temperatures["mild"]
    temperature = random.choice(temp_range)
    return {
        "location": location,
        "temperature": f"{temperature}°C",
        "conditions": weather_conditions[condition_index],
        "humidity": f"{random.randint(30, 90)}%",
    }


def search_information(query, depth="basic"):
    """Mock web search returning placeholder results."""
    mock_results = [
        f"First result about {query}",
        f"Second result discussing {query}",
        f"Third result analyzing {query}",
    ]
    if depth == "detailed":
        mock_results.extend([
            f"Fourth detailed analysis of {query}",
            f"Fifth comprehensive overview of {query}",
            f"Sixth academic paper on {query}",
        ])
    return {
        "query": query,
        "results": mock_results,
        "depth": depth,
        "sources": [f"source{i}.com" for i in range(1, len(mock_results) + 1)],
    }


def get_date_time(timezone="UTC"):
    """Return the current time in an IANA zone (e.g. "Europe/Paris"), falling back to UTC."""
    try:
        tz = ZoneInfo(timezone)
    except (ZoneInfoNotFoundError, ValueError, TypeError):
        timezone, tz = "UTC", dt_timezone.utc
    return {
        "current_datetime": datetime.now(tz).strftime("%Y-%m-%d %H:%M:%S"),
        "timezone": timezone,
    }


def demo_level3():
    print("\n" + "="*50)
    print("LEVEL 3: TOOL CALLING DEMO")
    print("="*50)
    print("At this level, the AI selects which tools to use and with what parameters.")
    print("It can process the results from tools to create a final response.\n")
    user_query = input("Enter your question or prompt: ") or "What's the weather like in San Francisco?"
    print("\nProcessing your request...\n")
    result = tool_calling_agent(user_query)
    print("OUTPUT:")
    print("-"*50)
    print(result)
    print("-"*50)
```

In Level 3, the tool_calling_agent function prompts the model to choose from a predefined set of tools, such as a weather lookup, a mock web search, or date/time retrieval, by returning a JSON object with the selected tool's name and parameters. It then safely parses that JSON, calls the corresponding Python function to obtain structured data, and makes a follow-up model call to integrate the tool's output into a coherent, user-facing response. The get_date_time tool reports the current time in the requested IANA timezone and falls back to UTC for unknown zone names.
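The greedy regex used in Level 3 captures everything from the first `{` to the last `}`, so a reply that adds trailing text containing a brace yields invalid JSON and falls back to a direct response. One alternative, sketched below with the standard library's `json.JSONDecoder.raw_decode`, tries to decode an object at each `{` and stops at the end of the first valid one. `extract_first_json_object` is a hypothetical helper name.

```python
import json


def extract_first_json_object(text):
    """Return the first decodable JSON object embedded in `text`, or None.

    Unlike a greedy r'({.*})' regex, this stops at the end of the first valid
    object, so trailing prose containing braces does not break parsing.
    """
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            obj, _end = decoder.raw_decode(text, start)
            return obj  # decoding from a "{" always yields a dict
        except json.JSONDecodeError:
            start = text.find("{", start + 1)
    return None


reply = 'Sure! {"tool": "get_weather", "parameters": {"location": "Paris"}} Hope that helps {:)}'
print(extract_first_json_object(reply))  # {'tool': 'get_weather', 'parameters': {'location': 'Paris'}}
```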

Level 4: Multi-Step Agent

The fourth level extends the tool-calling pattern into a multi-step agent that manages its own workflow and state. The `MultiStepAgent` class maintains an internal memory of user inputs, tool outputs, and agent actions. Each iteration builds a planning prompt that summarizes the entire memory and asks the model either to choose one of several tools, such as simulated web search, information extraction, text summarization, or report creation, or to finish the task with a final output. After executing the selected tool and appending its result to memory, the process repeats until the model returns a “complete” action or the maximum number of steps is reached. Finally, the agent assembles its memory into a coherent final response. This structure shows how an LLM can orchestrate complex, multi-stage processes while calling external functions and refining its plan based on previous results.

```python
class MultiStepAgent:
    """Level 4: Multi-Step Agent - Model controls iteration and program continuation"""

    def __init__(self):
        self.tools = {
            "search_web": self.search_web,
            "extract_info": self.extract_info,
            "summarize_text": self.summarize_text,
            "create_report": self.create_report,
        }
        self.memory = []
        self.max_steps = 5

    def run(self, user_task):
        self.memory.append({"role": "user", "content": user_task})
        steps_taken = 0
        while steps_taken < self.max_steps:
            next_action = self.determine_next_action()
            if next_action.get("action") == "complete":
                # Fall back to a synthesized answer if the model omitted "output"
                return next_action.get("output") or self.generate_final_response()

            tool_name = next_action.get("tool")
            tool_args = next_action.get("args") or {}
            print(f"\n📌 Step {steps_taken + 1}: Using tool '{tool_name}' with arguments: {tool_args}")
            if isinstance(tool_name, str) and tool_name in self.tools and isinstance(tool_args, dict):
                try:
                    tool_result = self.tools[tool_name](**tool_args)
                except Exception as e:
                    # e.g. missing/unexpected arguments or values of the wrong type
                    tool_result = {"error": f"Tool '{tool_name}' failed: {e}"}
            else:
                tool_result = {"error": f"Unknown tool or malformed arguments: {tool_name}"}
            self.memory.append({"role": "tool", "content": json.dumps(tool_result)})
            steps_taken += 1
        return self.generate_final_response("Maximum steps reached. Here's what I've found so far.")

    def determine_next_action(self):
        context = "Current memory state:\n"
        for item in self.memory:
            if item["role"] == "user":
                context += f"USER INPUT: {item['content']}\n\n"
            elif item["role"] == "tool":
                context += f"TOOL RESULT: {item['content']}\n\n"
        prompt = f"""{context}
        Based on the above information, determine the next action to take.
        Choose one of the following options:
        1. search_web: Search for information (args: query)
        2. extract_info: Extract specific information from a text (args: text, target_info)
        3. summarize_text: Create a summary of text (args: text)
        4. create_report: Create a structured report (args: title, content)
        5. complete: Task is complete (include final output)

        Respond with a JSON object with the following structure:
        For tools: {{"action": "tool", "tool": "tool_name", "args": {{tool-specific arguments}}}}
        For completion: {{"action": "complete", "output": "final output text"}}

        Only return the JSON object and nothing else."""
        next_action_response = generate_text(prompt)
        try:
            json_match = re.search(r'({.*})', next_action_response, re.DOTALL)
            next_action = json.loads(json_match.group(1)) if json_match else None
        except json.JSONDecodeError:
            next_action = None
        if not isinstance(next_action, dict):
            # Planning failed: end the run with whatever has been gathered so far
            return {"action": "complete", "output": "I encountered an error in planning. Here's what I know so far: " + self.generate_final_response("Error in planning")}
        self.memory.append({"role": "assistant", "content": next_action_response})
        return next_action

    def generate_final_response(self, prefix=""):
        context = "Task history:\n"
        for item in self.memory:
            if item["role"] == "user":
                context += f"USER INPUT: {item['content']}\n\n"
            elif item["role"] == "tool":
                context += f"TOOL RESULT: {item['content']}\n\n"
            elif item["role"] == "assistant":
                context += f"AGENT ACTION: {item['content']}\n\n"
        prompt = f"""{context}
        {prefix} Generate a comprehensive final response that addresses the original user task."""
        final_response = generate_text(prompt)
        return final_response

    # Mock tools: deterministic or randomized stand-ins for real services

    def search_web(self, query):
        time.sleep(1)  # simulate network latency
        query_hash = sum(ord(c) for c in query)
        num_results = (query_hash % 3) + 2
        results = []
        for i in range(num_results):
            results.append(f"Result {i+1}: Information about '{query}' related to aspect {chr(97 + i)}.")
        return {"query": query, "results": results}

    def extract_info(self, text, target_info):
        time.sleep(0.5)
        return {
            "extracted_info": f"Extracted information about '{target_info}' from the text: The text indicates that {target_info} is related to several key aspects mentioned in the content.",
            "confidence": round(random.uniform(0.7, 0.95), 2),
        }

    def summarize_text(self, text):
        time.sleep(0.5)
        word_count = len(text.split())
        return {
            "summary": f"Summary of the provided text ({word_count} words): The text discusses key points related to the subject matter, highlighting important aspects and providing context.",
            "original_length": word_count,
            "summary_length": round(word_count * 0.3),
        }

    def create_report(self, title, content):
        time.sleep(0.7)
        report_sections = [
            "## Introduction",
            f"This report provides an overview of {title}.",
            "",
            "## Key Findings",
            content,
            "",
            "## Conclusion",
            f"This analysis of {title} highlights several important aspects that warrant consideration.",
        ]
        return {
            "report": "\n".join(report_sections),
            "word_count": len(content.split()),
            "section_count": 3,
        }


def demo_level4():
    print("\n" + "="*50)
    print("LEVEL 4: MULTI-STEP AGENT DEMO")
    print("="*50)
    print("At this level, the AI manages the entire workflow, deciding which tools")
    print("to use, when to use them, and determining when the task is complete.\n")
    user_task = input("Enter a research or analysis task: ") or "Research quantum computing recent developments and create a brief report"
    print("\nProcessing your request... (this may take a minute)\n")
    agent = MultiStepAgent()
    result = agent.run(user_task)
    print("\nFINAL OUTPUT:")
    print("-"*50)
    print(result)
    print("-"*50)
```

The MultiStepAgent class maintains an evolving memory of user inputs and tool outputs, then repeatedly prompts the LLM to decide its next action (search the web, extract information, summarize text, create a report, or finish), executes the chosen tool, and appends the result until the task is complete or the step limit is reached. Unknown tools and bad arguments are recorded in memory as error results instead of crashing the loop. In doing so, it demonstrates a Level 4 agent that orchestrates multi-step workflows by letting the model control iteration and program continuation.
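Stripped of the LLM calls, the Level 4 control loop is small. The sketch below replaces the model with a hypothetical `scripted_planner` so the loop can be run and tested deterministically; `run_agent` and `demo_tools` are illustrative names, and the tool is a lambda stub rather than the class's `search_web` method.

```python
import json


def run_agent(task, plan_next, tools, max_steps=5):
    """Minimal multi-step loop: the planner picks actions until 'complete' or max_steps.

    `plan_next(memory)` must return {"action": "tool", "tool": ..., "args": {...}}
    or {"action": "complete", "output": ...}.
    """
    memory = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = plan_next(memory)
        if action.get("action") == "complete":
            return action.get("output", ""), memory
        result = tools[action["tool"]](**action.get("args", {}))
        memory.append({"role": "tool", "content": json.dumps(result)})
    return "Maximum steps reached.", memory


def scripted_planner(memory):
    """Hypothetical stand-in for an LLM planner: search once, then finish."""
    tool_calls = sum(1 for item in memory if item["role"] == "tool")
    if tool_calls == 0:
        return {"action": "tool", "tool": "search_web", "args": {"query": "qubits"}}
    return {"action": "complete", "output": f"Done after {tool_calls} tool call(s)."}


demo_tools = {"search_web": lambda query: {"query": query, "results": [f"About {query}"]}}
output, memory = run_agent("Research qubits", scripted_planner, demo_tools)
print(output)  # Done after 1 tool call(s).
```

Swapping `scripted_planner` for a function that prompts the model and parses its JSON reply recovers the structure of `MultiStepAgent.run`.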

Level 5: Fully Autonomous Agent

At the most advanced level, the `AutonomousAgent` class demonstrates a closed-loop system in which the model not only plans and executes but also generates and validates new Python code. After recording the user's task, the agent asks the model to produce a detailed plan, then prompts it to generate a self-contained solution, which is automatically stripped of Markdown formatting. A subsequent validation step asks the model to check the code for syntax or logic issues; if any are reported, the agent asks the model to refine the code. The resulting code is then wrapped with helper scaffolding (a print wrapper, captured-output buffers, and result-capture logic) and executed with `exec()` in a fresh, isolated namespace. This namespace isolation is not a security sandbox: the generated code still runs with the full privileges of the notebook process. Finally, the agent assembles a professional report explaining what was done, how it was accomplished, and the final results. This level illustrates a truly autonomous AI system that can extend its own capabilities through dynamic code generation and execution.
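The `AutonomousAgent` class executes generated code with Python's `exec()` inside the notebook process, so a runaway loop or a hard crash in that code affects the whole session. A somewhat stronger, though still not secure, option is to run the code in a separate Python process with a timeout. The sketch below uses the standard library's `subprocess.run`; `run_in_subprocess` is a hypothetical helper, and real isolation would additionally require OS-level restrictions such as containers.

```python
import subprocess
import sys


def run_in_subprocess(code, timeout=10.0):
    """Run `code` in a fresh Python interpreter and capture its output.

    This isolates crashes and runaway loops from the notebook process, but it is
    NOT a security sandbox: the child still has the user's file and network access.
    """
    try:
        completed = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,  # the child is killed if it runs longer than this
        )
    except subprocess.TimeoutExpired:
        return {"error": f"timed out after {timeout} seconds"}
    return {
        "returncode": completed.returncode,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


print(run_in_subprocess("print(sum(range(10)))")["stdout"])  # 45
```

A generated script that loops forever, such as `while True: pass`, returns an `"error"` entry after the timeout instead of freezing the notebook.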

````python
class AutonomousAgent:
    """Level 5: Fully Autonomous Agent - Model creates & executes new code"""

    def __init__(self):
        self.memory = []

    def run(self, user_task):
        self.memory.append({"role": "user", "content": user_task})

        print("🧠 Planning solution approach...")
        planning_message = self.plan_solution(user_task)
        self.memory.append({"role": "assistant", "content": planning_message})

        print("💻 Generating solution code...")
        generated_code = self.generate_solution_code()
        self.memory.append({"role": "assistant", "content": f"Generated code: ```python\n{generated_code}\n```"})

        print("🔍 Validating code...")
        validation_result = self.validate_code(generated_code)
        if not validation_result["valid"]:
            # A single refinement pass; the refined code is not re-validated
            print("⚠️ Code validation found issues - refining...")
            refined_code = self.refine_code(generated_code, validation_result["issues"])
            self.memory.append({"role": "assistant", "content": f"Refined code: ```python\n{refined_code}\n```"})
            generated_code = refined_code
        else:
            print("✅ Code validation passed")

        try:
            print("🚀 Executing solution...")
            execution_result = self.safe_execute_code(generated_code, user_task)
            self.memory.append({"role": "system", "content": f"Execution result: {execution_result}"})

            # Generate a final report
            print("📝 Creating final report...")
            final_report = self.create_final_report(execution_result)
            return final_report
        except Exception as e:
            return f"Error executing the solution: {str(e)}\n\nGenerated code was:\n```python\n{generated_code}\n```"

    def plan_solution(self, task):
        prompt = f"""Task: {task}
        You are an autonomous problem-solving agent. Create a detailed plan to solve this task.
        Include:
        1. Breaking down the task into subtasks
        2. What algorithms or approaches you'll use
        3. What data structures are needed
        4. Any external resources or libraries required
        5. Expected challenges and how to address them
        Provide a step-by-step plan.
        """
        return generate_text(prompt)

    def generate_solution_code(self):
        context = "Task and planning information:\n"
        for item in self.memory:
            if item["role"] == "user":
                context += f"USER TASK: {item['content']}\n\n"
            elif item["role"] == "assistant":
                context += f"PLANNING: {item['content']}\n\n"
        prompt = f"""{context}
        Generate clean, efficient Python code that solves this task. Include comments to explain the code.
        The code should be self-contained and able to run inside a Python script or notebook.
        Only include the Python code itself without any markdown formatting.
        """
        code = generate_text(prompt)
        # Drop Markdown fence lines (e.g. ```python or ```) in case the model adds them anyway
        code = re.sub(r'^```[a-zA-Z]*[ \t]*$', '', code, flags=re.MULTILINE).strip()
        return code

    def validate_code(self, code):
        prompt = f"""Code to validate:
        ```python
        {code}
        ```
        Examine the code for the following issues:
        1. Syntax errors
        2. Logic errors
        3. Inefficient implementations
        4. Security concerns
        5. Missing error handling
        6. Import statements for unavailable libraries
        If the code has any issues, describe them in detail. If the code looks good, state "No issues found."
        """
        validation_response = generate_text(prompt)
        # Heuristic: the code counts as valid only if the reviewer explicitly says so
        if "no issues" in validation_response.lower() or "code looks good" in validation_response.lower():
            return {"valid": True, "issues": None}
        else:
            return {"valid": False, "issues": validation_response}

    def refine_code(self, original_code, issues):
        prompt = f"""Original code:
        ```python
        {original_code}
        ```
        Issues identified:
        {issues}
        Please provide a corrected version of the code that addresses these issues.
        Only include the Python code itself without any markdown formatting.
        """
        refined_code = generate_text(prompt)
        refined_code = re.sub(r'^```[a-zA-Z]*[ \t]*$', '', refined_code, flags=re.MULTILINE).strip()
        return refined_code

    def safe_execute_code(self, code, user_task):
        # Preamble prepended to the generated code: common imports plus helpers
        # that capture printed output and store a final result
        safe_imports = """
# Standard library imports
import math
import random
import re
import time
import json
from datetime import datetime

# Define a function to capture printed output
captured_output = []
original_print = print

def safe_print(*args, **kwargs):
    output = " ".join(str(arg) for arg in args)
    captured_output.append(output)
    original_print(output)

print = safe_print

# Define a result variable to store the final output
result = None

# Function to store the final result
def store_result(value):
    global result
    result = value
    return value
"""
        # Postscript: if the code never called store_result, fall back to the last user variable
        result_capture = """
# Store the final result if not already done
if globals().get('result') is None:
    try:
        # Look for variables that might contain the final result
        potential_results = [var for var in list(globals()) if not var.startswith('_') and var not in
                             ['math', 'random', 're', 'time', 'json', 'datetime',
                              'captured_output', 'original_print', 'safe_print',
                              'print', 'result', 'store_result']]
        if potential_results:
            # Use the last defined variable as the result
            store_result(globals()[potential_results[-1]])
    except Exception:
        pass
"""
        full_code = safe_imports + "\n# User code starts here\n" + code + "\n\n" + result_capture
        first_lines = "\n".join(code.split('\n')[:3])
        print(f"\nExecuting (first 3 lines):\n{first_lines}")

        # Run everything in ONE namespace dict used as globals. With separate
        # globals/locals dicts, functions such as safe_print and store_result
        # could not see top-level names like captured_output or result.
        # NOTE: this isolates names only; exec() is NOT a security sandbox.
        namespace = {}
        try:
            exec(full_code, namespace)
            result = namespace.get('result')
            return {
                "output": namespace.get('captured_output', []),
                "result": result if result is not None else "No explicit result returned",
            }
        except Exception as e:
            return {"error": str(e)}

    def create_final_report(self, execution_result):
        if isinstance(execution_result.get('output'), list):
            output_text = "\n".join(execution_result.get('output', []))
        else:
            output_text = str(execution_result.get('output', ''))
        result_text = str(execution_result.get('result', ''))
        error_text = execution_result.get('error', '')
        context = "Task history:\n"
        for item in self.memory:
            if item["role"] == "user":
                context += f"USER TASK: {item['content']}\n\n"
        prompt = f"""{context}
        EXECUTION OUTPUT:
        {output_text}

        EXECUTION RESULT:
        {result_text}

        {f"ERROR: {error_text}" if error_text else ""}

        Create a final report that explains the solution to the original task. Include:
        1. What was done
        2. How it was accomplished
        3. The final results
        4. Any insights or conclusions drawn from the analysis
        Format the report in a professional, easy to read manner.
        """
        return generate_text(prompt)


def demo_level5():
    print("\n" + "="*50)
    print("LEVEL 5: FULLY AUTONOMOUS AGENT DEMO")
    print("="*50)
    print("At this level, the AI generates and executes code to solve complex problems.")
    print("It can create, validate, refine, and run custom code solutions.\n")
    user_task = input("Enter a data analysis or computational task: ") or "Analyze a dataset of numbers [10, 45, 65, 23, 76, 12, 89, 32, 50] and create visualizations of the distribution"
    print("\nProcessing your request... (this may take a minute or two)\n")
    agent = AutonomousAgent()
    result = agent.run(user_task)
    print("\nFINAL REPORT:")
    print("-"*50)
    print(result)
    print("-"*50)
````

The AutonomousAgent class embodies a fully autonomous agent by maintaining a running memory of the user's task and systematically orchestrating five core phases: planning, code generation, validation, execution, and reporting. When it starts, the agent prompts the model to create a detailed plan for the task and stores that plan in memory. It then asks the model to generate self-contained Python code based on the plan, strips any Markdown fences, and validates the code by asking the model to review it for syntax, logic, performance, and security issues. If the review reports problems, the agent asks the model for one refinement pass; the refined code is not re-validated. The final code is then wrapped in scaffolding that captures printed output and automatically extracts a result, and it is executed with exec() in a fresh namespace, which isolates names but not privileges and is therefore not a security sandbox. Finally, the agent feeds the execution results back into the model to produce a polished report explaining what was done, how it was accomplished, and what insights were gained. The demo_level5 function provides an interactive entry point that accepts the user's task, runs the agent, and prints the final report.
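The validation step relies entirely on the model's own review, and it treats the code as valid only if the reply contains “no issues” or “code looks good.” A cheap deterministic complement, sketched below, is to parse the code with the standard library's `ast` module before asking the model: `ast.parse` catches genuine syntax errors without executing anything, and walking the tree lists imports that `importlib.util.find_spec` cannot locate. `check_syntax` and `missing_modules` are hypothetical helper names; their messages could be passed to `refine_code` as concrete issues.

```python
import ast
import importlib.util


def check_syntax(code):
    """Return None if `code` parses, else a short error message.

    ast.parse never executes the code, and it only catches syntax errors:
    logic bugs and unsafe calls still get through.
    """
    try:
        ast.parse(code)
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"
    return None


def missing_modules(code):
    """Return top-level modules imported by `code` that cannot be found locally."""
    names = set()
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return sorted(name for name in names if importlib.util.find_spec(name) is None)


snippet = "import math\nimport surely_not_a_real_module_xyz\nprint(math.pi)"
print(check_syntax(snippet))  # None
print(missing_modules(snippet))  # ['surely_not_a_real_module_xyz']
```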

Main Function: Running All Five Levels

```python
def main():
    while True:
        clear_output(wait=True)
        print("\n" + "="*50)
        print("AI AGENT LEVELS DEMO")
        print("="*50)
        print("\nThis notebook demonstrates the 5 levels of AI agents:")
        print("1. Simple Processor - Model has no impact on program flow")
        print("2. Router - Model determines basic program flow")
        print("3. Tool Calling - Model determines how functions are executed")
        print("4. Multi-Step Agent - Model controls iteration and program continuation")
        print("5. Fully Autonomous Agent - Model creates & executes new code")
        print("6. Quit")
        choice = input("\nSelect a level to demo (1-6): ").strip()
        if choice == "1":
            demo_level1()
        elif choice == "2":
            demo_level2()
        elif choice == "3":
            demo_level3()
        elif choice == "4":
            demo_level4()
        elif choice == "5":
            demo_level5()
        elif choice == "6":
            print("\nThank you for exploring the AI Agent levels!")
            break
        else:
            print("\nInvalid choice. Please select 1-6.")
        input("\nPress Enter to return to the main menu...")


if __name__ == "__main__":
    main()
```

Finally, the main function presents a simple interactive menu that clears the Colab output for readability, lists all five agent levels along with a quit option, and dispatches the user's choice to the corresponding demo function before waiting for input to return to the menu. This structure provides a cohesive, CLI-style interface that lets you explore each level in sequence without running cells manually.
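The if/elif chain in `main` can also be expressed as a dictionary that maps each menu key to its action, which keeps the mapping in one place as levels are added. The sketch below uses hypothetical stub actions instead of the real `demo_level` functions so it runs without the model; `dispatch_choice` and `stub_actions` are illustrative names.

```python
def dispatch_choice(choice, actions):
    """Look up and run the action for a menu choice; report invalid input."""
    action = actions.get(choice.strip())
    if action is None:
        return "Invalid choice. Please select 1-6."
    return action()


# Hypothetical stubs; in the notebook these would be demo_level1, demo_level2, ...
# The default argument binds each level number at lambda creation time.
stub_actions = {str(level): (lambda level=level: f"running level {level}") for level in range(1, 6)}
stub_actions["6"] = lambda: "quit"

print(dispatch_choice("3", stub_actions))  # running level 3
print(dispatch_choice(" 6", stub_actions))  # quit
print(dispatch_choice("7", stub_actions))  # Invalid choice. Please select 1-6.
```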

In conclusion, by working through these five levels, we have gained practical insight into the principles of agentic AI and the trade-offs between control, flexibility, and autonomy. We have seen how a system can evolve from straightforward prompt-response behavior to complex decision-making pipelines and, finally, to self-directed code generation and execution. Whether you aim to prototype an intelligent assistant, build data pipelines, or experiment with emerging AI capabilities, this progression offers a roadmap for designing robust and scalable agents.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform draws more than 2 million monthly views, illustrating its popularity among readers.


2025-04-25 19:38:00