Tencent Hunyuan Releases HunyuanOCR: a 1B Parameter End to End OCR Expert VLM

Tencent Hunyuan has released HunyuanOCR, a 1B-parameter vision language model specializing in optical character recognition and document understanding. The model is built on the original Hunyuan multimedia architecture and works to discover, analyze and extract information, visually answer questions and translate text images through a single end-to-end pipeline.

HunyuanOCR is a lightweight alternative to generic VLMs like the Gemini 2.5 and Qwen3 VL that still matches or beats them in OCR-centric tasks. It targets production use cases such as document analysis, card and receipt extraction, video subtitle extraction, and multilingual document translation.

Architecture, ViT native resolution plus lightweight LLM

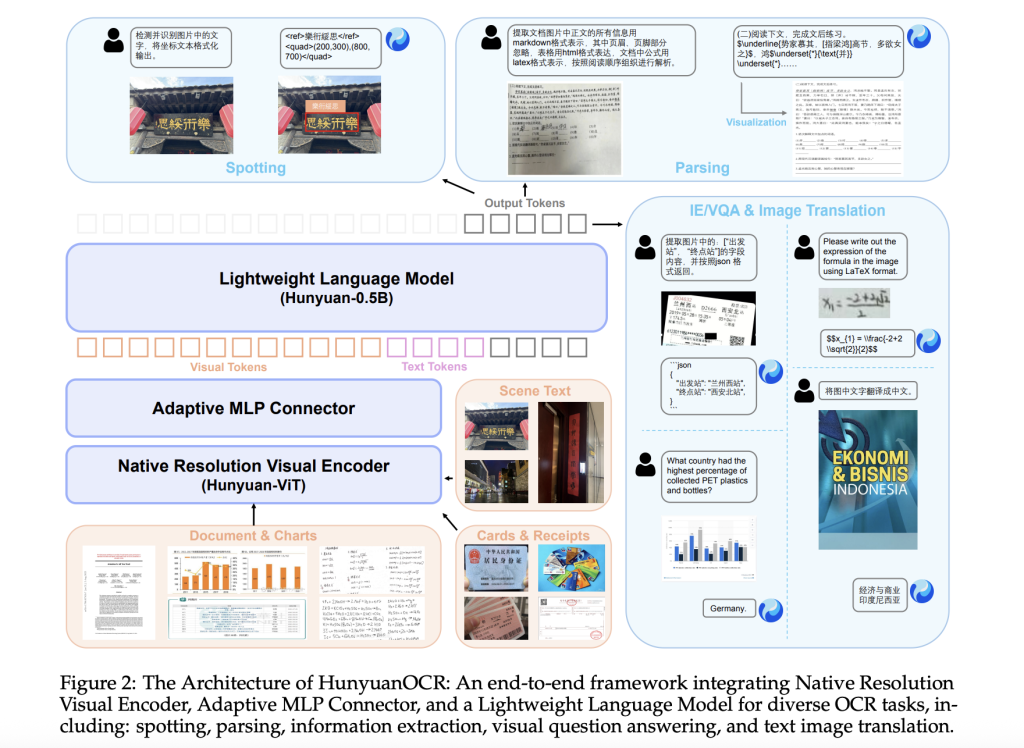

HunyuanOCR is used 3 main unitsa native-resolution visual encoder called Hunyuan ViT, an adaptive MLP connector, and a lightweight language model. The encoder is based on the SigLIP-v2-400M and is expanded to support arbitrary input resolutions through adaptive correction that maintains the original aspect ratio. Images are divided into patches according to their original proportions and processed with global attention, improving recognition of long lines of text, long documents and low-quality scans.

The Adaptive MLP Connector performs learnable clustering on the spatial dimension. It compresses dense visual codes into shorter sequences, while retaining information from dense text areas. This reduces the length of the sequence passed to the language model and reduces computation, while preserving details relevant to OCR.

The language model is based on the densely designed Hunyuan 0.5B model and uses XD RoPE. XD RoPE divides rotary position embeddings into 4 subspaces of text, height, width and time. This model gives an original way to align the 1D token arrangement with the 2D layout and 3D spatiotemporal structure. As a result, the same stack can handle multi-column pages, cross-page flows, and video frame sequences.

Training and inference follow a complete model from end to end. There is no external layout analysis or post-processing model in the loop. All tasks are expressed as natural language prompts and handled with a single forward pass. This design eliminates error propagation across flow stages and simplifies the deployment process.

Data and pre-workout recipe

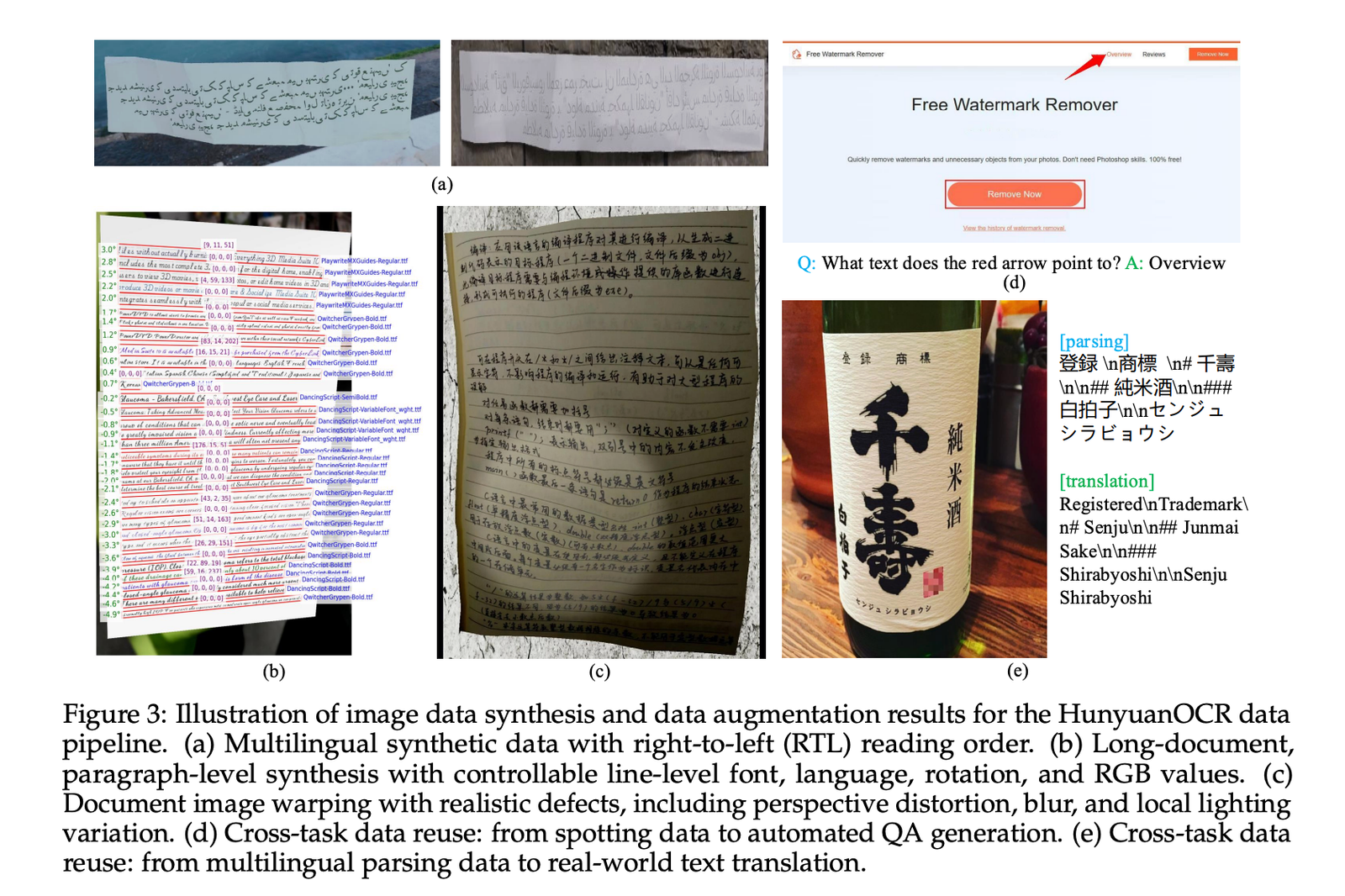

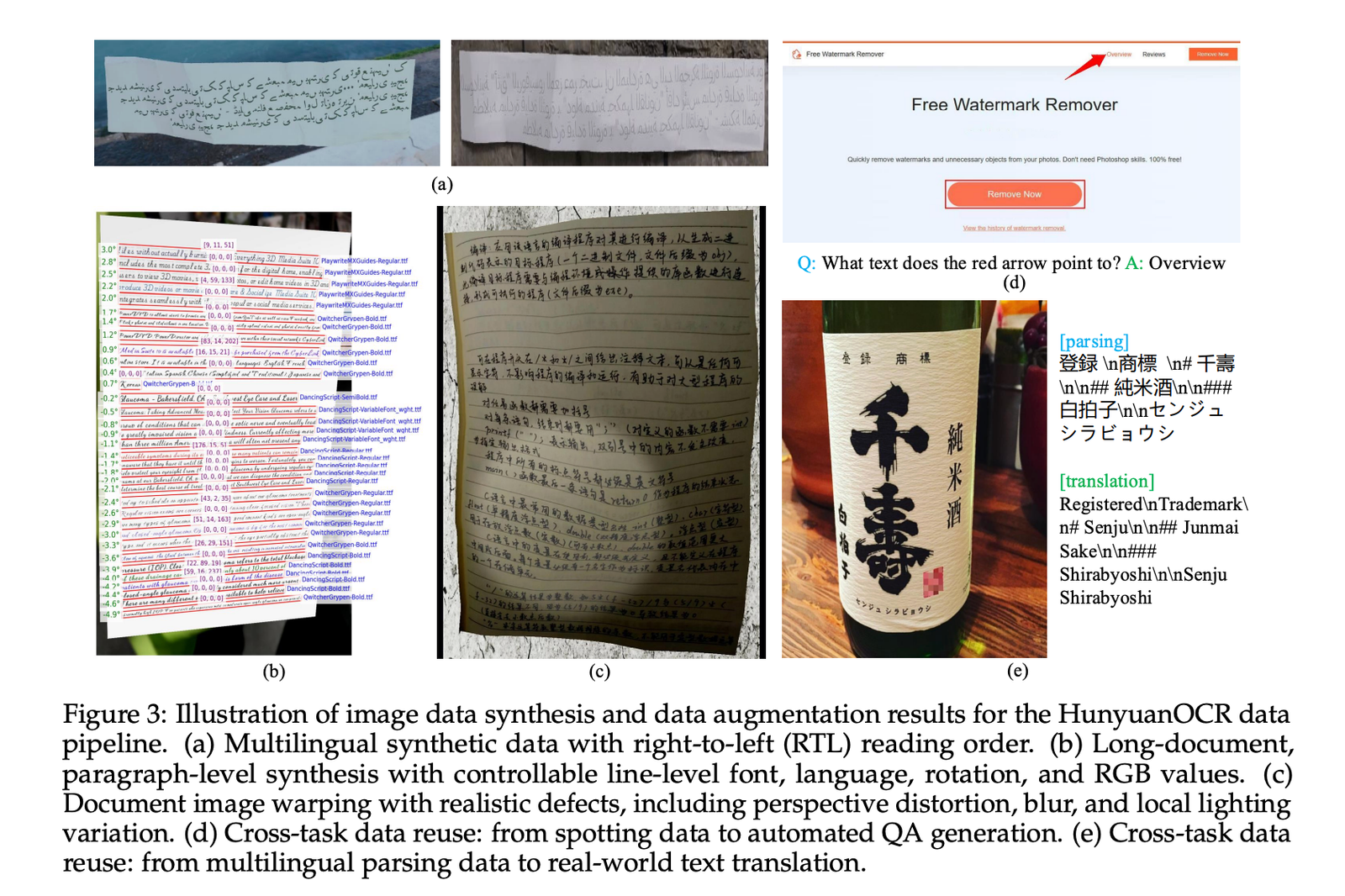

The data pipeline builds over 200 million image-text pairs, across 9 real-world scenarios, including street views, documents, advertisements, handwritten texts, screenshots, cards, certificates, invoices, game interfaces, video frames, and artistic typography. The body covers more than 130 languages.

Synthetic data comes from a multilingual generator that supports right-to-left scripting and paragraph-level display. The pipeline controls font, language, rotation, and RGB values, and applies warp, blur, and local lighting changes to simulate handheld shots and other difficult conditions.

Pre-training follows 4 stages. Phase 1 performs vision language alignment with pure text, synthetic recognition and analysis data, and global caption data, using 50 billion tokens and 8KB of context. Phase 2 runs multi-modal pre-training on 300B tokens mixing pure text with synthetic detection, analysis, translation and VQA samples. Phase 3 expands the context length to 32 KB with 80 bytes of tokens focusing on long documents and long texts. Phase 4 is an application-oriented fine-tuning of 24B codes of annotated human data and hard-coded passive data, while preserving 32B context and standardized instruction templates.

Reinforce learning with verifiable rewards

After supervised training, HunyuanOCR is further improved through reinforcement learning. The research team uses GRPO and reinforcement learning with verifiable reward setting for structured tasks. For text detection, the reward is based on the intersection via uniform matching of merged boxes with the edit distance normalized to the text. For document parsing, the bonus uses a natural edit distance between the generated structure and the reference.

For VQA and translation, the system uses LLM as an arbiter. VQA uses a binary reward that checks for semantic matching. Translation uses the COMET method to score and credit the LLM [0, 5]normalized to [0, 1]. The training framework imposes strict length limits and formats, and assigns a zero reward when output exceeds or breaks the schema, stabilizing optimization and encouraging valid JSON or structured output.

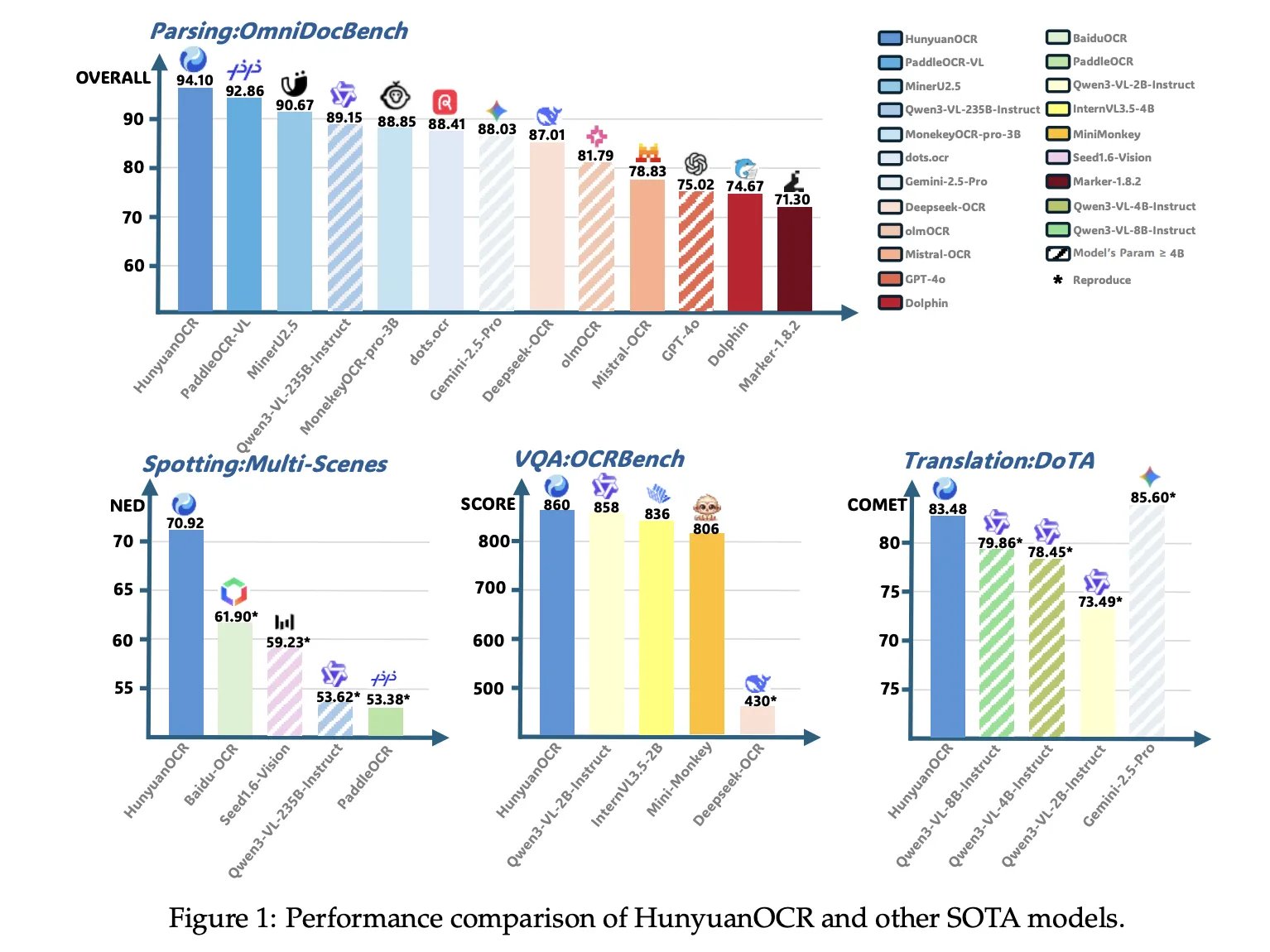

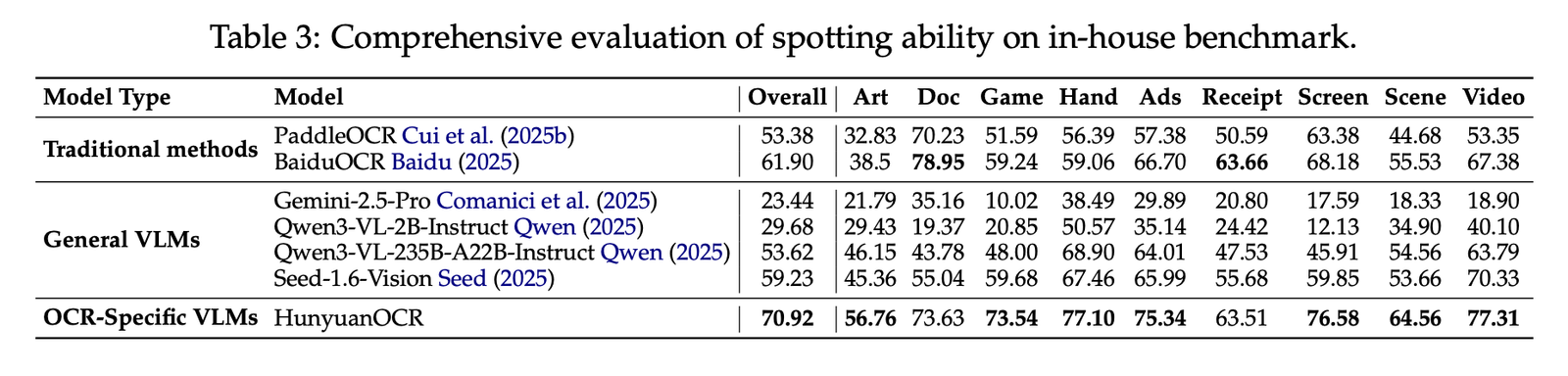

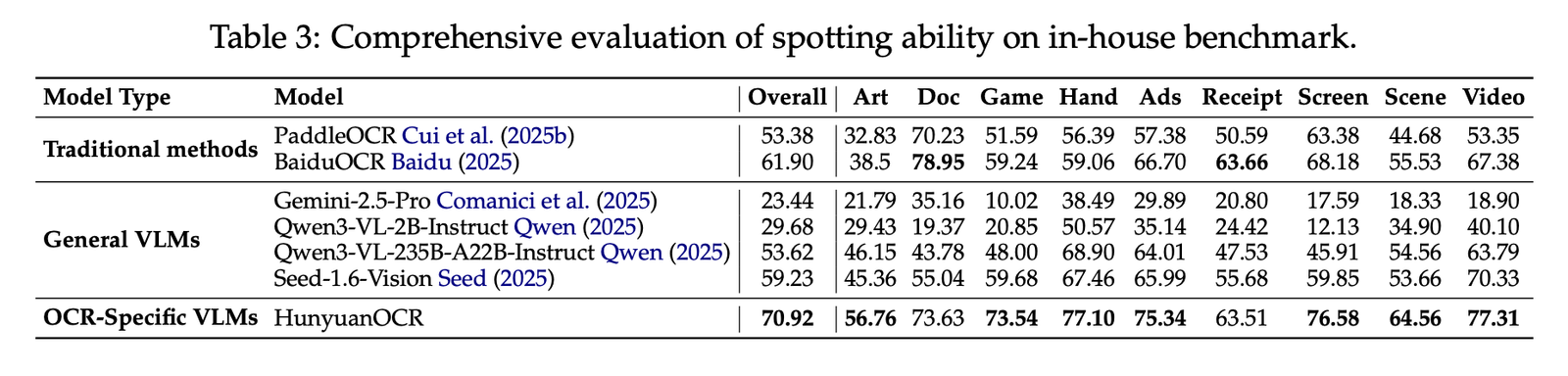

Benchmarking results,Model 1B competes with larger VLMs

In the internal text recognition benchmark of 900 images across 9 categories, HunyuanOCR reaches an overall score of 70.92. It outperforms traditional pipeline approaches such as PaddleOCR and BaiduOCR and also generic VLMs such as Gemini 2.5 Pro, Qwen3 VL 2B, Qwen3 VL 235B and Seed 1.6 Vision, despite using much fewer parameters.

In OmniDocBench, HunyuanOCR scored 94.10 overall, with 94.73 in formulas and 91.81 in tables. In the Wild OmniDocBench variant, which prints and recaptures documents under creases and lighting changes, it scored 85.21 overall. In DocML, a multilingual parsing standard across 14 non-Chinese and non-English languages, it reaches 91.03, and the paper reports the latest results across all 14 languages.

For information extraction and VQA, HunyuanOCR reaches an accuracy of 92.29 on cards, 92.53 on receipts, and 92.87 on video subtitles. On OCRBench, it scored 860 points, which is higher than DeepSeek OCR on a similar scale and close to larger public VLMs like the Qwen3 VL 2B Instruct and Gemini 2.5 Pro.

In text image translation, HunyuanOCR uses the DoTA standard and an internal suite based on DocML. It achieved a strong COMET score in DoTA for document translation from English to Chinese, and the model won first place in the Track 2.2 OCR Free Model Small Model in the ICDAR 2025 DIMT competition.

Key takeaways

- Built-in end to end OCR VLM: HunyuanOCR is an OCR-focused vision language model with a 1B parameter that links ViT at a native resolution of 0.4B to a 0.5B Hunyuan language model through an MLP compiler, and runs detection, parsing, information extraction, VQA, and translation in an end-to-end instruction-driven pipeline without external mapping or detection modules.

- Unified support for diverse OCR scenarios: The model was trained on over 200 million image-text pairs across 9 scenarios, including documents, street views, advertisements, handwritten content, screenshots, cards, invoices, game interfaces and video frames, with over 130 languages covered in training and support for over 100 languages in deployment.

- Data pipeline plus reinforcement learning: The training uses a 4-stage recipe, vision language alignment, multi-modal pre-training, long context pre-training and application-oriented fine-tuning, followed by reinforcement learning with group relative policy optimization and verifiable rewards for discovery, analysis, VQA and translation.

- Robust standard results for 3B submodels

HunyuanOCR reached 94.1 on OmniDocBench for document understanding, and scored 860 on OCRBench, which is reported as state-of-the-art for vision language models with less than 3B parameters, while also outperforming several commercial OCR APIs and larger open models such as Qwen3 VL 4B on core OCR benchmarks.

Editorial notes

HunyuanOCR is a strong signal that OCR VLMs are maturing into practical infrastructure, not just standards. Tencent combines an end-to-end 1B parameter architecture with Native Vision Transformer, Adaptive MLP Connector, and RL with verifiable bonuses to deliver a single model covering discovery, analysis, IE, VQA, and translation across 100+ languages, and it does so while reaching leading scores on OCRBench for 3B submodels and 94.1 on OmniDocBench. Overall, HunyuanOCR represents an important shift toward embedded, instruction-driven OCR engines that are realistic for production deployment.

verify Paper, form weight and repo. Feel free to check out our website GitHub page for tutorials, codes, and notebooks. Also, feel free to follow us on twitter Don’t forget to join us 100k+ mil SubReddit And subscribe to Our newsletter. I am waiting! Are you on telegram? Now you can join us on Telegram too.

Asif Razzaq is CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of AI for social good. His most recent endeavor is the launch of the AI media platform, Marktechpost, which features in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand by a broad audience. The platform has more than 2 million views per month, which shows its popularity among the masses.

🙌 FOLLOW MARKTECHPOST: Add us as a favorite source on Google.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-11-26 19:07:00