NVIDIA AI Releases Orchestrator-8B: A Reinforcement Learning Trained Controller for Efficient Tool and Model Selection

How can an AI system learn to choose the right model or tool for each step of the task instead of always relying on one big model for everything? Researchers from NVIDIA released Orchestral instrumenta new method for training a small language model to act as a coordinator – the “brain” of a heterogeneous tool-wielding agent

policy">From individual model agents to coordination policy

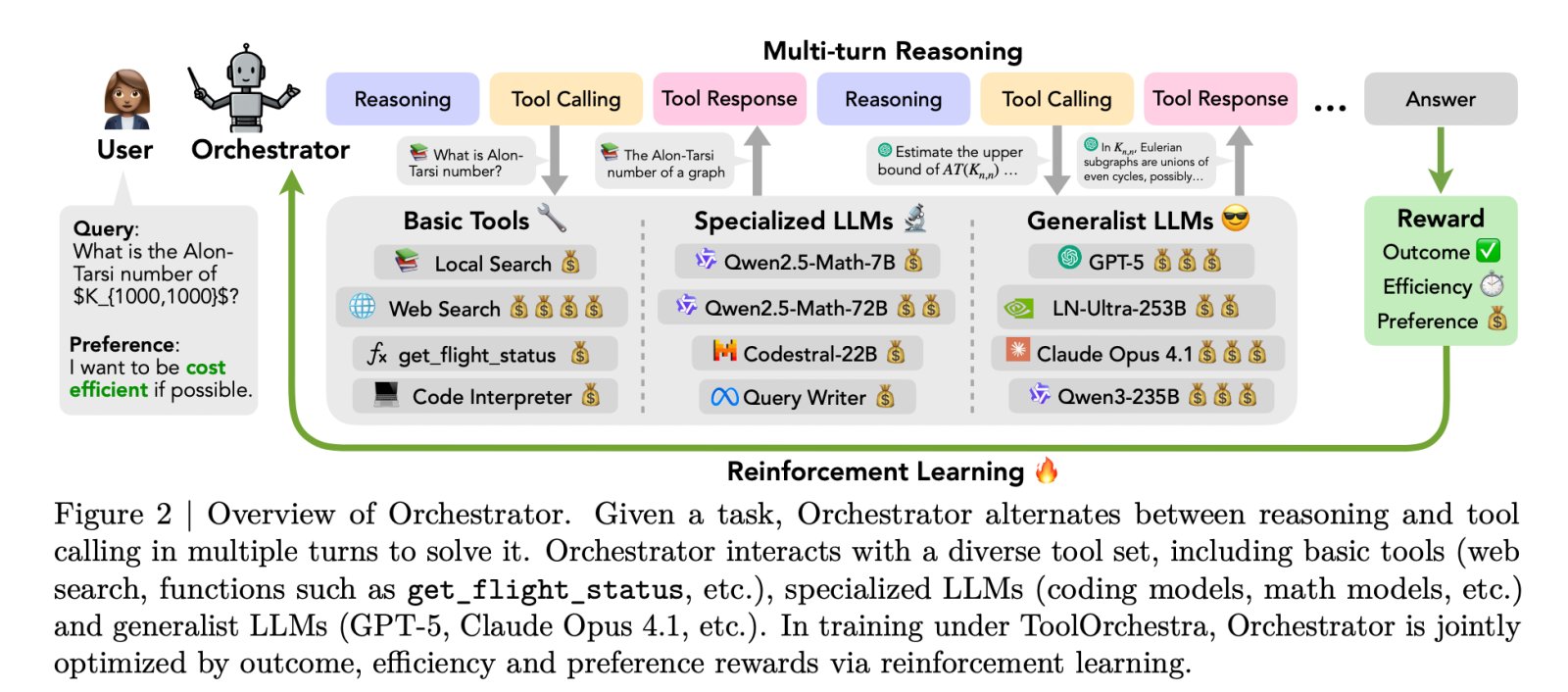

Most current agents follow a simple pattern. One large form like GPT-5 receives a prompt describing the available tools, and then decides when to call a web search or code compiler. All high-level inferences are still within the same model. ToolOrchestra changes this setting. It trains a custom control model called “Orchestra-8B‘, which treats both classical tools and other LLMs as callable components.

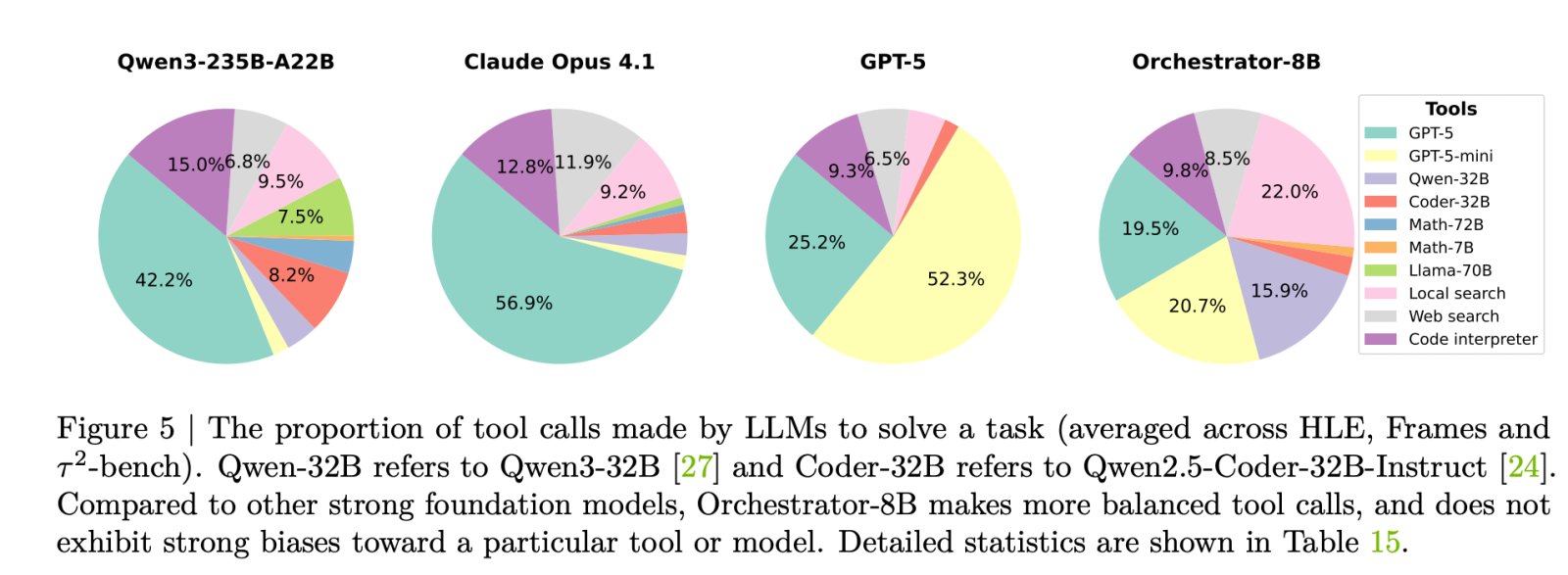

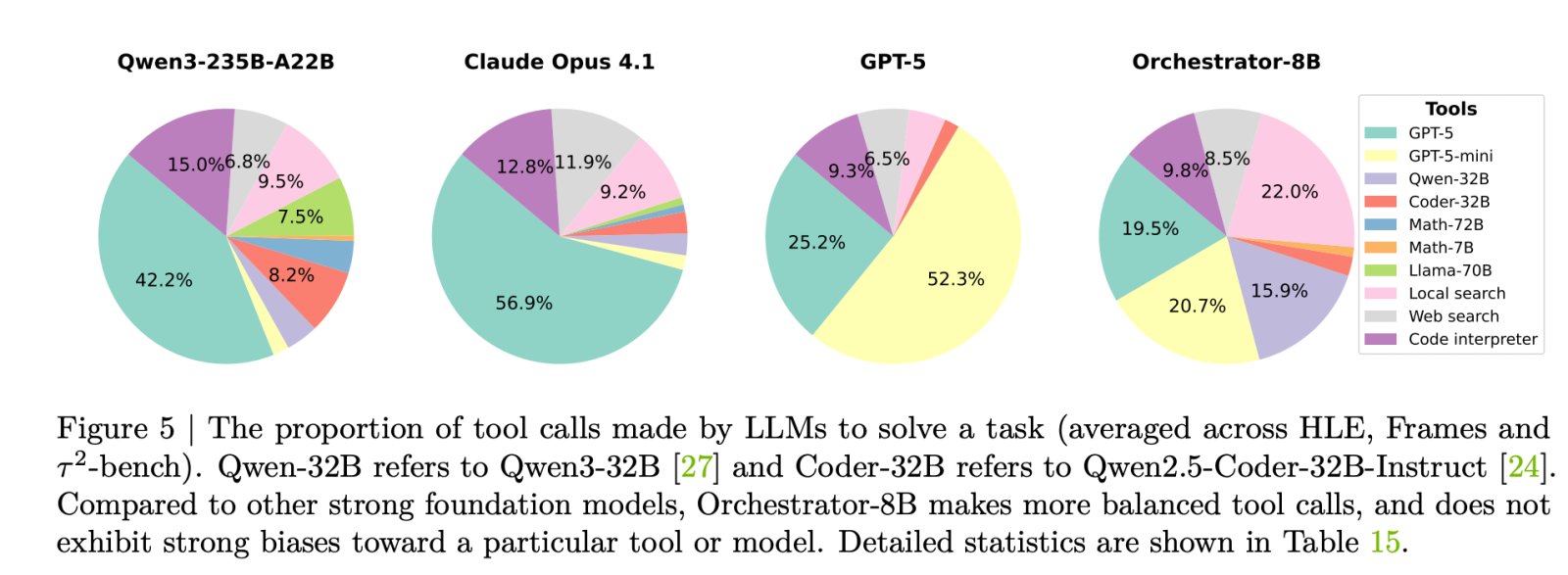

An experimental study in the same paper shows why naive motivation is not enough. When Qwen3-8B is asked to route between GPT-5, GPT-5 mini, Qwen3-32B, and Qwen2.5-Coder-32B, it delegates 73 percent of the cases to GPT-5. When GPT-5 acts as its own formatter, it calls GPT-5 or GPT-5 mini in 98 percent of cases. The research team calls these biases self-reinforcement and other-reinforcement biases. Routing policy uses robust models and ignores cost instructions.

Instead, ToolOrchestra explicitly trains a small orchestrator on this routing problem, using reinforcement learning across complete multi-turn paths.

What is Coordinator 8B?

Orchestrator-8B is an 8B parameter decoder only. It was built by fine-tuning the Qwen3-8B as a synchronization model and released on Hugging Face.

At inference time, the system runs a multi-cycle loop that exchanges inference and tool calls. The offer has been made Three main steps. FirstlyOrchestrator 8B reads user instructions and an optional description of natural language preferences, for example a request to prioritize low latency or to avoid web searching. secondIt generates an internal chain of thought and thinking style and plans action. thirdit selects a tool from the available set and issues a structured tool call in a standardized JSON format. The environment executes this call, appends the result as an observation, and then feeds it back in the next step. The process stops when the end signal is issued or when a maximum of 50 cycles are reached.

Tool cover Three main groups. Basic tools include Tavily web search, a Python Sandbox code compiler and a local Faiss cursor built using Qwen3-Embedding-8B. Specialized LLM certifications include Qwen2.5-Math-72B, Qwen2.5-Math-7B, and Qwen2.5-Coder-32B. Common LLM tools include GPT-5, GPT-5 mini, Llama 3.3-70B-Instruct, and Qwen3-32B. All tools share the same schema with names, natural language descriptions, and written parameter specifications.

End-to-end reinforcement learning with multi-objective rewards

Orchestral instrument He formulates the entire workflow as a Markov decision process. The status contains conversation history, previous tool calls, notes, and user preferences. Procedures are the next scripting step, including inference tokens and a tool call diagram. After up to 50 steps, the environment calculates a numerical reward for the complete path.

The reward has Three components. The score reward is binary and depends on whether the path solves the task. For open-ended answers, GPT-5 is used as a referee to compare the model output to the reference. Efficiency bonuses penalize both financial cost and wall clock latency. Token usage for private and open source tools is set to financial cost using the public API and Together AI pricing. Preference payoff measures the extent to which tool use matches a user’s preference vector that can increase or decrease weight on cost, latency, or specific tools. These components are combined into a single scalar using a preference vector.

The policy has been improved using Improving the group’s relative policy GRPO, a form of Gradient Policy Reinforcement Learning that normalizes rewards within sets of paths for the same task. The training process involves filters that drop trajectories with invalid instrumental recall format or poor reward variance to stabilize the improvement.

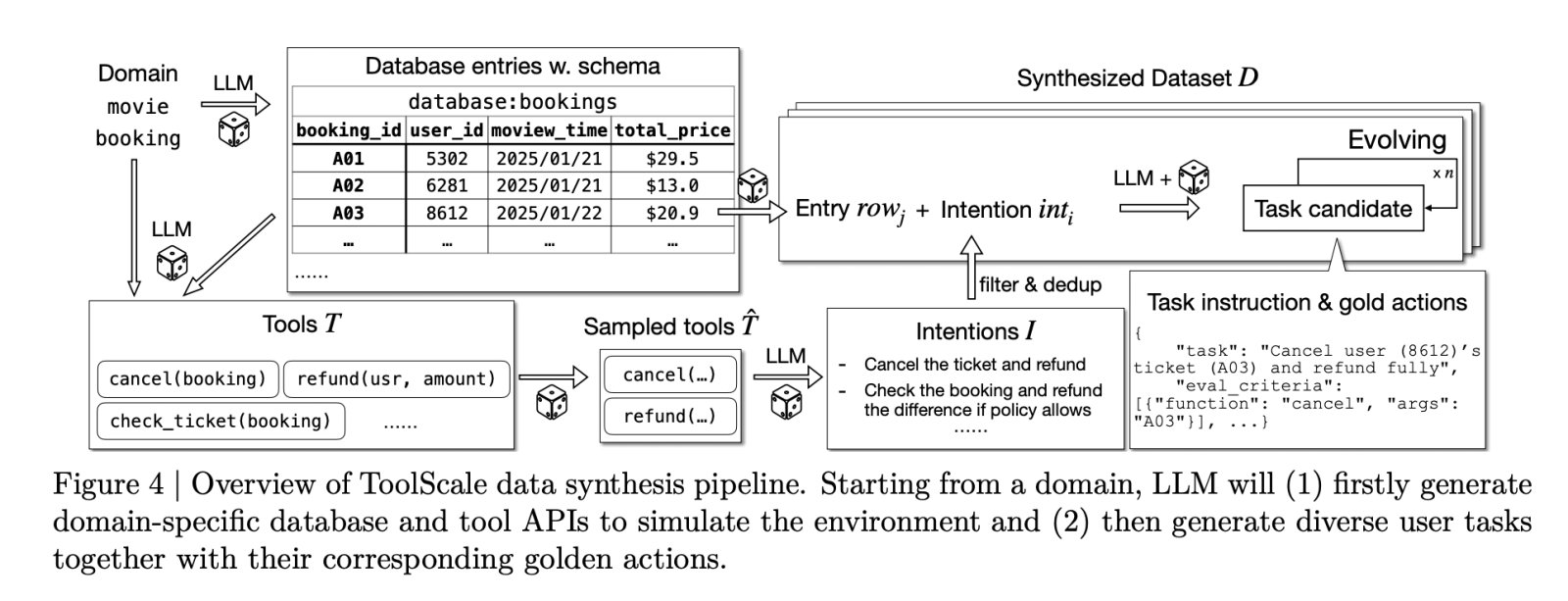

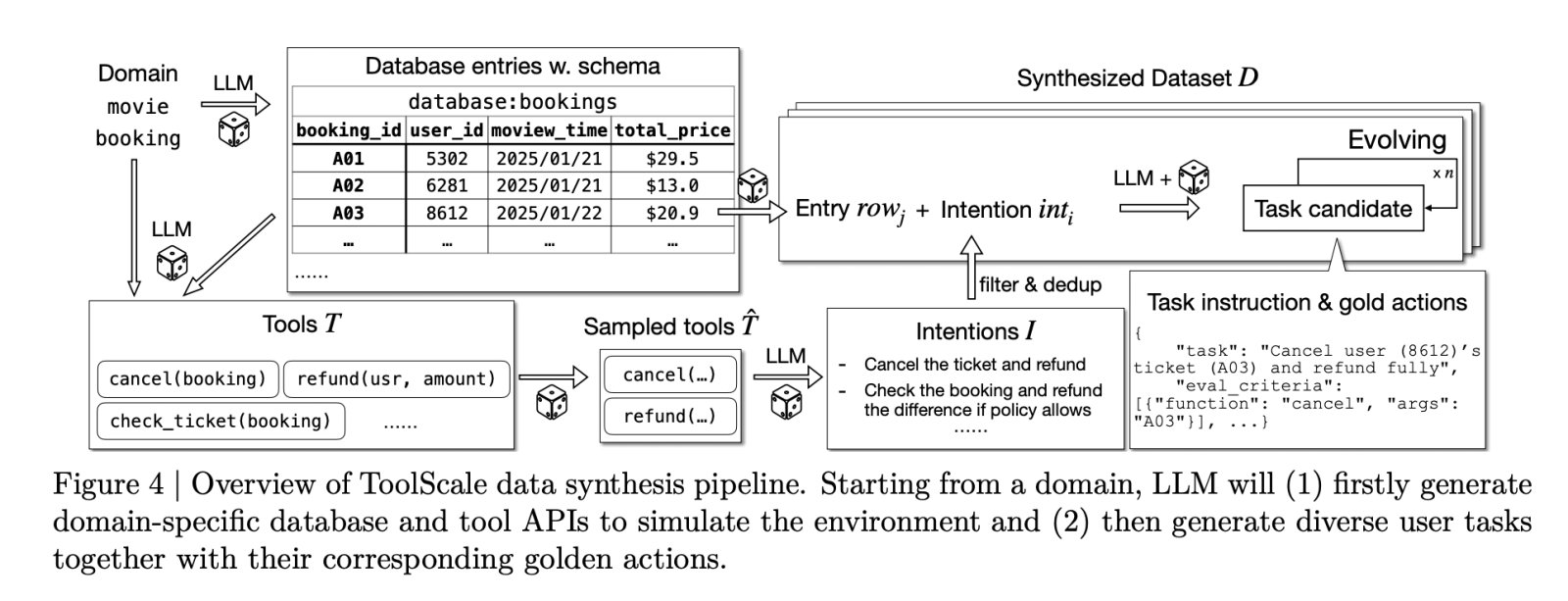

To make this training possible on a large scale, The research team plans to introduce ToolScale,A synthetic dataset for multi-step tool recall tasks. For each domain, LLM creates a database schema, database entries, domain-specific APIs, and then various user tasks with ground truth sequences of function calls and required intermediate information.

Benchmark results and cost file

The NVIDIA research team is evaluating Orchestra-8B On three tough criteria, the final test for humanity, tires and seat τ². These measures target long-term thinking, concreteness in retrieval, and recall of functions in a dual control environment.

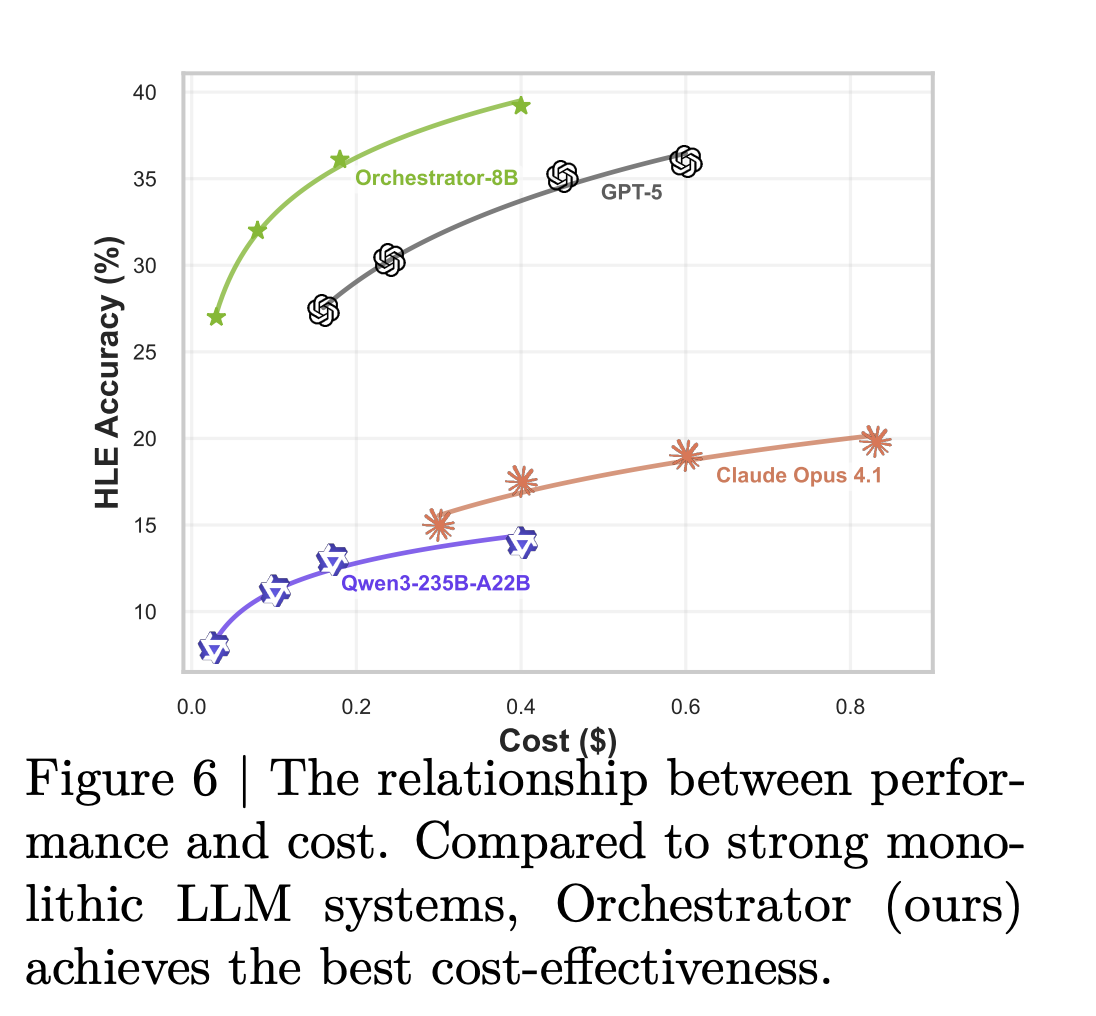

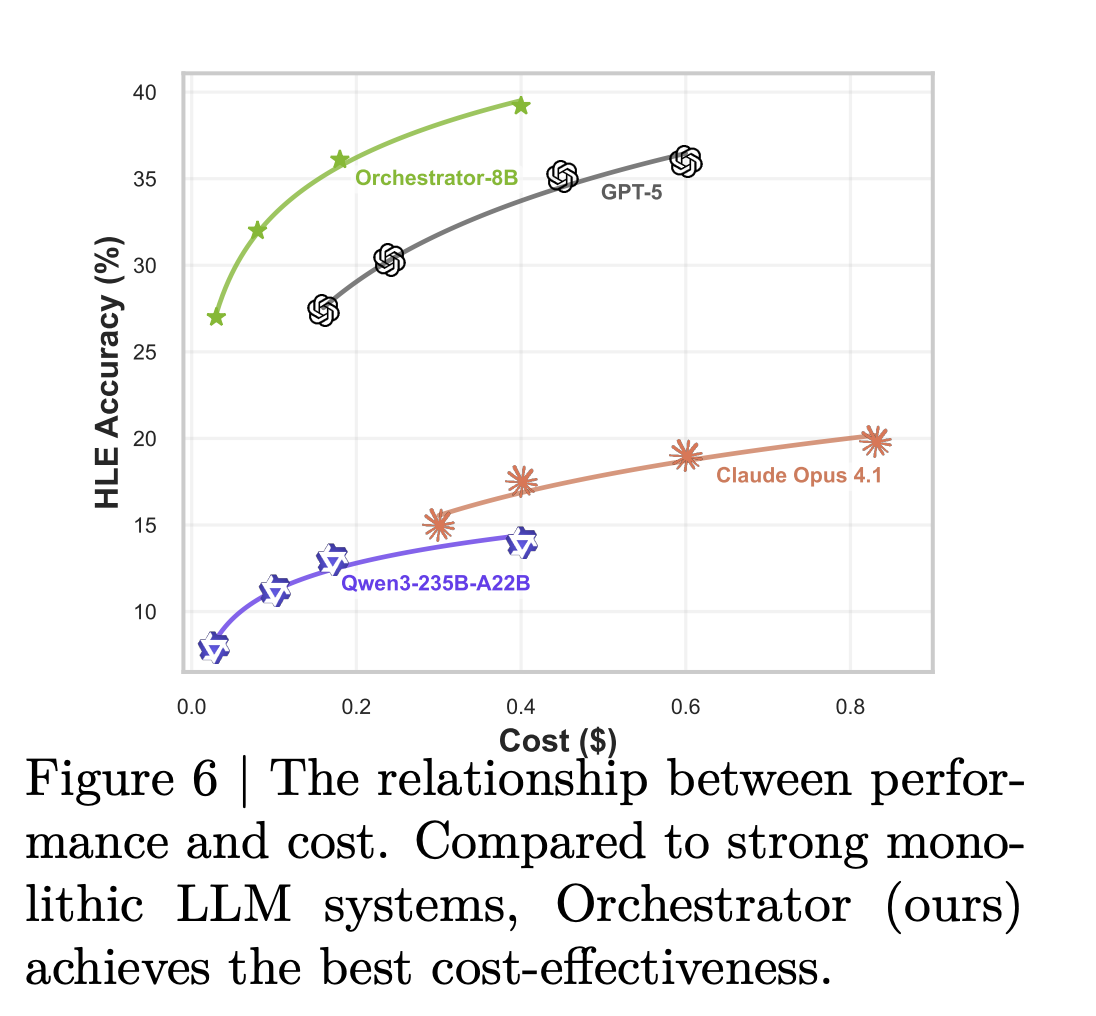

On Humanity’s Last Exam text-only questions, the Orchestrator-8B has an accuracy of 37.1 percent. GPT-5 with basic tools reaches 35.1 percent on the same setup. In frames, Orchestrator-8B achieves 76.3 percent versus GPT-5’s 74.0 percent with tools. On the τ² Bench, the Orchestrator-8B scored 80.2 percent versus 77.7 percent for the GPT-5 with basic tools.

The efficiency gap is even greater. In a configuration that uses the basic tools as well as the specialized and general LLM tools, Orchestrator-8B averages 9.2 cents and a latency of 8.2 minutes per query, averaged over Humanity’s test and frameworks. In the same configuration, GPT-5 costs 30.2 cents and takes 19.8 minutes on average. A typical card sums this up at about 30 percent of the financial cost and is 2.5 times faster for Orchestrator-8B compared to GPT-5.

Analysis of tool use supports this picture. Claude Opus 4.1 is used as a formatter that calls GPT-5 most of the time. GPT-5 is used as the format and GPT-5 mini is preferred. The Orchestrator-8B distributes calls more evenly across powerful models, cheaper models, local search and retrieval, and the code compiler, and reaches higher accuracy at a lower cost for the same role budget.

Generalization experiments replace training-time artifacts with unseen models such as OpenMath Llama-2-70B, DeepSeek-Math-7B-Instruct, Codestral-22B-v0.1, Claude Sonnet-4.1, and Gemma-3-27B. The Orchestrator-8B still achieves the best trade-off between accuracy, cost, and latency among all baselines in this setting. A separate test suite for preference perception shows that the Orchestrator-8B also tracks a user’s tool use preferences more closely than the GPT-5, Claude Opus-4.1, and Qwen3-235B-A22B under the same reward measure.

Key takeaways

- ToolOrchestra trains an 8B parameter coordination model, Orchestrator-8B, that selects and sequences tools and LLMs to solve multi-step agent tasks using reinforcement learning with outcome, efficiency, and preference-aware rewards.

- The Orchestrator-8B was released as an open-weight model on Hugging Face. It is designed to coordinate diverse tools such as web search, code execution, retrieval, and specialized LLMs through a unified schema.

- In recent Humanity testing, Orchestrator-8B reached 37.1 percent accuracy, outperforming GPT-5 by 35.1 percent, while being about 2.5 times more efficient, and in τ² Bench and FRAMES, outperforming GPT-5 while using nearly 30 percent of the cost.

- The framework shows that naively claiming the LLM as its own router leads to a self-reinforcement bias in which it overuses itself or a small set of powerful models, while the trained coordinator learns a more balanced and cost-conscious routing policy across multiple tools.

Editorial notes

NVIDIA’s ToolOrchestra is a practical step toward composite AI systems where the 8B orchestration model, Orchestrator-8B, learns an explicit routing policy around tools and LLMs rather than relying on a single parametric model. It shows clear gains in recent Humanity testing, framerates and τ² Bench at about 30 percent of the cost and about 2.5 times better efficiency than GPT-5-based baselines, making it directly relevant to teams that care about accuracy, response time, and budget. This launch makes coordination policy an ideal first-order target in artificial intelligence systems.

verify Sheet, repo, project page and Typical weights. Feel free to check out our website GitHub page for tutorials, codes, and notebooks. Also, feel free to follow us on twitter Don’t forget to join us 100k+ mil SubReddit And subscribe to Our newsletter. I am waiting! Are you on telegram? Now you can join us on Telegram too.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of AI for social good. His most recent endeavor is the launch of the AI media platform, Marktechpost, which features in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand by a broad audience. The platform has more than 2 million views per month, which shows its popularity among the masses.

🙌 FOLLOW MARKTECHPOST: Add us as a favorite source on Google.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-11-29 04:18:00