This AI Paper Introduces Effective State-Size (ESS): A Metric to Quantify Memory Utilization in Sequence Models for Performance Optimization

In machine learning, sequence models are designed to process data with temporal structure, such as language, time series, or signals. These models track dependencies across time steps, which lets them produce coherent outputs by learning from the progression of their inputs. Neural architectures such as recurrent neural networks and attention mechanisms manage these temporal relationships through internal states. A model's ability to remember previous inputs and relate them to the current task depends on how well it uses its memory mechanisms, and that use largely determines how effective the model is on real-world tasks involving sequential data.

One of the persistent challenges in studying sequence models is determining how memory is actually used during computation. The size of a model's memory, often measured as state size or cache size, is easy to quantify, but it does not reveal whether that memory is being used effectively. Two models may have similar memory capacities yet apply that capacity in very different ways during learning. Because of this discrepancy, existing evaluations fail to capture important nuances of model behavior, which leads to inefficiencies in design and optimization. A more refined metric is needed, one that observes memory utilization rather than memory size alone.

Previous approaches to understanding memory use in sequence models have relied on surface-level indicators. Visualizations of operators, such as attention maps, and basic metrics, such as model width and cache capacity, provide some insight. However, these methods are limited because they often apply only to narrow classes of models or fail to account for important architectural features such as causal masking. Techniques such as spectral analysis are also hindered by assumptions that do not hold for all models, especially those with dynamic or input-varying structures. As a result, they fall short of guiding how models can be optimized or compressed without degrading performance.

Researchers from Liquid AI, The University of Tokyo, RIKEN, and Stanford University introduced the Effective State-Size (ESS) metric to measure how much of a model's memory is actually being used. ESS is built on principles from control theory and signal processing, and it targets a general class of models that includes both input-invariant and input-varying linear operators. This class covers a range of structures such as attention variants, convolutions, and recurrence mechanisms. ESS works by analyzing the rank of submatrices within the operator, focusing specifically on how past inputs contribute to current outputs, which provides a measurable way to assess memory utilization.
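
To make the rank idea concrete, here is a minimal NumPy sketch. It is not the authors' implementation. It assumes a toy single-channel linear recurrence, materializes the causal operator `T` (so that `y = T @ u`), and takes the block `T[i:, :i]` as the submatrix linking inputs before position `i` to outputs from position `i` onward. The function names, the toy system, and the block convention are illustrative assumptions.

```python
import numpy as np

def recurrence_operator(A, B, C, seq_len):
    """Materialize the strictly causal operator T (y = T @ u) for the toy
    recurrence x[t+1] = A x[t] + B u[t], y[t] = C x[t], with x[0] = 0."""
    T = np.zeros((seq_len, seq_len))
    for i in range(seq_len):
        for j in range(i):
            # Contribution of input j to output i, for j < i.
            T[i, j] = (C @ np.linalg.matrix_power(A, i - j - 1) @ B).item()
    return T

def past_to_future_block(T, i):
    """Rows: outputs at positions >= i. Columns: inputs at positions < i."""
    return T[i:, :i]

# Hypothetical 2-dimensional state with distinct decay rates.
A = np.diag([0.9, 0.5])
B = np.ones((2, 1))
C = np.ones((1, 2))
seq_len = 8

T = recurrence_operator(A, B, C, seq_len)

# Rank of each past-to-future block; an explicit tolerance avoids
# counting floating-point noise as extra rank.
ranks = [
    int(np.linalg.matrix_rank(past_to_future_block(T, i), tol=1e-10))
    for i in range(1, seq_len)
]
print(ranks)
```

In this toy case the rank never exceeds the state dimension of 2, and it is smaller near the sequence boundaries where the block has only one row or one column. That is the sense in which the rank of such a block tracks how much state is needed to carry past inputs forward.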

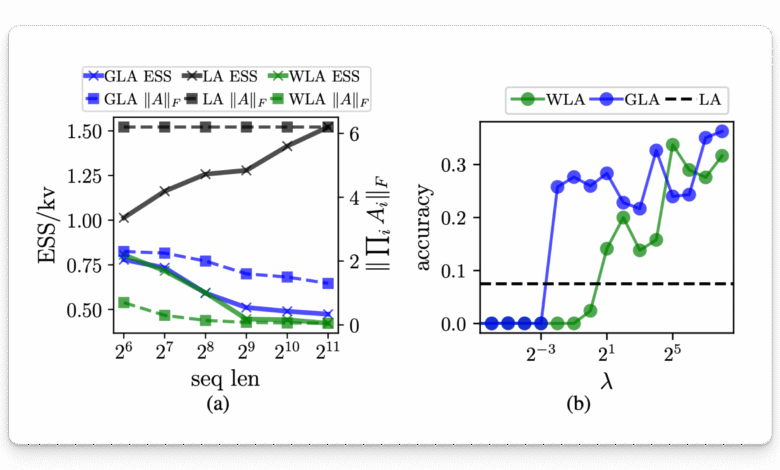

The computation of ESS is based on analyzing the rank of the operator submatrices that link earlier input segments to later outputs. Two variants were developed: tolerance-ESS, which applies a user-defined threshold to the singular values, and entropy-ESS, which uses normalized spectral entropy for a more adaptive view. Both variants are designed to handle practical computational issues and to scale across multi-layer models. ESS can be computed per channel and per sequence index, then aggregated as an average or total ESS for a comprehensive analysis. The researchers emphasize that ESS is a lower bound on the memory required and that it can reflect dynamic patterns in how a model learns.
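
The two variants can be sketched in a few lines of NumPy. This is a hedged illustration rather than the paper's code: `tolerance_ess` counts singular values above a threshold, and `entropy_ess` is assumed here to be the exponential of the entropy of the sum-normalized singular values (a common "effective rank" definition). The function names, the default tolerance, and the block convention `T[i:, :i]` are assumptions made for this example.

```python
import numpy as np

def tolerance_ess(block, tol=1e-6):
    """Count singular values of the block that exceed a user-chosen tolerance."""
    s = np.linalg.svd(block, compute_uv=False)
    return int(np.sum(s > tol))

def entropy_ess(block, eps=1e-12):
    """Exponential of the entropy of the normalized singular-value spectrum.
    Assumed definition for illustration; returns 0.0 for an all-zero block."""
    s = np.linalg.svd(block, compute_uv=False)
    total = s.sum()
    if total <= eps:
        return 0.0
    p = s / total
    p = p[p > 0]  # skip exact zeros so log() stays finite
    return float(np.exp(-np.sum(p * np.log(p))))

def ess_per_index(T, ess_fn):
    """Apply an ESS variant at every interior sequence index of a causal operator."""
    seq_len = T.shape[0]
    return np.array([ess_fn(T[i:, :i]) for i in range(1, seq_len)], dtype=float)

# Toy operator: a causal cumulative sum, whose past-to-future blocks are rank one.
T = np.tril(np.ones((4, 4)))
per_index = ess_per_index(T, tolerance_ess)
average_ess = per_index.mean()
total_ess = per_index.sum()
print(per_index, average_ess, total_ess)
```

The tolerance variant returns an integer and depends on the chosen threshold, while the entropy variant returns a continuous value that shrinks smoothly as the spectrum concentrates on fewer singular values. For a multi-channel model, the same per-index computation would be repeated per channel before averaging or summing.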

Empirical evaluation confirmed that ESS correlates closely with performance across a variety of tasks. On multi-query associative recall (MQAR) tasks, ESS normalized by the number of key-value pairs (ESS/kv) correlated more strongly with model accuracy than the theoretical state size normalized the same way (TSS/kv). For example, models with high ESS consistently achieved higher accuracy. The study also revealed two failure modes in how models use memory: state saturation, where ESS is nearly equal to TSS, and state collapse, where ESS stays low and the available state goes underused. ESS was also applied successfully to model compression via distillation. Higher ESS in teacher models led to greater loss when compressing to smaller models, which indicates that ESS is useful for predicting compressibility. ESS was also used to track how end-of-sequence tokens modulate memory use in large language models such as Falcon Mamba 7B.
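
The comparison between ESS and TSS can be expressed as a simple utilization ratio. The sketch below is only an illustration of the two failure modes described above: the function names and, in particular, the cutoff values `saturation_at` and `collapse_at` are hypothetical and do not come from the paper.

```python
def normalize_by_kv(state_size, num_kv_pairs):
    """Normalize a state-size value (ESS or TSS) by the number of key-value pairs."""
    if num_kv_pairs <= 0:
        raise ValueError("num_kv_pairs must be positive")
    return state_size / num_kv_pairs

def memory_utilization(ess, tss):
    """Fraction of the theoretical state size that is effectively used."""
    if tss <= 0:
        raise ValueError("tss must be positive")
    return ess / tss

def diagnose_state(ess, tss, saturation_at=0.95, collapse_at=0.10):
    """Label the regime with illustrative, hypothetical cutoffs:
    'saturation' when ESS is close to TSS, 'collapse' when ESS is far below it."""
    ratio = memory_utilization(ess, tss)
    if ratio >= saturation_at:
        return "saturation"
    if ratio <= collapse_at:
        return "collapse"
    return "in-between"

# Example with made-up numbers: a model whose theoretical state size is 16.
print(diagnose_state(ess=15.5, tss=16))
print(diagnose_state(ess=1.0, tss=16))
print(normalize_by_kv(8.0, 4))
```

In practice, suitable cutoffs would depend on the model and task, so a ratio like this is better read as a rough diagnostic than as a fixed rule.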

The study outlines a precise and effective approach to closing the gap between theoretical memory size and actual memory use in sequence models. With ESS, the researchers offer a robust metric that brings clarity to model evaluation and optimization. It paves the way for designing more efficient sequence models and for using ESS to inform regularization, initialization, and model compression strategies grounded in clear, quantifiable memory behavior.

Check out the paper. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter, and don't forget to join our 90k+ ML SubReddit.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always looking into applications in fields such as biomaterials and biomedical science. With a strong background in materials science, he explores new advancements and looks for opportunities to contribute.


2025-05-11 18:29:00