Thought Anchors: A Machine Learning Framework for Identifying and Measuring Key Reasoning Steps in Large Language Models with Precision

Understanding the limits of current interpretability tools in LLMs

Large language models such as DeepSeek and GPT rely on billions of parameters working together to handle complex reasoning tasks. Despite their capabilities, one major challenge is understanding which parts of their reasoning have the greatest influence on the final output. This is especially important for ensuring the reliability of AI in critical areas such as healthcare or finance. Current interpretability tools, such as token-level importance or gradient-based methods, offer only a limited view. These approaches often focus on isolated components and fail to capture how different reasoning steps connect and influence decisions, leaving key aspects of a model’s logic hidden.

Thought Anchors: Sentence-level interpretability of reasoning paths

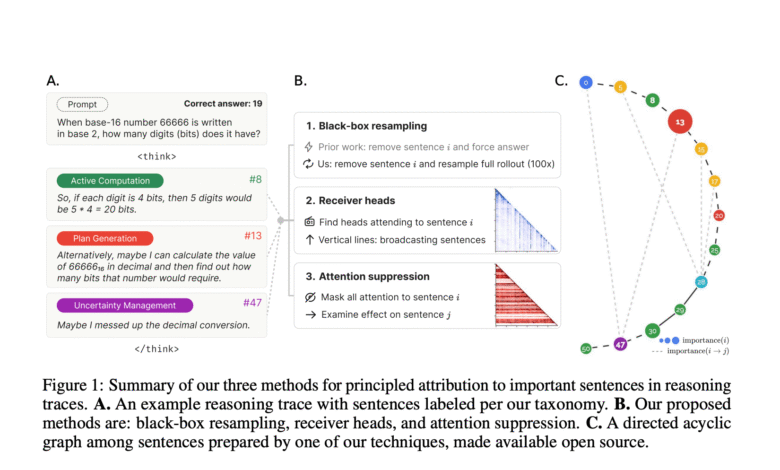

Researchers from Duke University and Aiphabet introduced a new interpretability framework called “Thought Anchors.” This methodology specifically examines sentence-level reasoning contributions within large language models. Its methods target different aspects of reasoning, providing comprehensive coverage of model interpretability.

Evaluation methodology: DeepSeek and the MATH dataset

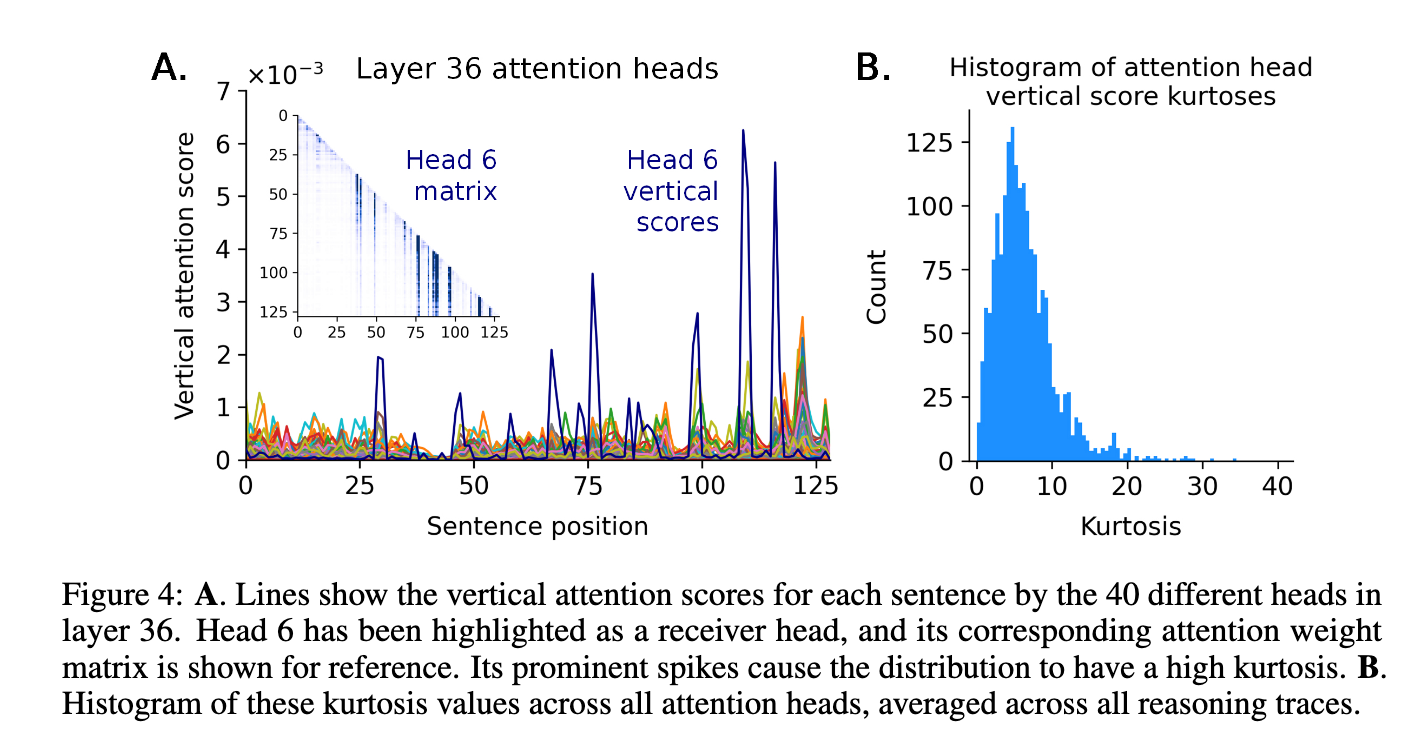

The research team detailed three interpretability methods in their evaluation. The first, black-box measurement, uses counterfactual analysis: it systematically removes sentences from reasoning traces and measures the effect on the outcome. For example, the study produced sentence-level accuracy assessments by running analyses over a large evaluation set of 2,000 reasoning tasks, each producing 19 responses. They used the DeepSeek Q&A model, which has about 67 billion parameters, and tested it on a specially designed MATH dataset of about 12,500 challenging mathematical problems. Second, receiver head analysis measures attention patterns between pairs of sentences, revealing how earlier reasoning steps influence the processing of later information. The study found significant directional attention, indicating that certain anchor sentences strongly guide subsequent reasoning steps. Third, the causal attribution method assesses how suppressing the influence of specific reasoning steps changes subsequent outputs, clarifying the precise contribution of internal reasoning elements. Combined, these techniques produced precise analytical outputs and revealed explicit relationships between reasoning components.
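The first approach, removing a sentence from a reasoning trace and measuring the change in accuracy, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `sentence_importance`, `answer_is_correct`, and `toy_grader` are hypothetical names, and the grader is a deterministic stand-in for "let the model continue from this trace and check its final answer." The default of 19 samples only echoes the figure quoted above.

```python
from typing import Callable, List, Sequence


def sentence_importance(
    sentences: Sequence[str],
    answer_is_correct: Callable[[List[str]], bool],
    n_samples: int = 19,
) -> List[float]:
    """For each sentence, return baseline accuracy minus accuracy without it.

    `answer_is_correct` is a hypothetical callback: given a (possibly
    ablated) trace, it samples one model continuation and grades it.
    """

    def accuracy(trace: Sequence[str]) -> float:
        # Estimate accuracy by grading n_samples continuations of the trace.
        hits = sum(answer_is_correct(list(trace)) for _ in range(n_samples))
        return hits / n_samples

    baseline = accuracy(sentences)
    scores = []
    for i in range(len(sentences)):
        # Counterfactual trace: everything except sentence i.
        ablated = [s for j, s in enumerate(sentences) if j != i]
        scores.append(baseline - accuracy(ablated))
    return scores


def toy_grader(trace: List[str]) -> bool:
    # Assumption for illustration: the answer is right only if the
    # key step "x = 4" is still present in the trace.
    return "x = 4" in trace


trace = [
    "We need to solve 2x = 8.",
    "Divide both sides by 2.",
    "x = 4",
    "Check: 2 * 4 = 8.",
]
scores = sentence_importance(trace, toy_grader)
anchor_index = max(range(len(scores)), key=scores.__getitem__)
print(scores, "-> most influential sentence:", trace[anchor_index])
```

With a real model, the grader would be stochastic, so the scores would be noisy estimates and more samples per trace would tighten them.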

Quantitative gains: High accuracy and clear causal links

By applying Thought Anchors, the research group demonstrated notable improvements in interpretability. Black-box analysis achieved robust performance metrics: for each reasoning step in the evaluation tasks, the team observed clear variations in its impact on model accuracy. Specifically, correct reasoning paths consistently reached accuracy levels above 90%, significantly outperforming incorrect paths. Receiver head analysis provided evidence of strong directional relationships, measured through attention distributions across all layers and attention heads within DeepSeek. These directional attention patterns consistently guided subsequent reasoning, with receiver heads showing correlation scores averaging about 0.59 across layers, which supports the method’s ability to pinpoint influential reasoning steps. Moreover, the causal attribution experiments explicitly quantified how reasoning steps propagate their influence forward. The analysis showed that the causal influence exerted by early reasoning sentences produced observable effects on later sentences, with a mean causal influence metric of about 0.34, further supporting the precision of Thought Anchors.
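The suppression idea behind the causal analysis can be illustrated on a toy model. The sketch below is an assumption-laden stand-in, not the study's procedure: each sentence is reduced to a single number, a sentence's "output" is an attention-weighted mean of the sentences up to and including itself, and the effect of suppressing a source sentence is measured as the absolute change in later outputs. The article does not specify the metric behind the 0.34 figure, so the absolute difference used here is purely illustrative, and `attend` and `causal_effect` are hypothetical helpers.

```python
from typing import List


def attend(weights: List[List[float]], values: List[float],
           suppressed: int = -1) -> List[float]:
    """Toy causal attention over sentences.

    Output t is the weighted mean of values[0..t] using weights[t].
    If `suppressed` is a sentence index, every later sentence has its
    attention to that sentence zeroed and the remaining weights
    renormalised. A sentence always keeps attention to itself.
    """
    outputs = []
    for t, row in enumerate(weights):
        w = [0.0 if (s == suppressed and s < t) else row[s]
             for s in range(t + 1)]
        total = sum(w)
        outputs.append(sum(wi * values[s] for s, wi in enumerate(w)) / total)
    return outputs


def causal_effect(weights: List[List[float]], values: List[float],
                  source: int) -> List[float]:
    """Absolute change in each later sentence's output when `source`
    is suppressed (one entry per sentence after `source`)."""
    base = attend(weights, values)
    masked = attend(weights, values, suppressed=source)
    return [abs(b - m) for b, m in zip(base[source + 1:], masked[source + 1:])]


# Hypothetical attention weights for three sentences (each row sums to 1).
W = [
    [1.0],
    [0.5, 0.5],
    [0.8, 0.1, 0.1],
]
V = [4.0, 0.0, 2.0]  # toy scalar "content" of each sentence

effect_of_first = causal_effect(W, V, source=0)
effect_of_second = causal_effect(W, V, source=1)
print("suppress sentence 0:", effect_of_first)
print("suppress sentence 1:", effect_of_second)
```

In this toy setup the first sentence changes the last sentence's output far more than the second sentence does, which is the kind of asymmetry an anchor sentence would show.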

The research also addressed another critical dimension: attention aggregation. Specifically, the study analyzed 250 distinct attention heads within the DeepSeek model across multiple reasoning tasks. Among these heads, some receiver heads consistently directed significant attention toward particular reasoning steps, especially during mathematically intensive queries. In contrast, other attention heads showed more distributed or ambiguous attention patterns. Explicitly categorizing receiver heads by their interpretability gave finer-grained insight into the internal decision-making structure of LLMs, which may guide future model architecture improvements.
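One way to picture this kind of analysis is to pool a head's token-level attention into a sentence-by-sentence matrix and then ask how concentrated the attention received by earlier sentences is. The Python sketch below does that with hand-made numbers. It is an illustration only: the function names, the toy attention matrices, and the peak-to-mean concentration score are assumptions chosen for simplicity, and the article does not say which statistic the study used to classify heads.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def sentence_attention(token_attn: Matrix, spans: List[Tuple[int, int]]) -> Matrix:
    """Average token-to-token attention into a sentence-to-sentence matrix.

    spans[k] = (start, end) token indices of sentence k, end exclusive.
    Entry [i][j] is the mean attention from tokens of sentence i
    to tokens of sentence j.
    """
    n = len(spans)
    out = [[0.0] * n for _ in range(n)]
    for i, (qs, qe) in enumerate(spans):
        for j, (ks, ke) in enumerate(spans):
            cells = [token_attn[q][k] for q in range(qs, qe) for k in range(ks, ke)]
            out[i][j] = sum(cells) / len(cells)
    return out


def received_attention(sent_attn: Matrix) -> List[float]:
    """Mean attention each sentence receives from strictly later sentences.

    The last sentence has no later sentences, so it is omitted.
    """
    n = len(sent_attn)
    received = []
    for j in range(n - 1):
        later = [sent_attn[i][j] for i in range(j + 1, n)]
        received.append(sum(later) / len(later))
    return received


def concentration(received: List[float]) -> float:
    """Peak-to-mean ratio: 1.0 means evenly spread, larger means the head
    focuses on a few sentences (an illustrative score, not the paper's)."""
    mean = sum(received) / len(received)
    return max(received) / mean


# Four tokens forming three sentences: tokens 0-1, token 2, token 3.
spans = [(0, 2), (2, 3), (3, 4)]

# Hypothetical causal attention maps for two heads (rows sum to 1).
focused_head = [
    [1.0, 0.0, 0.0, 0.0],
    [0.5, 0.5, 0.0, 0.0],
    [0.4, 0.4, 0.2, 0.0],
    [0.4, 0.4, 0.1, 0.1],
]
diffuse_head = [
    [1.0, 0.0, 0.0, 0.0],
    [0.5, 0.5, 0.0, 0.0],
    [0.3, 0.3, 0.4, 0.0],
    [0.15, 0.15, 0.3, 0.4],
]

focused_received = received_attention(sentence_attention(focused_head, spans))
diffuse_received = received_attention(sentence_attention(diffuse_head, spans))
print("focused head:", focused_received, concentration(focused_received))
print("diffuse head:", diffuse_received, concentration(diffuse_received))
```

Ranking heads by a score like this would separate heads that keep returning to one sentence from heads whose attention is spread out. A real analysis would run over many traces and all layers.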

Key takeaways: Precise reasoning analysis and practical benefits

- Thought Anchors enhance interpretability by focusing specifically on internal reasoning processes at the sentence level, substantially outperforming conventional activation-based methods.

- By combining black-box measurement, receiver head analysis, and causal attribution, Thought Anchors deliver comprehensive and precise insights into model behavior and reasoning flows.

- Applying Thought Anchors to the DeepSeek Q&A model (with 67 billion parameters) yielded compelling empirical evidence, characterized by a strong correlation (mean attention score of 0.59) and causal influence (mean metric of 0.34).

- The open-source visualization tool offers significant usability benefits, fostering collaborative exploration and improvement of interpretability methods.

- The study’s extensive attention head analysis (250 heads) refined the understanding of how attention mechanisms contribute to reasoning, offering potential avenues for improving future model architectures.

- The demonstrated capabilities of Thought Anchors establish a strong foundation for using advanced language models safely in sensitive, high-stakes domains such as healthcare, finance, and critical infrastructure.

- The framework suggests opportunities for future research into advanced interpretability methods, with the aim of improving the transparency and robustness of AI.

Check out the Paper and Interaction. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to real-world challenges. With a keen interest in solving practical problems, Sana brings a fresh perspective to the intersection of AI and real-life solutions.


2025-07-04 00:48:00