Department of Transportation scraps memos pushing ‘equity initiatives’

The Department of Transportation (DOT) scrapped two memos from the Biden administration that the agency said misaligned priorities to serve a “social justice and environmental agenda.”

The two memorandums, issued during the Biden administration, listed objectives such as “reconnecting communities and reflecting the inclusion of disadvantaged and under-represented groups in the planning, project selection, and design process” and “accommodating new and emerging technologies like electric vehicle charging stations,” according to DOT.

“Under President Trump’s leadership, the Department of Transportation is getting back to basics — building critical infrastructure projects that move people and move commerce safely,” Transportation Secretary Sean Duffy said in a statement. “The previous administration flouted Congress in an attempt to push a radical social and environmental agenda on the American people. This was an act of federal overreach. It stops now.”

Traffic along the Glades Road interchange of the Turnpike in Boca Raton, Florida, on Feb. 25, 2022. (John McCall/South Florida Sun Sentinel/Tribune News Service via Getty Images)

Specifically, the department took issue with the memos’ focus on cutting “greenhouse gas emissions” and promoting “equity initiatives.”

The memos centered on how to best use the billions in funding from the Infrastructure Investment and Jobs Act of 2021 across the country. Neither memo is currently available on the website.

However, this is not the first time the memos have faced scrutiny.

In January 2023, the United States Chamber of Commerce asked Federal Highway Administrator Shailen Bhatt to rescind the “Policy on Using Bipartisan Infrastructure Law Resources to Build a Better America” memo to avoid over-complicating the overall mission of taxpayer-funded infrastructure investments.

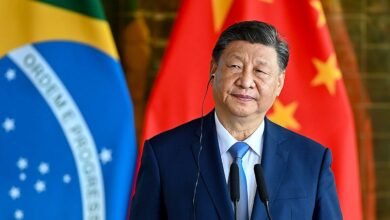

Transportation Secretary Sean Duffy is taking aim at New York’s congestion tolls. (Eduardo Munoz Alvarez/VIEWpress/Samuel Corum/Sipa/Bloomberg via Getty Images)

“We supported the Infrastructure Investment and Jobs Act (IIJA) because it represents the most significant infusion of investment in our infrastructure since the enactment of the Interstate Highway System in the mid-1950s,” the chamber wrote with various other groups, including the American Trucking Associations and the Association of American Railroads.

“It is also a carefully negotiated and balanced package of policy reforms and targeted national investments that will make Americans’ lives better. However, the Dec. 16 memo elicited significant confusion within the transportation community as the guidance intended to serve as an overarching policy framework that prioritizes IIJA resources towards certain projects, which was inconsistent with what was laid out under the legislation President Biden signed into law the month before,” the letter added.

President Donald Trump, right, listens as Transportation Secretary Sean Duffy speaks in the James Brady Press Briefing Room at the White House on Thursday, Jan. 30, 2025 in Washington, D.C. (Alex Brandon/AP Photo)

DOT has taken aim at various liberal policies in the early days of Duffy’s tenure, including ordering a compliance audit of the California High-Speed Rail project and asking for the Manhattan congestion tolls program to end. In Congress, other transportation-related efforts are being scrutinized, including a push to get rid of electric-vehicle efforts.

2025-03-11 16:45:00