Trump says he pulled Stefanik ambassador nomination to protect GOP House majority: ‘cannot take a chance’

Pointing to the Republicans’ slim majority in the House of Representatives, President Donald Trump says he withdrew the nomination of Rep. Elise Stefanik to be U.N. ambassador because he did not “want to take any chances.”

The president made his comments on Friday as he took questions at the White House, one day after announcing in a social media post that he was withdrawing the nomination of Stefanik, a Republican from New York and a Trump ally in the House, over concerns about passing his agenda through the chamber.

“I said, ‘Elise, would you do me a favor? We cannot take a chance. We have a slim margin,’” Trump told reporters.

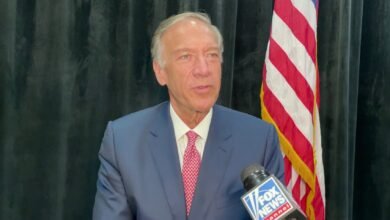

Rep. Elise Stefanik with then-candidate Donald Trump in New Hampshire during the 2024 Republican primary campaign. (Getty Images)

Trump’s move comes amid concerns at the White House and among Republicans on Capitol Hill over next week’s special congressional elections in Florida.

Voters in two Florida congressional districts will head to the polls on Tuesday, where Republicans aim to keep control of both solidly red seats and give themselves a little more breathing room in the House.

The elections are in Florida’s 1st and 6th Congressional Districts, which Trump carried by 37 and 30 points in last year’s presidential election.

But the Democratic candidates have vastly outraised the Republican candidates, and polls in recent days indicated that the race in the 6th District was within the margin of error.

The Republican Party currently holds a 218-213 majority in the House of Representatives, with two vacant seats where Republicans stepped down and two where Democratic lawmakers died in March.

President Donald Trump speaks at the White House in Washington on Thursday, March 27, 2025. (Baleso via AP)

“When it comes to Florida, you have two races, and they seem to be good,” Trump said.

But pointing to the Democratic candidates’ huge fundraising advantage over their Republican rivals, Trump voiced concern, saying, “You never know what happens in such a situation.”

Florida Chief Financial Officer Jimmy Patronis is favored over Democrat Gay Valimont in a multi-candidate field in the race to fill the vacant seat in the 1st Congressional District, which is located in the far northwestern corner of Florida, in the Panhandle region.

Republican Matt Gaetz, who won re-election in the district in November, resigned from office weeks later after Trump chose him as his nominee for attorney general in his second administration.

Gaetz later withdrew from Cabinet consideration amid controversy.

But it is the race in the 6th Congressional District, which sits on Florida’s Atlantic coast from Daytona Beach to just south of St. Augustine and inland to the outskirts of Ocala, that has some Republicans concerned.

The race is to succeed Republican Michael Waltz, who resigned from the seat on Jan. 20 after Trump named him national security adviser.

Republican state Sen. Randy Fine faces teacher Josh Weil, a Democrat, in a multi-candidate field.

Florida state Sen. Randy Fine, a Republican from southern Brevard County, who is running in Tuesday’s special congressional election in the state’s 6th Congressional District. (AP)

Weil has drawn plenty of national attention in recent weeks by outpacing Fine in the crucial campaign cash battle by a margin of roughly ten to one.

The cash disparity in the 6th District race spurred Republican-aligned outside groups to make last-minute contributions in support of Fine in the closing days of the campaign, with super PACs highlighting Trump’s support for Fine.

“I would have preferred if our candidate had raised money at a faster rate and was on TV faster,” Rep. Richard Hudson of North Carolina, chair of the National Republican Congressional Committee, told reporters earlier this week.

But Hudson added that Fine is “doing what he needs to do. He is on TV now.”

“We will win the seat. I am not worried at all.”

Trump, referring to Fine, acknowledged on Friday that “our candidate does not have this kind of money.”

Fine has drawn criticism from some fellow Republicans. Steve Bannon, a former Trump political adviser and conservative host, warned that Fine “does not win.”

Florida Gov. Ron DeSantis said the Republican Party would underperform in the race, arguing that “it is a reflection of the candidate who runs in this race.”

But it is worth noting the contentious history between DeSantis and Fine, who was the first Republican in Florida to switch his endorsement from DeSantis to Trump during the 2024 Republican presidential nomination battle.

In the 1st District, where Republicans are less concerned about losing the seat, Valimont topped Patronis in fundraising by a five-to-one margin.

Florida Chief Financial Officer Jimmy Patronis, the Republican candidate in Tuesday’s special congressional election in the 1st Congressional District. (Tiffany Tompkins/Bradenton Herald/Tribune News Service via Getty Images)

Republican Rep. Byron Donalds of Florida, speaking with Fox News Digital at his 2026 campaign event in Bonita Springs, predicted “it will be difficult” for the House majority if the party lost an election on Tuesday.

But he added: “I am not looking forward to that. I think we will win both seats on Tuesday. I think Republican voters in those areas will turn out because at the end of the day, the choice is clear.”

While races in two Republican-dominated districts are far from ideal for Democrats trying to flip seats, the elections are the first opportunity for voters and donors to try to make a difference since Trump returned to power in the White House.

Democrats say the surge in fundraising for their candidates is a sign that their party is energized amid voter frustration over Trump’s controversial moves in the opening weeks of his term.

“The American people are not buying what the Republicans are selling,” House Minority Leader Hakeem Jeffries told reporters earlier this week.

Jeffries and other Democrats are not predicting victory.

But Jeffries, the top Democrat in the House, emphasized that “these districts are very Republican, and there would be no reason to believe the races will be close, but what I can say, almost for certain, is that the Democratic candidate in each of these special elections in Florida will overperform.”

House Republican Conference Chair Elise Stefanik, R-N.Y. (Andrew Harnik/Getty Images)

Stefanik represents New York’s 21st Congressional District, a large, mostly rural district in the far north of the state that includes most of the Adirondack Mountains and the Thousand Islands. She cruised to re-election last November by 24 points.

“We don’t want to take any chances. We don’t want to experiment,” Trump said, pointing to what would have been a special election later this year to fill Stefanik’s seat had she resigned upon being confirmed as U.N. ambassador. “She polls as I do. I won her district by many points. She also does very well.”

“She is very popular. She will win. Someone else would probably win too, because we did well there. We did well there. But the word ‘probably’ is no good,” Trump added, reiterating that he does not want to take any chances.

Trump said he asked Stefanik, “Do you mind staying in Congress? We don’t want to take any chances.”

“I really appreciate her doing this,” Trump added. “She is doing me a great favor … because she was ready to go to the United Nations.”

Stefanik had already stepped down from, and been replaced in, her House Republican leadership role as chair of the House Republican Conference.

“I spoke to Mike Johnson, they will put her in a great leadership position,” Trump said.

2025-03-28 19:45:00