Unbabel Introduces TOWER+: A Unified Framework for High-Fidelity Translation and Instruction-Following in Multilingual LLMs

Large language models have driven progress in machine translation, drawing on massive training corpora to translate dozens of languages and dialects while capturing subtle linguistic nuances. However, fine-tuning these models for translation accuracy often weakens their instruction-following and conversational skills, while general-purpose versions struggle to meet professional fidelity standards. Balancing precise, culturally aware translation with the ability to handle code generation, problem solving, and user-specific formatting remains difficult. Models must also preserve terminological consistency and adhere to formatting instructions across varied audiences. Stakeholders need systems that can dynamically adapt to domain requirements and user preferences without sacrificing fluency. Benchmarks such as WMT24++, which covers 55 language variants, and IFEval, with its 541 instruction-focused prompts, highlight the gap between specialized translation quality and general-purpose versatility, a critical bottleneck for enterprise deployment.

Current Approaches to Tailoring Language Models for Translation Accuracy

Several approaches have been explored for tailoring language models to translation. Pre-trained large language models have been fine-tuned on parallel corpora to improve the adequacy and fluency of translated text. Meanwhile, continued pretraining on a mix of monolingual and parallel data enhances multilingual fluency. Some research teams have supplemented training with reinforcement learning from human feedback to align outputs with quality preferences. Proprietary systems such as GPT-4o and Claude 3.7 have demonstrated leading translation quality, and open-weight models including Tower v2 and Gemma 2 have matched or surpassed closed-source models in certain language scenarios. These strategies reflect ongoing efforts to address the dual demands of translation accuracy and broad language capabilities.

Introducing TOWER+: Unified Training for Translation and General Language Tasks

Researchers from Unbabel, Instituto de Telecomunicações, Instituto Superior Técnico, Universidade de Lisboa (Lisbon ELLIS Unit), and MICS, CentraleSupélec have introduced TOWER+, a suite of models. The research team designed variants at multiple parameter scales, 2 billion, 9 billion, and 72 billion, to explore the trade-off between translation specialization and general-purpose utility. By implementing a unified training pipeline, the researchers aim to place TOWER+ models on the Pareto frontier, achieving both high translation performance and strong general capabilities without sacrificing one for the other. The approach balances the specific demands of machine translation with the flexibility required by conversational and instructional tasks, supporting a range of application scenarios.
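To make the Pareto idea concrete, here is a minimal sketch of how a frontier over two "higher is better" axes can be computed. The model names and scores are made up for illustration and are not results from the paper; `ModelScore`, `dominates`, and `pareto_frontier` are hypothetical helper names.

```python
from typing import NamedTuple


class ModelScore(NamedTuple):
    name: str
    translation: float  # higher is better (e.g. a translation-quality metric)
    general: float      # higher is better (e.g. a chat win rate)


def dominates(a: ModelScore, b: ModelScore) -> bool:
    """True if `a` is at least as good as `b` on both axes and strictly better on one."""
    return (
        a.translation >= b.translation
        and a.general >= b.general
        and (a.translation > b.translation or a.general > b.general)
    )


def pareto_frontier(models: list[ModelScore]) -> list[ModelScore]:
    """Keep every model that no other model dominates."""
    return [m for m in models if not any(dominates(o, m) for o in models)]


# Made-up scores, for illustration only; they are NOT results from the paper.
candidates = [
    ModelScore("translation-specialist", 90.0, 10.0),
    ModelScore("general-chat", 80.0, 60.0),
    ModelScore("balanced", 88.0, 55.0),
    ModelScore("weak-baseline", 78.0, 40.0),
]

frontier_names = [m.name for m in pareto_frontier(candidates)]
print(frontier_names)
```

In this toy setup only `weak-baseline` drops out, because `general-chat` is at least as good on both axes. A model "on the frontier" in this sense is one that cannot be beaten on translation and general ability at the same time.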

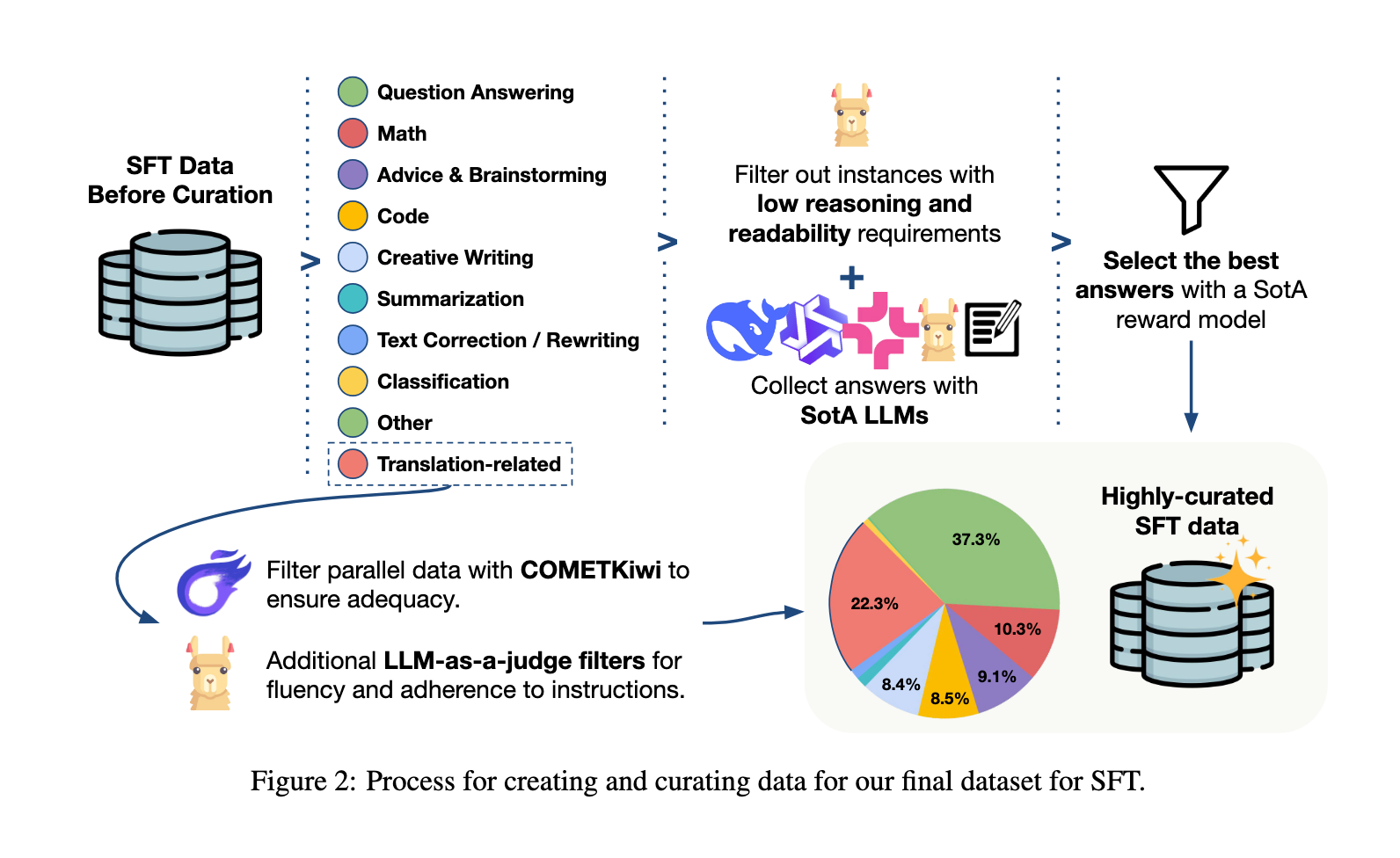

TOWER+ Training Pipeline: Continued Pretraining, Supervised Fine-Tuning, Preference Optimization, and RL

The training pipeline begins with continued pretraining on carefully curated data that includes monolingual content, filtered parallel sentences formatted as translation instructions, and a small fraction of instruction-like examples. Next, supervised fine-tuning refines the model on a combination of translation tasks and diverse instruction-following scenarios, including code generation, mathematical problem solving, and question answering. A preference optimization stage follows, employing weighted preference optimization and group-relative policy updates trained on off-policy signals and human-edited translation variants. Finally, reinforcement learning with verifiable rewards reinforces precise compliance with transformation guidelines, using regex-based checks and preference annotations to improve the model's ability to follow explicit instructions during translation. This combination of pretraining, supervised alignment, and reward-driven updates yields a robust balance between specialized translation accuracy and versatile language proficiency.
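The regex checks in the final stage can be pictured as a binary reward: an output earns credit only if it satisfies every rule that can be verified mechanically. The sketch below is an assumption about what such a check might look like, not the authors' implementation; the function name `verifiable_reward`, the example instruction, and the patterns are all hypothetical.

```python
import re


def verifiable_reward(
    output: str,
    required_patterns: list[str],
    forbidden_patterns: list[str],
) -> float:
    """Return 1.0 if every required regex matches and no forbidden regex matches, else 0.0."""
    for pattern in required_patterns:
        if re.search(pattern, output) is None:
            return 0.0
    for pattern in forbidden_patterns:
        if re.search(pattern, output) is not None:
            return 0.0
    return 1.0


# Hypothetical instruction: "Translate to Portuguese, keep the product name
# 'TOWER+' untranslated, and write dates as DD/MM/YYYY."
required = [r"TOWER\+", r"\b\d{2}/\d{2}/\d{4}\b"]
forbidden = [r"\b\d{4}-\d{2}-\d{2}\b"]  # ISO-style dates would violate the instruction

good = "O TOWER+ foi lançado em 27/06/2025."
bad = "O Torre+ foi lançado em 2025-06-27."

reward_good = verifiable_reward(good, required, forbidden)
reward_bad = verifiable_reward(bad, required, forbidden)
print(reward_good, reward_bad)
```

A check like this says nothing about translation quality. It only confirms that explicit, mechanically checkable instructions were followed, which is why the article describes it alongside preference-based signals.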

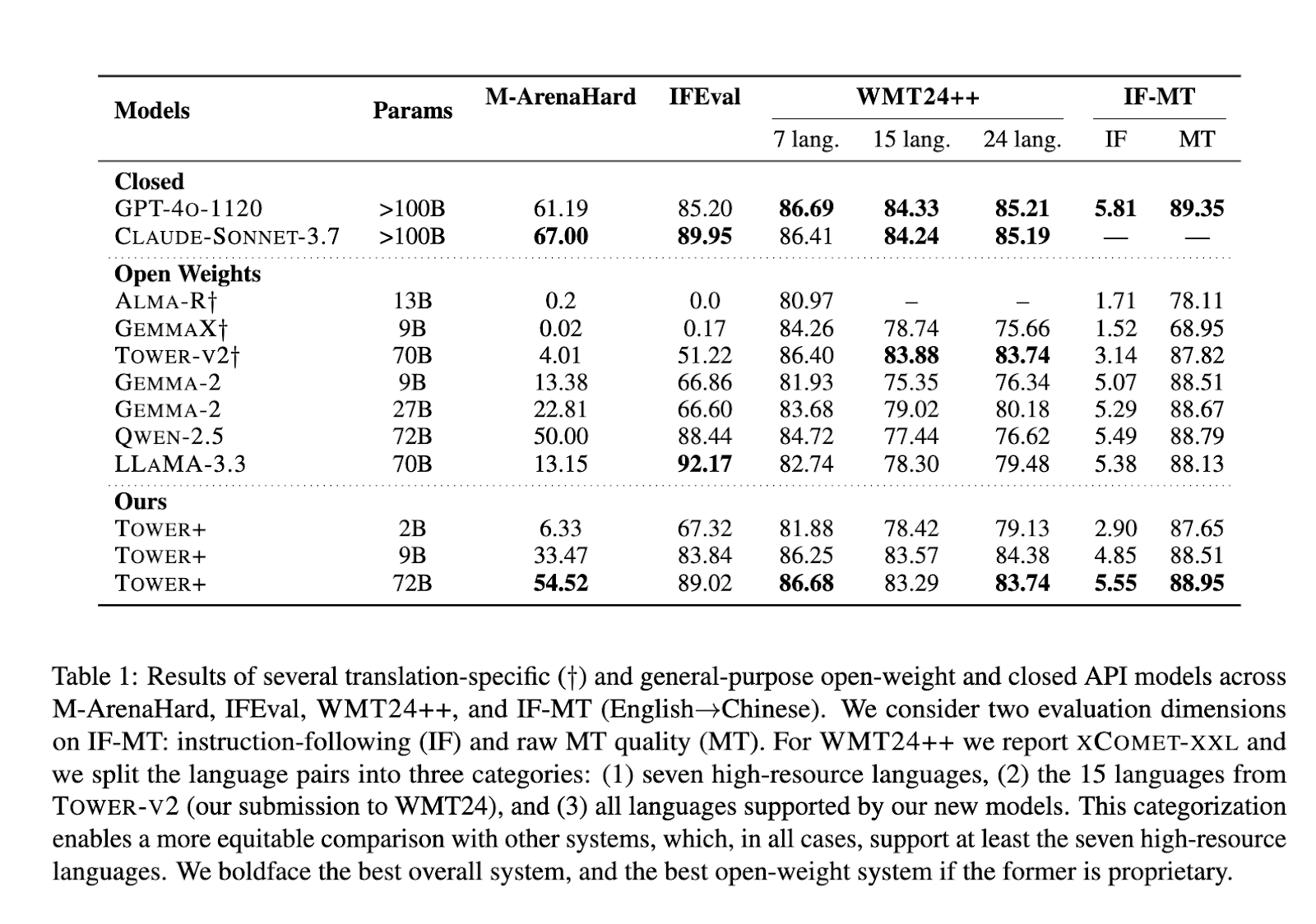

Benchmark Results: TOWER+ on Translation and Instruction Following

The TOWER+ 9B model achieved a 33.47% win rate on multilingual general chat prompts and an XCOMET-XXL score of 84.38 across 24 language pairs, outperforming similarly sized open-weight counterparts. The flagship 72-billion-parameter variant secured a 54.52% win rate on M-ArenaHard, recorded an IFEval instruction-following score of 89.02, and reached an XCOMET-XXL score of 83.29 on the full WMT24++ benchmark. On IF-MT, the combined translation and instruction-following benchmark, it scored 5.55 for instruction adherence and 88.95 for translation fidelity, the best results among open-weight models. These results indicate that the researchers' integrated pipeline bridges the gap between specialized translation performance and broad language capabilities, making it viable for both enterprise and research applications.

Key Technical Highlights of the TOWER+ Models

- TOWER+ models, developed by Unbabel and academic partners, span 2B, 9B, and 72B parameters to explore the performance frontier between translation specialization and general-purpose utility.

- The training pipeline integrates four stages: continued pretraining (66% monolingual, 33% parallel, 1% instruction data), supervised fine-tuning (22.3% translation), weighted preference optimization, and reinforcement learning with verifiable rewards, to preserve chat skills while improving translation accuracy.

- Continued pretraining covers 27 languages and dialects, as well as 47 language pairs, over more than 32 billion tokens, and merges specialized and general checkpoints to maintain balance.

- The 9B variant achieved a 33.47% win rate on M-ArenaHard, 83.84% on IFEval, and an XCOMET-XXL score of 84.38 across 24 language pairs, with IF-MT scores of 4.85 (instruction following) and 88.51 (translation).

- The 72B model recorded 54.52% on M-ArenaHard, 89.02% on IFEval, an XCOMET-XXL score of 83.29, and IF-MT scores of 5.55 (instruction following) and 88.95 (translation), setting a new open-weight standard.

- Even the 2B model matches larger baselines, with 6.33% on M-ArenaHard and 87.65 for IF-MT translation quality.

- Benchmarked against GPT-4o-1120, Claude-Sonnet-3.7, ALMA-R, Gemma-2, and Llama-3.3, the TOWER+ suite consistently matches or outperforms them on both specialized and general tasks.

- The research provides a reproducible recipe for building LLMs that serve translation and conversational needs simultaneously, reducing model proliferation and operational overhead.
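As a rough worked example of the continued-pretraining mixture listed above, the snippet below splits a token budget by the reported 66/33/1 proportions. The total of exactly 32 billion tokens is an assumption used as a round lower bound, since the article only says "more than 32 billion"; `token_budget` is a hypothetical helper.

```python
# Mixture proportions as reported in the article for continued pretraining.
MIXTURE = {"monolingual": 0.66, "parallel": 0.33, "instruction": 0.01}

# Assumption: the article says "more than 32 billion tokens";
# 32e9 is used here only as a round lower bound.
TOTAL_TOKENS = 32_000_000_000


def token_budget(mixture: dict[str, float], total_tokens: int) -> dict[str, int]:
    """Split a total token count across data sources according to mixture weights."""
    if abs(sum(mixture.values()) - 1.0) > 1e-9:
        raise ValueError("mixture weights must sum to 1")
    return {source: round(weight * total_tokens) for source, weight in mixture.items()}


budget = token_budget(MIXTURE, TOTAL_TOKENS)
for source, tokens in budget.items():
    print(f"{source:12s} {tokens / 1e9:6.2f}B tokens")
```

Under these assumptions, roughly 21.12B tokens would be monolingual, 10.56B parallel, and 0.32B instruction-style data, which shows how small the instruction share is at this stage.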

Conclusion: A Pareto-Optimal Framework for Future Translation-Focused LLMs

In conclusion, by unifying large-scale pretraining with specialized alignment stages, TOWER+ demonstrates that translation excellence and conversational versatility can coexist within a single open-weight suite. The models achieve a Pareto-optimal balance across translation fidelity, instruction following, and general chat capabilities, offering a scalable blueprint for future domain-specific LLM development.

All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, which shows its popularity among readers.


2025-06-27 19:36:00