Alibaba AI Unveils Qwen3-Max Preview: A Trillion-Parameter Qwen Model with Super Fast Speed and Quality

Alibaba's Qwen team has unveiled Qwen3-Max-Preview (Instruct), a new flagship large language model with more than one trillion parameters, its largest to date. It is accessible through Qwen Chat, the Alibaba Cloud API, and OpenRouter, and through the AnyCoder tool on Hugging Face.

How does it fit into today's LLM landscape?

This milestone comes at a time when the industry is trending toward smaller, more efficient models. Alibaba's decision to move upward in scale is a deliberate strategic choice, highlighting both its technical capabilities and its commitment to trillion-parameter research.

How large is Qwen3-Max and what are its context limits?

- Parameters: >1 trillion.

- Context window: up to 262,144 tokens (258,048 input, 32,768 output).

- Efficiency feature: includes context caching to speed up multi-turn sessions.
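The stated input and output maxima add up to 290,816, which is more than the 262,144 total, so the two cannot both be maxed out in one request. The sketch below works through that arithmetic under the assumption that input and output share the total window; the article does not confirm how the limits interact, and the function name `max_output_budget` is purely illustrative.

```python
# Limits as stated in the article.
MAX_CONTEXT = 262_144  # total window
MAX_INPUT = 258_048    # maximum input
MAX_OUTPUT = 32_768    # maximum output


def max_output_budget(input_tokens: int) -> int:
    """Return how much output room remains for a given input size.

    Assumption (not confirmed by the source): input and output
    share the same total context window.
    """
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    if input_tokens > MAX_INPUT:
        raise ValueError("input exceeds the stated input limit")
    return min(MAX_OUTPUT, MAX_CONTEXT - input_tokens)


if __name__ == "__main__":
    for n in (10_000, 240_000, MAX_INPUT):
        print(n, "->", max_output_budget(n))
```

Under this assumption, a request that fills the whole input limit leaves only 4,096 units of output room, while shorter prompts keep the full 32,768.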

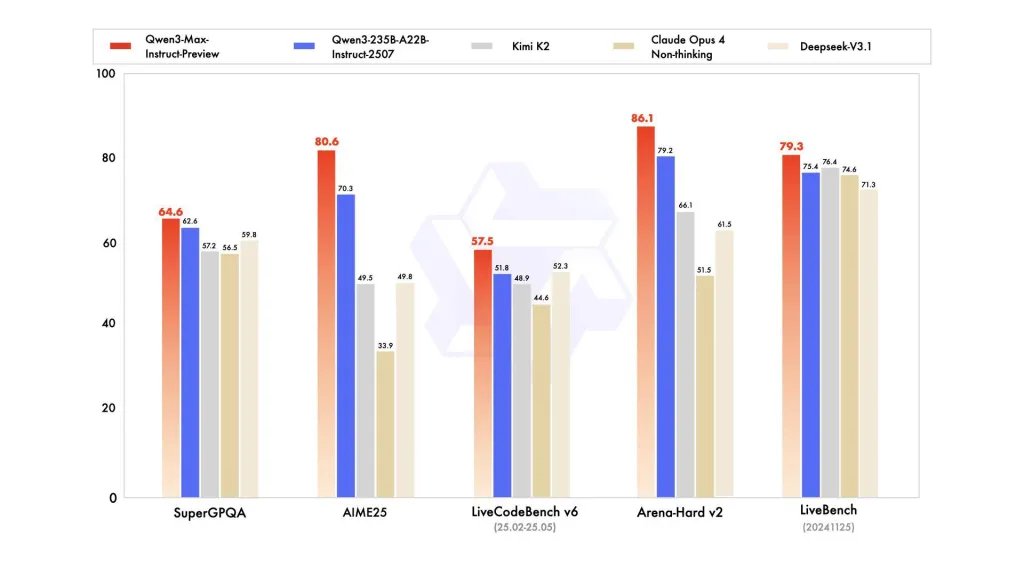

How does Qwen3-Max perform against other models?

Benchmarks show that it outperforms Qwen3-235B-A22B-2507 and competes strongly with Claude Opus 4, Kimi K2, and DeepSeek-V3.1 across SuperGPQA, AIME25, LiveCodeBench v6, Arena-Hard v2, and LiveBench.

What is the pricing structure for usage?

Alibaba Cloud applies tiered, token-based pricing:

- 0–32K tokens: $0.861/million input tokens, $3.441/million output tokens

- 32K–128K tokens: $1.434/million input tokens, $5.735/million output tokens

- 128K–252K tokens: $2.151/million input tokens, $8.602/million output tokens

The model is cost-efficient for smaller tasks, but the price rises sharply for long-context workloads.
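To make the tiers concrete, here is a minimal cost estimator built from the rates listed above. It rests on two assumptions the article does not confirm: that the tier is selected by the request's input size and every unit in the request is billed at that tier's rate, and that "K" means 1,024 (which fits, since 252 × 1,024 = 258,048, the stated input limit). The function name `estimate_cost` is hypothetical.

```python
K = 1024  # assumption: "K" = 1,024 (252 * 1024 = 258,048, the stated input limit)

# (tier upper bound on input size, USD per million input, USD per million output)
TIERS = [
    (32 * K, 0.861, 3.441),
    (128 * K, 1.434, 5.735),
    (252 * K, 2.151, 8.602),
]


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request.

    Assumption: the tier is chosen by input size, and the whole
    request (input and output) is billed at that tier's rates.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("counts must be non-negative")
    for upper, in_rate, out_rate in TIERS:
        if input_tokens <= upper:
            return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000
    raise ValueError("input exceeds the largest listed tier")


if __name__ == "__main__":
    print(f"short prompt: ${estimate_cost(10_000, 1_000):.6f}")
    print(f"long prompt:  ${estimate_cost(200_000, 1_000):.6f}")
```

With the same 1,000-unit output, a 200,000-unit prompt costs roughly 36 times as much as a 10,000-unit prompt under these assumptions, because both the volume and the per-unit rate increase.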

How does the closed-source approach affect adoption?

Unlike previous Qwen releases, this model is not open-weight. Access is restricted to APIs and partner platforms. This choice highlights Alibaba's focus on commercialization, but it may slow broader adoption in research and open-source communities.

Key takeaways

- First trillion-parameter Qwen model: Qwen3-Max exceeds 1T parameters, making it Alibaba's largest and most advanced LLM so far.

- Ultra-long context handling: supports 262K tokens with caching, enabling document processing and extended sessions beyond what most commercial models offer.

- Competitive benchmark performance: outperforms Qwen3-235B and competes with Claude Opus 4, Kimi K2, and DeepSeek-V3.1 on reasoning, coding, and general tasks.

- Emergent reasoning despite the design: although it is not marketed as a reasoning model, early results show structured reasoning capabilities on complex tasks.

- Closed-source, tiered pricing model: available through APIs with token-based pricing; economical for small tasks but costly at higher context lengths, which limits accessibility.

Summary

Qwen3-Max-Preview sets a new scale benchmark among commercial LLMs. Its trillion-parameter design, 262K context length, and strong benchmark results highlight Alibaba's technical depth. However, the closed-source release and steep tiered pricing raise questions about broader accessibility.


Michal Sutter is a data science professional with a master's degree in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at turning complex datasets into actionable insights.


2025-09-06 07:16:00