Sakana AI’s TreeQuest: Deploy multi-model teams that outperform individual LLMs by 30%


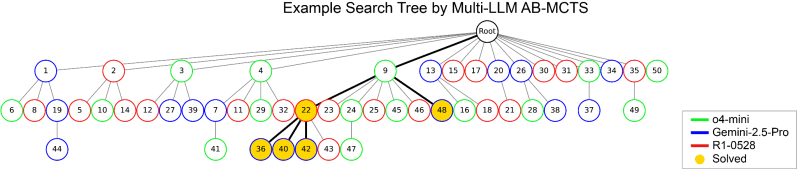

Japanese AI lab Sakana AI has introduced a new technique that allows multiple large language models (LLMs) to cooperate on a single task, effectively creating a “dream team” of AI agents. The method, called Multi-LLM AB-MCTS, enables models to perform trial-and-error and combine their unique strengths to solve problems that are too complex for any individual model.

For enterprises, this approach provides a way to develop more robust and capable AI systems. Instead of being locked into a single provider or model, businesses could dynamically leverage the best aspects of different frontier models, assigning the right AI to the right part of a task to achieve superior results.

The power of collective intelligence

Frontier AI models are evolving rapidly. However, each model has its own distinct strengths and weaknesses, derived from its unique training data and architecture. One might excel at coding, while another excels at creative writing. Sakana AI’s researchers argue that these differences are not a bug, but a feature.

“We see these biases and varied aptitudes not as limitations, but as precious resources for creating collective intelligence,” the researchers state in their blog post. They believe that just as humanity’s greatest achievements come from diverse teams, AI systems can also achieve more by working together. “By pooling their intelligence, AI systems can solve problems that are insurmountable for any single model.”

Thinking longer at inference time

Sakana AI’s new algorithm is an “inference-time scaling” technique (also referred to as “test-time scaling”), an area of research that has become very popular over the past year. While most of the focus in AI has been on “training-time scaling” (making models bigger and training them on larger datasets), inference-time scaling improves performance by allocating more computational resources after a model has already been trained.

One common approach uses reinforcement learning to prompt models to generate longer, more detailed chain-of-thought (CoT) sequences, as seen in popular models such as OpenAI o3 and DeepSeek-R1. Another, simpler method is repeated sampling, where the model is given the same prompt multiple times to generate a variety of potential solutions, similar to a brainstorming session. Sakana AI’s work combines and advances these ideas.
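Repeated sampling is simple enough to sketch in a few lines. The example below is a minimal illustration, not Sakana AI’s code: `best_of_n`, `toy_generate`, and `toy_score` are hypothetical names, with `toy_generate` standing in for one LLM call and `toy_score` for a task-specific checker such as a unit-test pass rate.

```python
import random
from typing import Callable


def best_of_n(prompt: str,
              generate: Callable[[str, random.Random], str],
              score: Callable[[str], float],
              n: int,
              seed: int = 0) -> tuple[str, float]:
    """Sample n independent answers to the same prompt and keep the best-scoring one."""
    rng = random.Random(seed)
    candidates = [generate(prompt, rng) for _ in range(n)]  # n independent "LLM calls"
    scored = [(c, score(c)) for c in candidates]
    return max(scored, key=lambda pair: pair[1])


# Hypothetical stand-ins: the "model" guesses a number, the scorer rewards closeness to 42.
def toy_generate(prompt: str, rng: random.Random) -> str:
    return str(rng.randint(0, 100))


def toy_score(answer: str) -> float:
    return 1.0 - abs(int(answer) - 42) / 100


best, best_score = best_of_n("Guess the number", toy_generate, toy_score, n=16)
print(best, round(best_score, 2))
```

Because every sample is drawn independently, nothing learned from one attempt shapes the next. That is the gap a more strategic version of Best-of-N aims to close by choosing where to spend each additional call.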

“Our framework offers a smarter, more strategic version of Best-of-N (aka repeated sampling),” said Takuya Akiba, a research scientist at Sakana AI and co-author of the paper. “It complements reasoning techniques like long CoT through RL. By dynamically selecting the search strategy and the appropriate LLM, this approach maximizes performance within a limited number of LLM calls, delivering better results on complex tasks.”

How adaptive branching search works

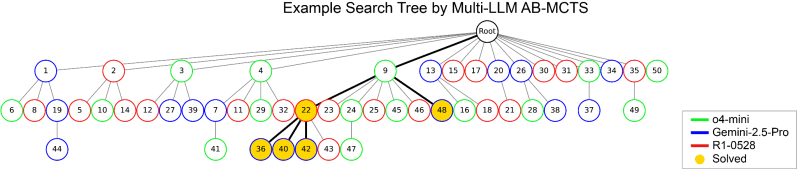

The core of the new method is an algorithm called Adaptive Branching Monte Carlo Tree Search (AB-MCTS). It enables an LLM to perform trial-and-error effectively by intelligently balancing two different search strategies: “searching deeper” and “searching wider.” Searching deeper involves taking a promising answer and repeatedly refining it, while searching wider means generating completely new solutions from scratch. AB-MCTS combines these approaches, allowing the system to improve a good idea but also to pivot and try something new if it hits a dead end or discovers another promising direction.
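The two moves amount to two ways of growing a tree of candidate answers. The hypothetical `Node` class below (an illustration, not TreeQuest’s data model) makes the distinction concrete: going wider attaches a fresh answer to the root, while going deeper attaches a refinement beneath an existing answer.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """One candidate answer in the search tree (the root holds no answer)."""
    answer: Optional[str]
    score: float = 0.0
    children: list["Node"] = field(default_factory=list)

    def add_child(self, answer: str, score: float) -> "Node":
        child = Node(answer, score)
        self.children.append(child)
        return child


def go_wider(root: Node, answer: str, score: float) -> Node:
    # "Search wider": a brand-new solution generated from scratch hangs off the root.
    return root.add_child(answer, score)


def go_deeper(parent: Node, refined: str, score: float) -> Node:
    # "Search deeper": a refinement of an existing answer becomes that answer's child.
    return parent.add_child(refined, score)


def best_score_and_depth(root: Node) -> tuple[float, int]:
    """Return (best score, depth where it was found) via an iterative depth-first walk."""
    best = (float("-inf"), 0)
    stack = [(child, 1) for child in root.children]
    while stack:
        node, depth = stack.pop()
        best = max(best, (node.score, depth))
        stack.extend((c, depth + 1) for c in node.children)
    return best


root = Node(answer=None)
draft_a = go_wider(root, "draft A", 0.4)
draft_b = go_wider(root, "draft B", 0.6)
draft_b2 = go_deeper(draft_b, "draft B, fixed edge case", 0.9)
print(len(root.children), best_score_and_depth(root))  # → 2 (0.9, 2)
```

In this toy run the best answer sits at depth 2: it came from refining an earlier draft rather than from a fresh attempt.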

To accomplish this, the system uses Monte Carlo Tree Search (MCTS), the decision-making algorithm famously used by DeepMind’s AlphaGo. At each step, AB-MCTS uses probability models to decide whether it is more strategic to refine an existing solution or to generate a new one.
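One standard way to make that kind of probabilistic choice is Thompson sampling. The block below is a simplified sketch under assumptions not taken from the paper (scores $r \in [0, 1]$ and a Beta posterior per option), so AB-MCTS’s actual probability models may differ. At a node with existing children $1, \dots, k$, the search keeps one posterior for generating a new child ($\mathrm{gen}$) and one per child, draws a sample from each, and acts on the largest:

```latex
\begin{aligned}
\tilde{\theta}_{\mathrm{gen}} &\sim \mathrm{Beta}(\alpha_{\mathrm{gen}}, \beta_{\mathrm{gen}}), \qquad
\tilde{\theta}_{i} \sim \mathrm{Beta}(\alpha_{i}, \beta_{i}) \quad (i = 1, \dots, k), \\
a^{*} &= \operatorname*{arg\,max}_{a \in \{\mathrm{gen},\, 1, \dots, k\}} \tilde{\theta}_{a}, \\
(\alpha_{a^{*}}, \beta_{a^{*}}) &\leftarrow (\alpha_{a^{*}} + r,\; \beta_{a^{*}} + 1 - r).
\end{aligned}
```

If $a^{*} = \mathrm{gen}$, the search goes wider by generating a new answer at this node; otherwise it descends into child $a^{*}$ and repeats the decision there, which is how it goes deeper. The score $r$ of the resulting answer then updates the posterior of the option that was chosen. Because choices are sampled rather than fixed, a weaker option still gets occasional tries, which is what lets the search pivot when a direction stops paying off.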

The researchers took this a step further with Multi-LLM AB-MCTS, which decides not only “what” to do (refine or generate) but also “which” LLM should do it. At the start of a task, the system does not know which model is best suited to the problem. It begins by trying a balanced mix of the available LLMs and, as it progresses, learns which models are more effective, allocating more of the workload to them over time.
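Learning which model to call can be framed as a multi-armed bandit. The sketch below is illustrative only: it applies Thompson sampling to three hypothetical models (`model_a`, `model_b`, `model_c`), and the per-model success rates are made-up numbers standing in for real LLM calls, not measurements of any actual model.

```python
import random

# Made-up per-call success probabilities for three hypothetical models (not measurements).
TRUE_SUCCESS = {"model_a": 0.2, "model_b": 0.6, "model_c": 0.3}


def allocate_calls(budget: int, seed: int = 0) -> dict[str, int]:
    """Spend a fixed budget of LLM calls, shifting toward the models that succeed more often."""
    rng = random.Random(seed)
    # Beta(1, 1) prior per model: at the start nothing is known about which model fits the task.
    posterior = {model: [1.0, 1.0] for model in TRUE_SUCCESS}
    calls = {model: 0 for model in TRUE_SUCCESS}
    for _ in range(budget):
        # Thompson sampling: draw a plausible success rate per model and call the top draw.
        draws = {m: rng.betavariate(a, b) for m, (a, b) in posterior.items()}
        model = max(draws, key=draws.get)
        calls[model] += 1
        success = rng.random() < TRUE_SUCCESS[model]  # simulated outcome of one call
        posterior[model][0 if success else 1] += 1.0   # alpha on success, beta on failure
    return calls


print(allocate_calls(budget=1000))
```

Early on, the flat priors spread calls across all three models; as evidence accumulates, most of the budget flows to the model with the highest observed success rate. In Multi-LLM AB-MCTS this model choice is made alongside the refine-or-generate decision rather than on its own.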

Putting the AI “dream team” to the test

The researchers tested their Multi-LLM AB-MCTS system on the ARC-AGI-2 benchmark. ARC (Abstraction and Reasoning Corpus) is designed to test a human-like ability to solve novel visual reasoning problems, which makes it notoriously difficult for AI.

The team used a combination of frontier models, including o4-mini, Gemini 2.5 Pro, and DeepSeek-R1.

The collective of models found correct solutions for more than 30% of the 120 test problems, a score that significantly outperformed any of the models working alone. The system demonstrated the ability to dynamically assign the best model to a given problem. On tasks where a clear path to a solution existed, the algorithm quickly identified the most effective LLM and used it more frequently.

More impressively, the team observed instances where the models solved problems that had been impossible for any single one of them. In one case, a solution generated by the o4-mini model was incorrect. However, the system passed this flawed attempt to DeepSeek-R1 and Gemini 2.5 Pro, which were able to analyze the error, correct it, and ultimately produce the right answer.

“This demonstrates that Multi-LLM AB-MCTS can flexibly combine frontier models to solve previously unsolvable problems, pushing the limits of what is achievable by using LLMs as a collective intelligence,” the researchers write.

“In addition to the individual pros and cons of each model, the tendency to hallucinate can vary significantly among them,” Akiba said. “By creating an ensemble with a model that is less likely to hallucinate, it could be possible to achieve the best of both worlds: powerful logical capabilities and strong groundedness. Since hallucination is a major issue in a business context, this approach could be valuable for its mitigation.”

From research to real-world applications

To help developers and businesses apply this technique, Sakana AI has released the underlying algorithm as an open-source framework called TreeQuest, available under an Apache 2.0 license (usable for commercial purposes). TreeQuest provides a flexible API, allowing users to implement Multi-LLM AB-MCTS for their own tasks with custom scoring and logic.
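The snippet below does not use TreeQuest. It is a dependency-free, hypothetical sketch of the general shape such an API can take: the user supplies one generation function per model plus the scoring logic, and the framework drives the search. The `GenerateFn` signature, `naive_search`, and the toy models are illustrative assumptions, and the driver picks models and parents at random purely to keep the example short; an adaptive policy such as AB-MCTS would make those two choices based on past scores.

```python
import random
from typing import Callable, Optional

State = str
# Hypothetical convention: a generator receives a parent state (None = start from scratch)
# and returns a new state together with its task-specific score in [0, 1].
GenerateFn = Callable[[Optional[State]], tuple[State, float]]


def naive_search(generators: dict[str, GenerateFn], steps: int,
                 seed: int = 0) -> list[tuple[State, float, str]]:
    """Random driver for illustration; returns (state, score, model name) sorted best-first."""
    rng = random.Random(seed)
    nodes: list[tuple[State, float, str]] = []
    for _ in range(steps):
        name = rng.choice(sorted(generators))                    # which "LLM" to call
        parent_state = rng.choice([None] + [n[0] for n in nodes])  # None = wider, else deeper
        state, score = generators[name](parent_state)
        nodes.append((state, score, name))
    return sorted(nodes, key=lambda n: n[1], reverse=True)


# Toy "models": each appends its own token; the custom score rewards longer answers.
def make_toy_model(token: str) -> GenerateFn:
    def generate(parent: Optional[State]) -> tuple[State, float]:
        state = (parent or "") + token
        return state, min(len(state) / 10, 1.0)
    return generate


ranked = naive_search({"model_a": make_toy_model("a"),
                       "model_b": make_toy_model("b")}, steps=20)
print(ranked[0])
```

Keeping generation and scoring in user code is what lets the same search loop serve very different tasks: only the two callables change.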

“While we are in the early stages of applying AB-MCTS to specific business-oriented problems, our research reveals significant potential in several areas,” Akiba said.

Beyond the ARC-AGI-2 benchmark, the team successfully applied AB-MCTS to tasks such as complex algorithmic coding and improving the accuracy of machine learning models.

“AB-MCTS could also be highly effective for problems that require iterative trial-and-error, such as optimizing the performance metrics of existing software,” Akiba said. “For example, it could be used to automatically find ways to improve the response latency of a web service.”
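Akiba’s latency example suggests what a custom score might look like for that kind of task. The sketch below is a hypothetical scoring rule, not something from Sakana AI: it times a request handler with Python’s `time.perf_counter`, takes the median of several runs, and maps the improvement over a baseline onto [0, 1] so a search procedure could compare candidate rewrites. The two handlers are toy stand-ins for a real web service.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(handler: Callable[[], object], runs: int = 20) -> float:
    """Median wall-clock latency of a request handler, in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        handler()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)  # the median resists occasional outliers


def latency_score(candidate_ms: float, baseline_ms: float) -> float:
    """Illustrative rule: 0.0 if no faster than baseline, approaching 1.0 as latency nears 0."""
    if candidate_ms >= baseline_ms:
        return 0.0
    return 1.0 - candidate_ms / baseline_ms


N = 100_000


def slow_handler() -> int:
    # Baseline "service": sums squares 0..N-1 with an explicit loop.
    return sum(i * i for i in range(N))


def fast_handler() -> int:
    # Candidate rewrite: closed-form sum of squares for 0..N-1.
    return (N - 1) * N * (2 * N - 1) // 6


# A faster but wrong candidate must not be rewarded, so check equivalence first.
assert fast_handler() == slow_handler()
baseline = measure_latency_ms(slow_handler)
candidate = measure_latency_ms(fast_handler)
print(round(latency_score(candidate, baseline), 2))
```

The correctness check before timing matters in practice: a search that optimizes speed alone will happily reward rewrites that return the wrong answer faster.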

The release of a practical, open-source tool could pave the way for a new class of more powerful and reliable enterprise AI applications.


2025-07-03 22:00:00